5 Best Open-source Claude Cowork Alternatives

Compare the best open-source Claude Cowork alternatives. Find self-hosted desktop agents for local task automation and secure file management.

Claude Cowork launched in January 2026 as Anthropic’s first true desktop AI agent. Unlike a traditional chatbot, it can plan tasks, access local folders, create and edit files, and execute multi-step workflows with minimal supervision.

However, Claude Cowork also comes with clear limitations. It is currently a research preview, restricted to macOS, and available only to Claude Pro or Max subscribers. Pricing ranges from approximately $20 to $200 per month. The closed-source nature of the product and the tight platform lock-in further limit flexibility for advanced users, teams, and privacy-conscious workflows.

As a result, many users are now searching for open-source Claude Cowork alternatives that offer similar agent-style capabilities without subscription pressure or operating system restrictions. Common requirements include local or self-hosted execution, transparent permission control, flexible model selection, and full ownership of data and workflows.

This article compares the best open-source Claude Cowork alternatives available today. Each project supports autonomous task execution, filesystem access, and multi-step planning, while prioritizing user control, extensibility, and local-first operation. These options allow you to choose your own models, manage data residency, and customize deployment strategies without relying on Anthropic’s proprietary platform.

TL;DR

| Name | Core Backend | Best For |
|---|---|---|
| OpenWork (Different AI) | OpenCode | Developers requiring extensibility |
| Open Claude Cowork (DevAgentForge) | Claude Code / MiniMax | Users wanting a direct GUI clone |
| OpenWork (Accomplish) | Ollama / OpenAI / Anthropic | Privacy & local model users |
| Open Claude Cowork (Composio) | Composio / Claude SDK | Heavy SaaS tool integration |
| Hello-Halo | Claude Code SDK / Any API | General users & remote control |

Top 5 Open-source Claude Cowork Alternatives

1. OpenWork (Different AI)

Best for: Development teams deploying agent systems on remote servers with skill-based workflow management.

Overview:

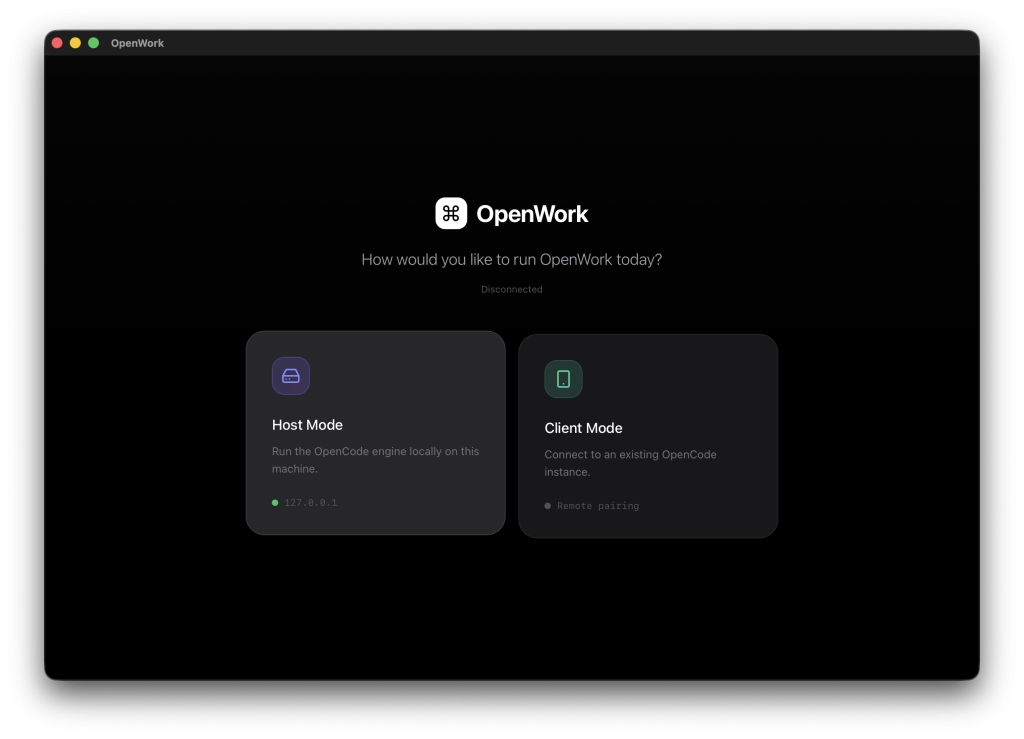

OpenWork presents a dual-mode architecture supporting both local host execution and remote client connections. Host mode spawns OpenCode servers with user-selected project folders as working directories. The interface connects via the OpenCode SDK v2 client, subscribing to server-sent events for real-time updates.
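To make the server-sent events model concrete, here is a minimal Python sketch of how a client turns an SSE text stream into discrete events. It follows the generic SSE wire format (`event:` and `data:` fields, with a blank line dispatching each event) and assumes JSON payloads. The event name `todo.updated` is hypothetical, and in OpenWork the OpenCode SDK client handles this subscription, so treat this only as an illustration of the mechanism.

```python
import json
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield {"event": ..., "data": ...} for each event in an SSE text stream."""
    event_type = "message"          # SSE default when no "event:" field is sent
    data_lines: list[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line dispatches the event accumulated so far.
            if data_lines:
                payload = json.loads("\n".join(data_lines))  # assumes JSON payloads
                yield {"event": event_type, "data": payload}
            event_type, data_lines = "message", []
        elif line.startswith(":"):
            continue                # comment or keep-alive line
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]   # a single leading space after the colon is dropped
            if field == "event":
                event_type = value
            elif field == "data":
                data_lines.append(value)


# Example stream using a hypothetical event name.
stream = [
    "event: todo.updated\n",
    'data: {"id": 1, "status": "completed"}\n',
    "\n",
    ": keep-alive\n",
]
for event in parse_sse(stream):
    print(event["event"], event["data"])
```

Because events are only emitted on a blank line, a UI can update its timeline atomically per event rather than on partial data.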

The skill management system differentiates OpenWork from basic agent wrappers. Users can install skills from OpenPackage repositories, import local skill folders into .opencode/skill directories, and define repeatable workflows as templates. The permission framework surfaces privileged operation requests through UI dialogs before execution.
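The approval-before-execution pattern can be sketched in a few lines of Python. This is an illustration under assumptions, not OpenWork's code: `PermissionRequest`, `run_privileged`, `ask_user`, and the `approve` callback (standing in for the UI dialog) are all hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PermissionRequest:
    tool: str    # hypothetical tool identifier, e.g. "bash"
    detail: str  # what the dialog shows the user, e.g. the exact command


class PermissionDenied(Exception):
    """Raised when the user rejects a privileged operation."""


def run_privileged(request: PermissionRequest,
                   approve: Callable[[PermissionRequest], bool],
                   action: Callable[[], str]) -> str:
    """Execute `action` only after `approve` (the UI dialog stand-in) returns True."""
    if not approve(request):
        raise PermissionDenied(f"{request.tool}: {request.detail}")
    return action()


def ask_user(req: PermissionRequest) -> bool:
    """A console prompt standing in for the graphical approval dialog."""
    return input(f"Allow {req.tool} ({req.detail})? [y/N] ").strip().lower() == "y"
```

The key property is ordering: the action is passed as a callable, so nothing runs unless the approver says yes. A call such as `run_privileged(PermissionRequest("bash", "rm -rf build/"), ask_user, do_cleanup)` would only invoke the (hypothetical) `do_cleanup` after confirmation.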

Execution plan rendering displays OpenCode todos as timeline visualizations. Users track progress across parallel subtasks, monitor resource consumption, and intervene when plans require adjustment.
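As a rough illustration of that progress tracking, the Python sketch below renders a todo list as a plain-text checklist with a completion line. The status values (`pending`, `in_progress`, `completed`, `cancelled`) and the `content` field are assumptions about the todo shape, and the text output only stands in for OpenWork's graphical timeline.

```python
from collections import Counter

# Assumed status vocabulary; the real OpenCode todo schema may differ.
STATUS_ICON = {"pending": "[ ]", "in_progress": "[~]", "completed": "[x]", "cancelled": "[-]"}


def render_timeline(todos: list[dict]) -> str:
    """Render todos in plan order, followed by a completion summary."""
    counts = Counter(t["status"] for t in todos)
    active = len(todos) - counts["cancelled"]   # cancelled steps don't count toward progress
    done = counts["completed"]
    lines = [f"{STATUS_ICON[t['status']]} {t['content']}" for t in todos]
    percent = 100 * done // active if active else 100
    lines.append(f"progress: {done}/{active} ({percent}%)")
    return "\n".join(lines)
```

Excluding cancelled steps from the denominator is a design choice: it keeps the percentage meaningful when a user intervenes and drops part of the plan.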

Features:

- Host and client modes supporting local and remote OpenCode deployments

- Skill manager integrating with OpenPackage plugin ecosystem

- Template system for saving and reusing common workflows

- Folder picker using Tauri dialog plugin for secure access control

- Server-sent events subscription for real-time execution updates

Deployment & Requirements:

Installation requires Node.js with pnpm, plus the Rust toolchain for Tauri compilation. The OpenCode CLI must be available on the system PATH. Development mode runs via pnpm dev, and production builds target desktop platforms through pnpm build:web.

Remote server deployments configure hostname and port parameters. Client mode connects to existing OpenCode instances by URL, allowing distributed team access to shared agent infrastructure.

Advantages Over Cowork:

Remote server architecture enables team collaboration without individual desktop deployments. Plugin system extends capabilities through OpenCode’s native extension mechanism. Template sharing creates reusable workflows across projects.

Limitations Compared to Claude Cowork:

Developer-focused architecture requires technical configuration. No native macOS app distribution. Setup complexity exceeds single-binary installations.

Pros:

- Extensible through OpenCode plugin ecosystem

- Remote deployment supports distributed teams

- Auditable execution logs for compliance workflows

- Permission system for privileged operation control

Cons:

- Requires Rust toolchain for building from source

- Complex setup process compared to packaged applications

- No pre-built binaries for quick installation

Website / Repository: https://github.com/different-ai/openwork

2. Open Claude Cowork (DevAgentForge)

Best for: macOS and Linux users who want Claude Agent SDK functionality without terminal interaction.

Overview:

DevAgentForge created a desktop application that wraps Claude Agent SDK in a native interface. The tool reuses existing Claude Code configuration files, providing compatibility with any model that works through Anthropic-compatible APIs. You can create sessions with custom working directories, view streaming token output with syntax highlighting, and manage tool permissions through interactive approval dialogs.

The architecture separates concerns through Electron layers. The main process handles session lifecycle and SQLite storage. The renderer displays markdown with syntax-highlighted code blocks and real-time tool call visualization. The backend integrates directly with the Claude Agent SDK without modification.
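The article does not describe the app's database schema, so the following Python sketch uses a hypothetical two-table layout to show one way to combine per-session working directories with "safe deletion": a foreign key with ON DELETE CASCADE, applied inside a single transaction so a session and its messages disappear together or not at all. It uses only the standard-library sqlite3 module; the real app's main process is Electron, not Python.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS sessions (
    id  INTEGER PRIMARY KEY,
    cwd TEXT NOT NULL                -- working directory chosen for this session
);
CREATE TABLE IF NOT EXISTS messages (
    id         INTEGER PRIMARY KEY,
    session_id INTEGER NOT NULL REFERENCES sessions(id) ON DELETE CASCADE,
    role       TEXT NOT NULL,
    content    TEXT NOT NULL
);
"""


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK enforcement off by default
    conn.executescript(SCHEMA)
    return conn


def create_session(conn: sqlite3.Connection, cwd: str) -> int:
    with conn:  # commits on success, rolls back on error
        cur = conn.execute("INSERT INTO sessions (cwd) VALUES (?)", (cwd,))
    return cur.lastrowid


def add_message(conn: sqlite3.Connection, session_id: int, role: str, content: str) -> None:
    with conn:
        conn.execute(
            "INSERT INTO messages (session_id, role, content) VALUES (?, ?, ?)",
            (session_id, role, content),
        )


def delete_session(conn: sqlite3.Connection, session_id: int) -> None:
    # One transaction: the cascade removes the session's messages along with it,
    # so a failure never leaves orphaned history behind.
    with conn:
        conn.execute("DELETE FROM sessions WHERE id = ?", (session_id,))
```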

Features:

- Session management with custom working directories per task

- Token-by-token streaming output with markdown rendering

- Tool permission control requiring explicit approval for sensitive operations

- Full compatibility with existing ~/.claude/settings.json configuration

- SQLite-based conversation history with safe deletion

Deployment & Requirements:

Local installation requires Bun or Node.js 18+. The Claude Code CLI must be installed and authenticated before running Open Claude Cowork. You can download pre-built binaries for macOS Apple Silicon, macOS Intel, Windows, or Linux distributions. The application reads API keys and model configurations from the standard Claude Code settings file.
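Reusing the Claude Code settings file mostly comes down to reading JSON from ~/.claude/settings.json. The Python sketch below assumes the file's optional "env" object holds environment variables (as Claude Code settings files can) and overlays it on the current process environment. How Open Claude Cowork itself applies these values is not described here, so the merge logic and function names are assumptions.

```python
import json
import os
from pathlib import Path
from typing import Optional


def load_claude_settings(path: Optional[Path] = None) -> dict:
    """Read Claude Code user settings, returning {} when the file is absent."""
    path = path or Path.home() / ".claude" / "settings.json"
    try:
        return json.loads(path.read_text(encoding="utf-8"))
    except FileNotFoundError:
        return {}


def effective_env(settings: dict) -> dict:
    """Overlay the settings file's "env" block (assumed key) on the current environment."""
    env = dict(os.environ)
    env.update({key: str(value) for key, value in settings.get("env", {}).items()})
    return env
```

The resulting dictionary could then be passed as `env=` to `subprocess.run` when launching an agent process, so the GUI and the CLI see the same API keys and model overrides.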

Hardware requirements match those of Claude Code. Apple Silicon builds run on M1/M2/M3 Macs, and Intel builds support x64 processors. Memory consumption scales with model context windows and concurrent tool executions.

Advantages Over Cowork:

Cross-platform support extends beyond macOS limitations. Linux compatibility addresses server deployment scenarios. Windows builds remove OS restrictions entirely. Model flexibility allows using any Anthropic-compatible API endpoint, including local Claude Code deployments or proxy services.

Limitations Compared to Claude Cowork:

GUI features remain minimal compared to Anthropic’s interface. No built-in connector ecosystem for external services. Skill templates require manual implementation. Browser automation needs separate tooling.

Pros:

- Zero configuration required beyond the existing Claude Code setup

- Complete conversation history stored locally

- The permission system prevents unexpected file modifications

- Active development with regular updates

Cons:

- Requires Claude Code installation as a prerequisite

- Limited visual file preview capabilities

- No integrated browser automation

- Session management lacks cloud synchronization

Website / Repository: https://github.com/DevAgentForge/Claude-Cowork

3. Openwork (Accomplish)

Best for: Users wanting model provider flexibility with local-first privacy guarantees.

Overview:

Accomplish.ai built Openwork around a provider-agnostic architecture. The system supports OpenAI, Anthropic, Google, xAI, and Ollama models through a unified interface. You can select providers at runtime, switching between cloud APIs and local Ollama deployments without reconfiguration.

The desktop application runs agent workflows locally, spawning OpenCode processes through node-pty for task execution. API keys are stored securely in the OS keychain rather than in configuration files, and folder permissions grant workspace access on a per-task basis.
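Per-task folder permissions reduce to one check: does the path an agent wants to touch resolve to a location inside a folder granted for this task? The Python sketch below is an assumption about how such a check could work, not Openwork's implementation. Resolving the path first means ".." segments and existing symlinks cannot be used to escape the granted folder.

```python
from pathlib import Path


def is_within_workspace(candidate: str, granted: list[str]) -> bool:
    """True if `candidate` resolves to a path inside one of the granted folders."""
    target = Path(candidate).expanduser().resolve()
    for folder in granted:
        root = Path(folder).expanduser().resolve()
        # Compare whole path components, so "/tmp/task-420" is not inside "/tmp/task-42".
        if target == root or root in target.parents:
            return True
    return False
```

Checking ancestry component by component, rather than with a string prefix test, avoids the classic bug where a sibling folder with a longer name slips through.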

Automation capabilities extend beyond basic file operations. The system handles document creation with format conversion, file management with content-based organization, tool connections through local API integrations, and custom skill definitions for repeatable tasks.

Features:

- Multi-provider model support, including OpenAI, Anthropic, Google, xAI, Ollama

- Local-first architecture with no cloud dependencies

- Folder-based permission system for controlled filesystem access

- Skill learning for custom automation workflows

- Browser workflow automation through local API integrations

Deployment & Requirements:

macOS builds for Apple Silicon ship as DMG installers, while Windows support remains in development. Installation requires no prerequisites beyond downloading and mounting the disk image. The application bundles all dependencies, including the OpenCode runtime.

Model selection affects deployment requirements. Cloud providers need API keys configured through the settings interface. Ollama deployments require local Ollama installation with desired models pulled.
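Before selecting an Ollama model, it helps to confirm that the model has actually been pulled. Ollama's local server (by default at http://localhost:11434) lists installed models at GET /api/tags. The helper names below are hypothetical, and the parsing relies only on the `models[].name` field of that response.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def pulled_models(tags_json: str) -> set[str]:
    """Extract model names from an Ollama /api/tags response body."""
    return {m["name"] for m in json.loads(tags_json).get("models", [])}


def has_model(tags_json: str, wanted: str) -> bool:
    # An untagged name such as "llama3.2" refers to the "latest" tag.
    name = wanted if ":" in wanted else f"{wanted}:latest"
    return name in pulled_models(tags_json)


def fetch_tags(base_url: str = OLLAMA_URL) -> str:
    """GET /api/tags from a running Ollama server (network call)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return resp.read().decode("utf-8")
```

For example, `has_model(fetch_tags(), "llama3.2")` stays False until the model is downloaded with `ollama pull llama3.2`.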

Advantages Over Cowork:

Model flexibility removes vendor lock-in. Ollama support eliminates API costs for users running local inference. The absence of a subscription reduces long-term operational expenses, and the open-source codebase allows customization.

Limitations Compared to Claude Cowork:

macOS-only deployment matches Cowork’s platform restriction. No connector ecosystem for external services. Skill system requires manual configuration. Browser automation limited compared to Anthropic’s Chrome integration.

Pros:

- No subscription costs beyond model API usage

- Ollama integration for completely local operation

Cons:

- Requires API key management across providers

- No cloud synchronization of sessions

Website / Repository: https://github.com/accomplish-ai/openwork

4. Open Claude Cowork (Composio)

Best for: Users requiring extensive external tool integration through Composio’s 500+ tool ecosystem.

Overview:

Composio developed an Electron application that bridges the Claude Agent SDK and the Opencode SDK with its Tool Router platform. The architecture supports provider switching at runtime: Claude mode leverages Anthropic's agent capabilities, while Opencode mode routes to GPT-5, Grok, GLM, MiniMax, and other providers through unified SDK interfaces.

Tool Router integration provides authenticated access to Gmail, Slack, GitHub, Google Drive, and 500+ additional services. MCP server configuration loads from server/opencode.json, establishing connections to Composio backend infrastructure. Tool calls stream in real-time with input and output visualization in the sidebar.

Session management differs by provider. Claude mode uses SDK session tracking. Opencode mode maintains conversation state through event-based architecture. Both approaches support multi-turn conversations with full context retention.

Features:

- Dual-provider architecture supporting Claude Agent SDK and Opencode SDK

- Composio Tool Router access to 500+ external services

- Real-time tool call visualization with input/output display

- Progress tracking through todo list integration

- Server-sent events streaming for token-by-token responses

- Persistent chat sessions with context maintenance

Deployment & Requirements:

Installation requires Node.js 18+ and the Electron build toolchain. The backend server runs on Express, and the frontend uses vanilla CSS with Marked.js for Markdown rendering. A configured Composio API key enables the tool integrations.

The MCP configuration is written automatically to server/opencode.json during the first request. The file contains the Tool Router URL and authentication headers, and Opencode reads this configuration at startup to load the available tools.
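For readers who customize beyond the defaults, the following Python sketch builds a remote MCP server entry in the shape OpenCode's configuration uses for remote servers (a "type" of "remote", a "url", and optional "headers"). The exact fields Composio writes are not shown in the source, so compare against the generated file; the function names here are hypothetical.

```python
import copy
import json
from pathlib import Path


def build_mcp_config(existing: dict, name: str, url: str, headers: dict) -> dict:
    """Return a copy of `existing` with a remote MCP server entry added or replaced."""
    config = copy.deepcopy(existing)  # leave the caller's dict untouched
    config.setdefault("mcp", {})[name] = {
        "type": "remote",    # reached over HTTP rather than launched as a local command
        "url": url,
        "enabled": True,
        "headers": headers,  # e.g. authentication headers for the Tool Router
    }
    return config


def save_config(path: Path, config: dict) -> None:
    """Write the config as pretty-printed JSON, creating parent folders if needed."""
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
```

Building the entry as a pure function and writing it separately keeps any other keys in server/opencode.json intact. In practice you would load the existing file, pass it through `build_mcp_config` with your own server name, URL, and headers (all placeholders here), then call `save_config`.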

Operation requires a two-terminal setup: the first terminal runs the backend server on port 3001, and the second launches the Electron application. The server must be running before the application loads.
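Because the server must be listening before the Electron app loads, a launcher script can poll the port instead of relying on manual timing. This is a hypothetical helper, not part of the repository: it retries a TCP connection to the given host and port until one succeeds or the timeout expires.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.25) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True       # something is accepting connections
        except OSError:
            time.sleep(interval)  # refused or timed out; try again shortly
    return False
```

Calling `wait_for_port("127.0.0.1", 3001)` after starting the backend, and launching the Electron app only if it returns True, removes the race between the two terminals.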

Advantages Over Cowork:

Extensive tool integrations exceed Cowork’s connector ecosystem. Multi-provider support allows model experimentation. Open architecture permits custom tool development. Local deployment maintains data privacy.

Limitations Compared to Claude Cowork:

Two-process architecture increases setup complexity. No packaged installers for quick deployment. Tool configuration requires a Composio account. MCP file management needs manual oversight when customizing beyond the defaults.

Pros:

- 500+ tools through Composio integration

- Multiple LLM provider options

- Real-time progress visualization

Cons:

- Requires Composio account and API key

- Two-process architecture complicates startup

- No pre-built binaries distributed

Website / Repository: https://github.com/ComposioHQ/open-claude-cowork

5. Halo

Best for: Users requiring mobile access to desktop agent capabilities and cross-platform deployment.

Overview:

Halo wraps Claude Code CLI functionality in a visual interface spanning desktop and web platforms. The remote access architecture allows controlling desktop instances from mobile browsers, tablets, or other computers. Space-based isolation organizes projects into independent workspaces with separate files, conversations, and context.

The artifact rail displays every file generated during execution. Code previews render with syntax highlighting. HTML outputs load in embedded viewers. Image artifacts display inline. Markdown documents render with full formatting support.
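An artifact rail like this has to choose a preview mode from each file's type. The Python sketch below shows that dispatch with hypothetical renderer labels covering the formats mentioned above (code, HTML, images, Markdown). Halo's actual mapping and the "download-only" fallback are assumptions.

```python
from pathlib import PurePath

# Hypothetical renderer labels for the formats the rail previews.
RENDERERS = {
    ".html": "embedded-viewer",
    ".htm": "embedded-viewer",
    ".md": "markdown",
    ".png": "image",
    ".jpg": "image",
    ".jpeg": "image",
    ".gif": "image",
    ".svg": "image",
}
CODE_EXTS = {".py", ".ts", ".js", ".rs", ".go", ".json", ".css"}


def renderer_for(filename: str) -> str:
    """Pick a preview mode from the file extension (case-insensitive)."""
    ext = PurePath(filename).suffix.lower()
    if ext in RENDERERS:
        return RENDERERS[ext]
    if ext in CODE_EXTS:
        return "code-highlighted"
    return "download-only"  # assumed fallback for formats with no preview
```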

AI browser integration embeds a Chromium instance under agent control. The system performs web scraping without external libraries, fills forms programmatically, executes testing workflows, and automates research tasks through browser manipulation.

Features:

- Remote access enabling control from mobile, tablet, or web browsers

- Space system for isolated project workspaces

- Artifact rail with real-time file preview across formats

- AI browser for automated web interaction

- MCP server support for Model Context Protocol extensions

- Multi-language support, including English, Chinese, Spanish

Deployment & Requirements:

Pre-built binaries support macOS Apple Silicon, macOS Intel, Windows, and Linux AppImage distributions. Web access requires enabling remote settings in the desktop application. No external server infrastructure needed for remote functionality.

Installation involves downloading platform-specific packages and running installers. Configuration accepts Anthropic, OpenAI, or DeepSeek API keys. Remote access generates authentication tokens for secure connection from external devices.
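The article does not describe Halo's token format, so the following is only a sketch of the general technique for pairing a remote device. It generates a high-entropy token with Python's secrets module and compares presented tokens in constant time with hmac.compare_digest. The function names are hypothetical.

```python
import hmac
import secrets


def new_access_token() -> str:
    """Generate a random, URL-safe token from 32 random bytes."""
    return secrets.token_urlsafe(32)


def is_authorized(presented: str, expected: str) -> bool:
    # compare_digest avoids leaking, via timing, how many leading characters matched.
    return hmac.compare_digest(presented.encode(), expected.encode())
```

A token like this only protects the connection if it travels over a channel the user trusts, which is why remote access security still depends on network configuration.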

Advantages Over Cowork:

Cross-platform availability removes macOS restriction. Remote access provides mobility unavailable in desktop-only solutions. AI browser integration handles web automation natively.

Limitations Compared to Claude Cowork:

Browser implementation differs from Anthropic’s Chrome extension approach. No built-in connector ecosystem. Template system less developed than Cowork’s skill library. Self-hosted infrastructure required for team deployments.

Pros:

- Access agent from any device via web interface

- Complete cross-platform support including Linux

- AI browser embedded in application

- Dark and light themes with system awareness

Cons:

- Remote access security depends on user network configuration

- Browser automation capabilities vary by website complexity

Website / Repository: https://github.com/openkursar/hello-halo

Which One Fits Your Workflow?

Best for local workflows: Openwork (accomplish-ai) delivers the simplest local deployment with Ollama integration. No API costs, no cloud dependencies, complete local execution.

Best for autonomous task execution: Open Claude Cowork (DevAgentForge) provides the most direct Claude Agent SDK integration. Native session management and tool execution match Claude Code behavior.

Best for developers: OpenWork (different-ai) offers maximum extensibility through OpenPackage plugins and remote server deployment. Skill system enables workflow customization.

Best for non-coders: Halo removes technical barriers with one-click installers, visual artifact preview, and mobile access. AI browser handles web automation without scripting knowledge.

Best for privacy-focused users: All options support local deployment, but Openwork (accomplish-ai) with Ollama provides completely air-gapped operation. No external API calls required when using local models.

Best for tool integrations: Open Claude Cowork (Composio) dominates with 500+ authenticated services through Tool Router. Gmail, Slack, GitHub, Drive integration without custom development.

Final Thoughts

Open-source Claude Cowork alternatives address platform restrictions, subscription costs, and vendor lock-in affecting Anthropic’s implementation. You gain control over model selection, data residency, deployment infrastructure, and feature customization.

The tradeoff involves setup complexity. Pre-configured cloud services reduce friction at the cost of flexibility. Open-source tools require installation, configuration, and occasional troubleshooting in exchange for transparency and control.

When evaluating options, consider current requirements, technical expertise, budget constraints, and long-term platform strategy before choosing between proprietary and open alternatives.

FAQs

Q: What Is Claude Cowork?

A: Claude Cowork is a desktop application that functions as an autonomous agent rather than a passive chatbot. It utilizes the “Claude Code” architecture to plan complex tasks, break them down into steps, and execute them sequentially.

Q: Is Claude Cowork open source?

A: No. Claude Cowork remains proprietary software from Anthropic. The underlying Claude Code technology uses the Claude Agent SDK, which is distributed as a package but not open source. All open-source alternatives listed here provide similar functionality through reverse-engineered implementations or compatible agent frameworks.

Q: Can open-source AI agents replace Claude Cowork?

A: Open-source alternatives replicate core functionality including filesystem access, multi-step task execution, and autonomous planning. Capability gaps exist in connector ecosystems, browser automation polish, and GUI refinement. Users comfortable with technical configuration can achieve equivalent results. Non-technical users may find Cowork’s integrated experience smoother for initial adoption.

Q: Do these tools run locally?

A: All tools listed support local deployment. Openwork (accomplish-ai) with Ollama runs completely offline. Other options require API access for model inference but execute all file operations locally. Remote deployment options exist for OpenWork (different-ai) when team collaboration needs justify server infrastructure.

Q: Do they require paid APIs?

A: Model access determines costs. Ollama integration in Openwork (accomplish-ai) eliminates API expenses. Claude Agent SDK implementations require Anthropic API keys with per-token billing. Multi-provider tools accept OpenAI, Google, or xAI keys based on user preference. Total costs depend on usage volume and selected model tiers.

Q: Are open-source AI agents safe to use?

A: Safety depends on deployment practices. All listed tools execute code with filesystem access, creating potential for unintended modifications. Permission systems mitigate risks by requiring approval for destructive operations. You should review tool outputs before approval, limit workspace access to non-critical directories, and maintain backups of important files. Open source code allows security audits unavailable with proprietary software.
