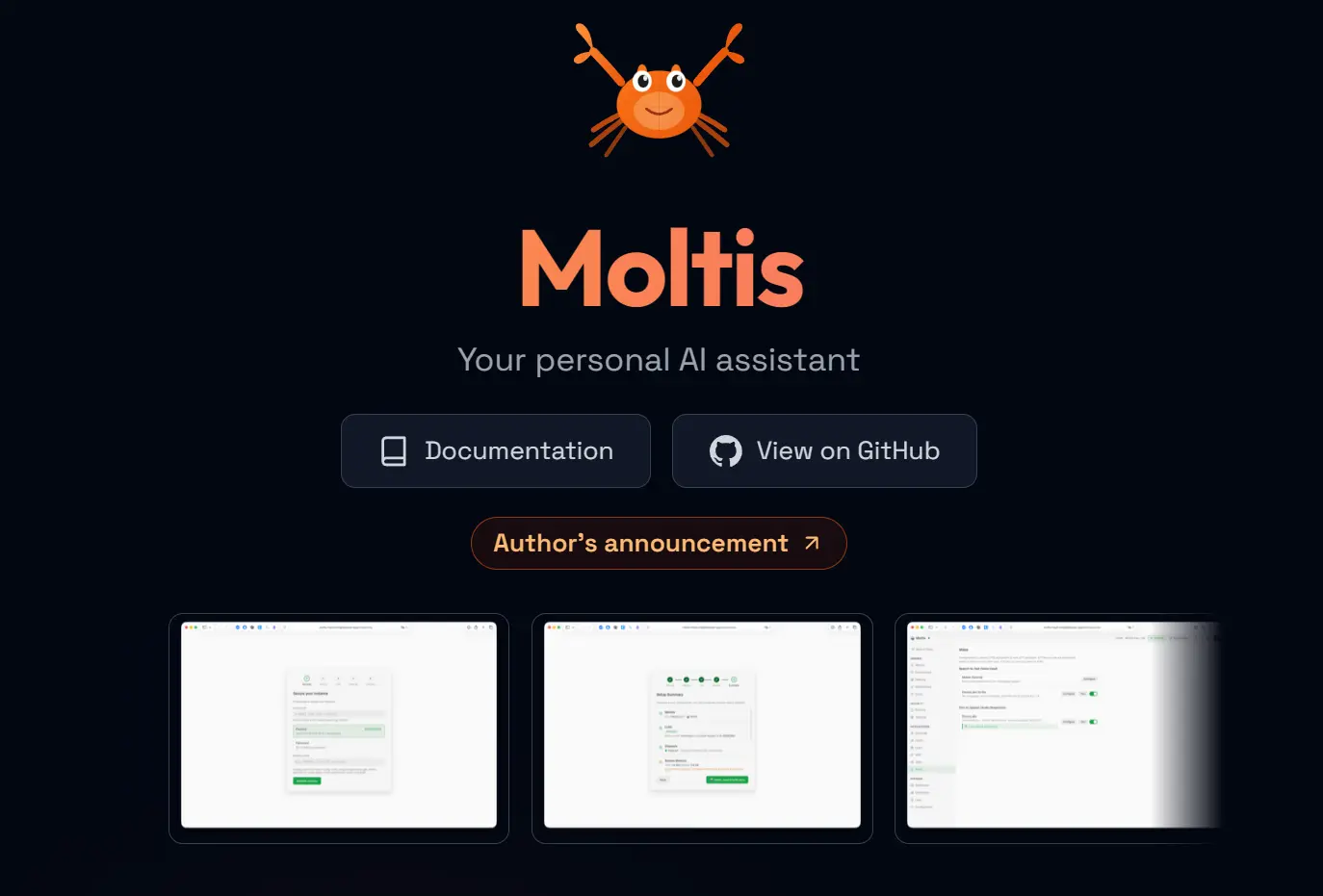

Moltis is an open-source personal AI assistant written in Rust, designed as an alternative to the popular OpenClaw project.

It compiles into a single binary without requiring external runtimes like Node.js or Python.

You can run it on your own hardware to manage AI interactions, execute tools, and maintain long-term memory.

Features

- Multi-provider LLM support: Works with OpenAI Codex, GitHub Copilot, and local LLMs.

- Streaming responses: Tokens stream in real time, even when tools are enabled. Tool call arguments are streamed incrementally, as deltas, while the model generates them.

- Communication channels: Telegram integration ships now, with an extensible channel abstraction for adding others.

- Web gateway: HTTP and WebSocket server with a built-in web UI.

- Session persistence: SQLite-backed conversation history with per-session run serialization to prevent corruption.

- Agent-level timeout: Configurable wall-clock timeout (default 600 seconds) prevents runaway executions.

- Sub-agent delegation: The `spawn_agent` tool lets the LLM delegate tasks to child agent loops, with nesting depth limits and tool filtering.

- Message queue modes: Choose `followup` (replay each queued message as a separate run) or `collect` (concatenate and send once) for messages arriving during an active run.

- Tool result sanitization: Strips base64 data URIs and long hex blobs, and truncates oversized results before feeding them back to the LLM (configurable limit, default 50 KB).

- Memory and knowledge base: Embeddings-powered long-term memory with hybrid vector + keyword search.

- Skills: Extensible skill system with support for existing skill repositories.

- Hook system: Lifecycle hooks with priority ordering, parallel dispatch for read-only events, circuit breaker, dry-run mode, and HOOK.md-based discovery. Bundled hooks include boot-md, session-memory, and command-logger.

- Web browsing: Web search (Brave, Perplexity) and URL fetching with readability extraction and SSRF protection.

- Voice support: Text-to-speech and speech-to-text with multiple cloud and local providers. Configure from the Settings UI.

- Scheduled tasks: Cron-based task execution built in.

- OAuth flows: Built-in OAuth2 for provider authentication.

- TLS support: Automatic self-signed certificate generation on first run.

- Observability: OpenTelemetry tracing with OTLP export and Prometheus metrics.

- MCP support: Connect to MCP servers over stdio or HTTP/SSE, with health polling and automatic restart on crash.

- Parallel tool execution: Multiple tool calls in one turn run concurrently via `futures::join_all`.

- Sandboxed execution: Docker, Podman, and Apple Container backends with pre-built images, configurable packages, and per-session isolation.

- Authentication: Password, passkey (WebAuthn), and API key support with first-run setup code flow.

- Endpoint throttling: Per-IP request throttling for unauthenticated traffic with strict limits on login attempts.

- WebSocket security: Origin validation prevents Cross-Site WebSocket Hijacking.

- Onboarding wizard: Guided setup for the agent's identity (name, emoji, creature, vibe, soul) and your user profile.

- Config validation: `moltis config check` validates configuration files, detects misspelled fields with suggestions, and reports security warnings.

- Zero-config startup: `moltis` runs the gateway by default; no subcommand is needed.

- Tailscale integration: Expose the gateway over your tailnet via Tailscale Serve or Funnel, with status monitoring and mode switching from the web UI.
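To make the tool result sanitization step concrete, here is a minimal Rust sketch. It is not Moltis's implementation: the function names, the placeholder strings, and the 64-character threshold for a "long" hex blob are assumptions. Only the overall behavior (strip base64 data URIs and long hex runs, then truncate to a byte limit that defaults to 50 KB) comes from the feature list above.

```rust
/// Hypothetical default, mirroring the documented 50 KB limit.
const DEFAULT_MAX_BYTES: usize = 50 * 1024;
/// Assumption: runs of at least this many hex digits count as "long hex blobs".
const MIN_HEX_BLOB: usize = 64;

/// Replace `data:...;base64,...` tokens with a short placeholder.
fn strip_data_uris(input: &str) -> String {
    let mut out = String::with_capacity(input.len());
    let mut rest = input;
    while let Some(start) = rest.find("data:") {
        out.push_str(&rest[..start]);
        let candidate = &rest[start..];
        // Assume the URI ends at whitespace, a quote, or a closing parenthesis.
        let end = candidate
            .find(|c: char| c.is_whitespace() || c == '"' || c == '\'' || c == ')')
            .unwrap_or(candidate.len());
        let token = &candidate[..end];
        if token.contains(";base64,") {
            out.push_str("[data URI removed]");
        } else {
            out.push_str(token); // non-base64 data URIs are kept as-is
        }
        rest = &candidate[end..];
    }
    out.push_str(rest);
    out
}

/// Append a finished run of hex digits, or a placeholder if it is too long.
fn flush_hex_run(out: &mut String, run: &mut String) {
    if run.len() >= MIN_HEX_BLOB {
        out.push_str("[hex blob removed]");
    } else {
        out.push_str(run.as_str());
    }
    run.clear();
}

/// Replace long contiguous runs of hex digits (hashes, dumps) with a placeholder.
fn strip_hex_blobs(input: &str) -> String {
    let mut out = String::with_capacity(input.len());
    let mut run = String::new();
    for c in input.chars() {
        if c.is_ascii_hexdigit() {
            run.push(c);
        } else {
            flush_hex_run(&mut out, &mut run);
            out.push(c);
        }
    }
    flush_hex_run(&mut out, &mut run);
    out
}

/// Cut to at most `max_bytes`, backing off to a UTF-8 character boundary.
fn truncate_utf8(input: &str, max_bytes: usize) -> String {
    if input.len() <= max_bytes {
        return input.to_string();
    }
    let mut cut = max_bytes;
    while !input.is_char_boundary(cut) {
        cut -= 1;
    }
    format!("{}\n[truncated {} bytes]", &input[..cut], input.len() - cut)
}

/// Full pipeline: data URIs first (so their base64 never reaches the hex pass),
/// then hex blobs, then the size limit.
fn sanitize_tool_result(raw: &str, max_bytes: usize) -> String {
    truncate_utf8(&strip_hex_blobs(&strip_data_uris(raw)), max_bytes)
}
```

Truncation backs off to a character boundary so multi-byte text is never split, and the marker is appended after the cut, so the returned string can slightly exceed `max_bytes`.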
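Parallel tool execution follows a simple pattern: start every tool call from a turn at once, then collect the results in the order the model requested them. Moltis does this with `futures::join_all` over async futures; to keep this sketch free of external crates it swaps in scoped OS threads (`std::thread::scope`), which gives the same ordering guarantee. `ToolCall`, `run_tool`, and `run_all` are hypothetical names, and the sleep only simulates tool latency.

```rust
use std::thread;
use std::time::Duration;

/// Hypothetical representation of one tool call requested by the model.
#[derive(Debug, Clone)]
struct ToolCall {
    id: String,
    name: String,
    delay_ms: u64, // simulated tool latency
}

/// Placeholder tool runner: sleeps, then returns a result string.
fn run_tool(call: &ToolCall) -> String {
    thread::sleep(Duration::from_millis(call.delay_ms));
    format!("{}:{} done", call.id, call.name)
}

/// Start every call concurrently, then join in the original order, which is
/// the same ordering `futures::join_all` gives for a list of futures.
fn run_all(calls: &[ToolCall]) -> Vec<String> {
    thread::scope(|s| {
        let handles: Vec<_> = calls
            .iter()
            .map(|call| s.spawn(move || run_tool(call)))
            .collect();
        handles
            .into_iter()
            .map(|h| h.join().expect("tool thread panicked"))
            .collect()
    })
}
```

With calls taking 300, 300, and 10 ms, the turn finishes in roughly 300 ms rather than the roughly 610 ms a sequential loop would need.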
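The two message queue modes differ only in how messages that arrive mid-run become the input for what runs next. A minimal sketch, assuming hypothetical names (`QueueMode`, `drain_queue`) and a blank-line separator for `collect`; the separator and data types Moltis actually uses are not documented in this article.

```rust
/// The two documented queue modes.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum QueueMode {
    Followup, // replay each queued message as its own run
    Collect,  // concatenate queued messages into a single run
}

/// Turn the messages queued during an active run into the inputs for the
/// next run(s). The "\n\n" separator used by `Collect` is an assumption.
fn drain_queue(mode: QueueMode, queued: Vec<String>) -> Vec<String> {
    if queued.is_empty() {
        return Vec::new();
    }
    match mode {
        QueueMode::Followup => queued,
        QueueMode::Collect => vec![queued.join("\n\n")],
    }
}
```

`followup` keeps each message's turn boundaries intact at the cost of extra runs, while `collect` saves runs but leaves it to the model to untangle several messages at once.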
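Sub-agent delegation with depth limits and tool filtering can be modeled as a context that each child derives from its parent. This sketch assumes a `max_depth` counter and that a child may only use tools its parent already has, narrowed to the ones it requests. `AgentContext`, `spawn_child`, and every tool name other than `spawn_agent` are hypothetical, and Moltis's actual limits and filtering rules may differ.

```rust
/// Hypothetical per-loop context handed to each agent.
#[derive(Debug, Clone)]
struct AgentContext {
    depth: u32,
    max_depth: u32,
    allowed_tools: Vec<String>,
}

#[derive(Debug, PartialEq, Eq)]
enum SpawnError {
    DepthLimitReached { depth: u32, max_depth: u32 },
}

impl AgentContext {
    fn root(max_depth: u32, tools: &[&str]) -> Self {
        AgentContext {
            depth: 0,
            max_depth,
            allowed_tools: tools.iter().map(|t| t.to_string()).collect(),
        }
    }

    /// Context for a child created by a `spawn_agent`-style call. With
    /// `max_depth = 2`, the root can have children and grandchildren, no deeper.
    fn spawn_child(&self, requested: &[&str]) -> Result<AgentContext, SpawnError> {
        if self.depth >= self.max_depth {
            return Err(SpawnError::DepthLimitReached {
                depth: self.depth,
                max_depth: self.max_depth,
            });
        }
        // A child never gains tools: keep only the parent's tools it asked for.
        let allowed_tools = self
            .allowed_tools
            .iter()
            .filter(|t| requested.contains(&t.as_str()))
            .cloned()
            .collect();
        Ok(AgentContext {
            depth: self.depth + 1,
            max_depth: self.max_depth,
            allowed_tools,
        })
    }
}
```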
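Hybrid search combines a semantic score from embeddings with a lexical score from keyword matching. The sketch below blends cosine similarity and query-term overlap with a weighted sum. Moltis's actual fusion method, tokenizer, and weights are not stated in this article, so treat `alpha`, `keyword_score`, and the `Chunk` type as assumptions; production systems often use BM25 for the keyword side or reciprocal rank fusion to merge the two rankings.

```rust
use std::collections::HashSet;

/// A stored memory chunk with a precomputed embedding (hypothetical shape).
struct Chunk {
    text: String,
    embedding: Vec<f32>,
}

fn cosine(a: &[f32], b: &[f32]) -> f32 {
    let dot: f32 = a.iter().zip(b).map(|(x, y)| x * y).sum();
    let na = a.iter().map(|x| x * x).sum::<f32>().sqrt();
    let nb = b.iter().map(|x| x * x).sum::<f32>().sqrt();
    if na == 0.0 || nb == 0.0 { 0.0 } else { dot / (na * nb) }
}

/// Fraction of query terms found in the text (naive whitespace tokens, lowercase).
fn keyword_score(query: &str, text: &str) -> f32 {
    let text_lower = text.to_lowercase();
    let words: HashSet<&str> = text_lower.split_whitespace().collect();
    let terms: Vec<String> = query.split_whitespace().map(|t| t.to_lowercase()).collect();
    if terms.is_empty() {
        return 0.0;
    }
    let hits = terms.iter().filter(|t| words.contains(t.as_str())).count();
    hits as f32 / terms.len() as f32
}

/// Score = alpha * vector similarity + (1 - alpha) * keyword overlap; top `k`.
fn hybrid_search<'a>(
    chunks: &'a [Chunk],
    query: &str,
    query_emb: &[f32],
    alpha: f32,
    k: usize,
) -> Vec<(&'a str, f32)> {
    let mut scored: Vec<(&str, f32)> = chunks
        .iter()
        .map(|c| {
            let vector = cosine(&c.embedding, query_emb);
            let keyword = keyword_score(query, &c.text);
            (c.text.as_str(), alpha * vector + (1.0 - alpha) * keyword)
        })
        .collect();
    scored.sort_by(|a, b| b.1.total_cmp(&a.1)); // highest score first
    scored.truncate(k);
    scored
}
```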
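Per-IP throttling can be sketched as a fixed-window counter keyed by client address. The limits, window length, and fixed-window strategy are assumptions for illustration; the article only says unauthenticated traffic is throttled per IP, with stricter limits on login attempts. Under that reading you would run two instances: a generous one for general unauthenticated requests and a tight one for the login endpoint.

```rust
use std::collections::HashMap;
use std::net::IpAddr;
use std::time::{Duration, Instant};

/// Fixed-window, per-IP request limiter (hypothetical stand-in).
struct Throttle {
    limit: u32,
    window: Duration,
    hits: HashMap<IpAddr, (Instant, u32)>, // (window start, requests in window)
}

impl Throttle {
    fn new(limit: u32, window: Duration) -> Self {
        Throttle { limit, window, hits: HashMap::new() }
    }

    /// Returns true if a request from `ip` at time `now` is allowed.
    fn check(&mut self, ip: IpAddr, now: Instant) -> bool {
        let entry = self.hits.entry(ip).or_insert((now, 0));
        if now.duration_since(entry.0) >= self.window {
            *entry = (now, 0); // window expired: start a fresh one
        }
        if entry.1 < self.limit {
            entry.1 += 1;
            true
        } else {
            false
        }
    }
}
```

A fixed window is the simplest choice but allows a burst of up to twice the limit around a window boundary; a sliding window or token bucket smooths that out.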

Use Cases

- Personal automation that respects privacy: Run scheduled tasks like daily research briefings or inbox summaries. Moltis processes everything locally and only contacts LLM providers with the specific queries you authorize.

- Development assistant with sandboxed execution: Give the agent access to your codebase and let it run tests, check for issues, or generate boilerplate. Every command executes in an isolated container, so you don’t have to worry about a hallucinated script wiping your home directory.

- Multi-channel personal assistant: Configure Moltis to be available on Telegram while maintaining coherent context across conversations. You can message it from your phone, and it remembers what you discussed in the web UI yesterday. The session persistence handles this automatically.

- Agent workflows: Use sub-agent delegation to build multi-step pipelines. One agent handles research, another writes code, and a third reviews it. Moltis manages the orchestration and passes context between them.

Installation

Option A: One-Liner (macOS / Linux)

```bash
curl -fsSL https://www.moltis.org/install.sh | sh
```

Option B: Homebrew

```bash
brew install moltis-org/tap/moltis
```

Option C: Docker Image

```bash
docker pull ghcr.io/moltis-org/moltis:latest
```

Option D: Build from Source (Rust)

```bash
git clone https://github.com/moltis-org/moltis.git
cd moltis
cargo build --release
```

Running the Gateway

Standard Local Run

If you installed the binary directly, start the gateway. It will automatically detect your Docker socket if it is in the standard location.

```bash
# Starts the gateway (default command)
moltis gateway
```

Running via Docker (Sandboxing Setup)

If you run Moltis itself in a container, you must mount the host’s Docker socket. This allows Moltis to spawn sibling containers for its sandboxed tools.

Docker / OrbStack:

```bash
docker run -d \
  --name moltis \
  -p 13131:13131 \
  -p 13132:13132 \
  -v moltis-config:/home/moltis/.config/moltis \
  -v moltis-data:/home/moltis/.moltis \
  -v /var/run/docker.sock:/var/run/docker.sock \
  ghcr.io/moltis-org/moltis:latest
```

Podman (Rootless):

```bash
podman run -d \
  --name moltis \
  -p 13131:13131 \
  -p 13132:13132 \
  -v moltis-config:/home/moltis/.config/moltis \
  -v moltis-data:/home/moltis/.moltis \
  -v /run/user/$(id -u)/podman/podman.sock:/var/run/docker.sock \
  ghcr.io/moltis-org/moltis:latest
```

Podman (Rootful):

```bash
podman run -d \
  --name moltis \
  -p 13131:13131 \
  -p 13132:13132 \
  -v moltis-config:/home/moltis/.config/moltis \
  -v moltis-data:/home/moltis/.moltis \
  -v /run/podman/podman.sock:/var/run/docker.sock \
  ghcr.io/moltis-org/moltis:latest
```

First-Run Configuration

- Access the UI: Open http://localhost:3000 (or http://localhost:13131 if using Docker).

- Authentication: The terminal logs will display a Setup Code. Enter this code in the Web UI to set your admin password or register a WebAuthn passkey.

- TLS Certificates: Moltis generates a self-signed certificate. To stop browser warnings, download the CA certificate from http://localhost:13132/certs/ca.pem and add it to your system’s trust store (Keychain on macOS, `update-ca-certificates` on Linux).

- Providers: Edit the generated `moltis.toml` file (located in `~/.config/moltis/` or the mounted volume) to add your API keys for OpenAI, Anthropic, or other providers.

Control Reference

CLI Commands

| Command | Subcommand | Description |
|---|---|---|
| `moltis` | `gateway` | Starts the gateway server (default if no subcommand is given). |
| `moltis` | `config check` | Validates `moltis.toml`, detects typos, and reports security warnings. |
| `moltis` | `hooks list` | Lists all discovered hooks from HOOK.md files. |
| `moltis` | `hooks list --eligible` | Lists only hooks that meet current system requirements. |
| `moltis` | `hooks list --json` | Outputs the hook list in JSON format. |
| `moltis` | `hooks info <name>` | Shows detailed metadata for a specific hook. |
| `moltis` | `sandbox list` | Lists pre-built sandbox Docker images. |
| `moltis` | `sandbox build` | Manually triggers a build of the sandbox image based on config. |
| `moltis` | `sandbox clean` | Removes all pre-built sandbox images to free space. |
| `moltis` | `sandbox remove <tag>` | Removes a specific sandbox image tag. |
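`moltis config check` reports misspelled fields with suggestions. One common way to produce such hints is edit distance: if an unknown key is within a couple of edits of a known one, suggest it. The sketch below uses Levenshtein distance with a threshold of 2 and the `[memory]` keys from the settings table later in this article; the function names, the threshold, and the message format are assumptions, not Moltis's code.

```rust
/// Classic Levenshtein distance: insert, delete, and substitute each cost 1.
fn levenshtein(a: &str, b: &str) -> usize {
    let b_chars: Vec<char> = b.chars().collect();
    let mut prev: Vec<usize> = (0..=b_chars.len()).collect();
    for (i, ca) in a.chars().enumerate() {
        let mut cur = vec![i + 1; b_chars.len() + 1];
        for (j, &cb) in b_chars.iter().enumerate() {
            let cost = if ca == cb { 0 } else { 1 };
            cur[j + 1] = (prev[j] + cost).min(prev[j + 1] + 1).min(cur[j] + 1);
        }
        prev = cur;
    }
    prev[b_chars.len()]
}

/// Closest known key within `max_distance` edits (first one wins on ties).
fn suggest<'a>(unknown: &str, known: &[&'a str], max_distance: usize) -> Option<&'a str> {
    known
        .iter()
        .map(|k| (levenshtein(unknown, k), *k))
        .filter(|(d, _)| *d <= max_distance)
        .min_by_key(|(d, _)| *d)
        .map(|(_, k)| k)
}

/// Report unknown keys in one section, with "did you mean" hints.
fn check_section(section: &str, keys: &[&str], known: &[&str]) -> Vec<String> {
    keys.iter()
        .filter(|k| !known.contains(*k))
        .map(|k| match suggest(k, known, 2) {
            Some(s) => format!("[{section}] unknown field `{k}`, did you mean `{s}`?"),
            None => format!("[{section}] unknown field `{k}`"),
        })
        .collect()
}
```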

Hook Events

Hooks can run scripts on the following lifecycle events. Each hook is defined in a HOOK.md file.

| Event | Trigger Point |
|---|---|
| `BeforeToolCall` | Fires before the agent executes a tool (can modify or block the call). |
| `AfterToolCall` | Fires after a tool execution completes. |
| `BeforeAgentStart` | Fires when an agent loop begins. |
| `AgentEnd` | Fires when an agent loop finishes. |
| `MessageReceived` | Fires when the gateway receives a user message. |
| `MessageSending` | Fires before the gateway sends a message to the LLM. |
| `MessageSent` | Fires after the message is successfully sent. |
| `BeforeCompaction` | Fires before session history is summarized/compacted. |
| `AfterCompaction` | Fires after session compaction is complete. |
| `ToolResultPersist` | Fires when a tool result is saved to history. |
| `SessionStart` | Fires when a new conversation session begins. |
| `SessionEnd` | Fires when a session is closed. |
| `GatewayStart` | Fires when the Moltis server process starts. |
| `GatewayStop` | Fires when the Moltis server shuts down. |
| `Command` | Fires on specific user commands. |
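To illustrate how priority ordering, blocking, and the circuit breaker from the feature list might fit together, here is a sketch of dispatching `BeforeToolCall` hooks. Everything in it is hypothetical: real Moltis hooks are discovered from HOOK.md files and run scripts, whereas here handlers are plain Rust functions, and both the "higher priority runs first" rule and the three-failure trip threshold are illustrative choices.

```rust
/// A subset of the lifecycle events from the table above.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum HookEvent {
    BeforeToolCall,
    AfterToolCall,
    SessionStart,
}

/// What a handler may return (hypothetical shape).
#[derive(Debug, Clone, PartialEq)]
enum HookAction {
    Continue,
    ModifyArgs(String),
    Block(String),
}

struct Hook {
    name: &'static str,
    events: Vec<HookEvent>,
    priority: i32, // assumption: higher priority runs first
    handler: fn(&str) -> Result<HookAction, String>,
    failures: u32, // consecutive errors, used by the circuit breaker
}

/// Assumed threshold: skip a hook after this many consecutive errors.
const TRIP_AFTER: u32 = 3;

/// Run matching hooks in priority order; returns the (possibly modified)
/// tool arguments, or an error naming the hook that blocked the call.
fn before_tool_call(hooks: &mut [Hook], args: &str) -> Result<String, String> {
    // Stable sort: ties keep registration order.
    hooks.sort_by(|a, b| b.priority.cmp(&a.priority));
    let mut current = args.to_string();
    for hook in hooks
        .iter_mut()
        .filter(|h| h.events.contains(&HookEvent::BeforeToolCall))
    {
        if hook.failures >= TRIP_AFTER {
            continue; // circuit open: this hook is skipped
        }
        match (hook.handler)(current.as_str()) {
            Ok(HookAction::Continue) => hook.failures = 0,
            Ok(HookAction::ModifyArgs(new_args)) => {
                hook.failures = 0;
                current = new_args;
            }
            Ok(HookAction::Block(reason)) => {
                return Err(format!("{} blocked: {}", hook.name, reason));
            }
            Err(_) => hook.failures += 1, // a failing hook does not stop the call
        }
    }
    Ok(current)
}
```

Read-only events such as `AfterToolCall` could run their hooks in parallel instead, since no handler's output feeds the next one.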

Key Configuration Settings (moltis.toml)

| Section | Setting | Description |
|---|---|---|
| `[agent]` | `timeout` | Hard wall-clock limit for agent runs (default: 600 s). |
| `[tools.exec.sandbox]` | `packages` | List of system packages (apt) to install in the sandbox image. |
| `[memory]` | `backend` | Storage backend (e.g., `"builtin"`). |
| `[memory]` | `citations` | Controls citation behavior (`"auto"`, `"on"`, `"off"`). |
| `[memory]` | `llm_reranking` | Boolean to enable/disable LLM-based result reranking. |
| `[memory]` | `session_export` | Boolean to enable exporting sessions to Markdown memory. |
| `[hooks]` | `events` | Array of strings defining which events a hook listens to. |
| `[hooks]` | `timeout` | Execution time limit for the hook script. |
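The `[agent] timeout` setting is a wall-clock cap on a run. A std-only sketch of the idea runs the work on a background thread and waits on a channel with `recv_timeout`. The names are hypothetical, and since Moltis is built on async Rust (it uses `futures::join_all`), a real implementation would more likely race the run against a timer future. Note the caveat in the comments: abandoning a thread does not stop it.

```rust
use std::sync::mpsc;
use std::thread;
use std::time::Duration;

/// Documented default for `[agent] timeout`.
const DEFAULT_AGENT_TIMEOUT: Duration = Duration::from_secs(600);

#[derive(Debug, PartialEq)]
enum RunOutcome {
    Finished(String),
    TimedOut,
    Failed, // the worker panicked before sending a result
}

/// Run `work` on a background thread and stop waiting after `limit`.
/// Caveat: std threads cannot be killed, so a timed-out worker keeps running;
/// a real agent loop also needs cooperative cancellation.
fn run_with_timeout<F>(work: F, limit: Duration) -> RunOutcome
where
    F: FnOnce() -> String + Send + 'static,
{
    let (tx, rx) = mpsc::channel();
    thread::spawn(move || {
        // After a timeout the receiver is gone; ignore the send error.
        let _ = tx.send(work());
    });
    match rx.recv_timeout(limit) {
        Ok(result) => RunOutcome::Finished(result),
        Err(mpsc::RecvTimeoutError::Timeout) => RunOutcome::TimedOut,
        Err(mpsc::RecvTimeoutError::Disconnected) => RunOutcome::Failed,
    }
}
```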

Pros

- Single Binary: One 60 MB file contains the entire system, including the web UI.

- Security: Sandboxed execution, SSRF protection, WebSocket origin validation, and configurable tool result sanitization.

- Multi-provider: Use OpenAI, local GGUF models, or anything in between through the same interface.

- Observability: OpenTelemetry and Prometheus built in. You can trace agent runs end-to-end.

Cons

- Learning curve: You manage your own infrastructure, LLM API keys, and configuration.

- API costs add up: You still pay for LLM provider APIs.

- Limited channel support: Only Telegram and the web UI ship today. Discord and other channels are planned but not yet available.

Related Resources

- GitHub Repository: Access the source code, release notes, and issue tracker.

- Official Documentation: Detailed guides on configuration, cloud deployment, and hook development.

- OpenClaw: The popular AI assistant project that inspired Moltis.

FAQs

Q: How does Moltis prevent the AI from deleting my files?

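A: Moltis uses sandboxing. When the AI runs a shell command, it executes inside an isolated container (Docker, Podman, or Apple Container). If the AI runs `rm -rf /`, it only deletes the files inside that container, not on your host, unless you have mounted host directories into the sandbox.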

Q: Can I run Moltis on a server instead of my laptop?

A: Yes. Moltis includes one-click deploy configurations for Fly.io, DigitalOcean, and Render. You can use the `--no-tls` flag if your cloud provider handles SSL termination.

Q: Does Moltis support local LLMs offline?

A: Yes. You can configure Moltis to use local GGUF models directly or connect to a local inference server like Ollama. The system also supports local embeddings for offline memory features.

Q: What is the “Setup Code” mentioned in the logs?

A: On the very first run, Moltis generates a one-time code printed to your terminal. You must enter this code in the Web UI to prove you have physical access to the server. This allows you to set your initial password or passkey securely.
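A one-time setup code needs two properties: it can be redeemed only once, and checking it should not leak how close a guess was. The sketch below shows both under stated assumptions: `SetupGate` and `redeem` are hypothetical names, trimming whitespace from the input is a convenience choice, and the code itself would come from a cryptographically secure random source, which std does not provide and which is not shown. The article does not say whether Moltis compares codes in constant time.

```rust
/// Holds the one-time code printed at first start (hypothetical type).
struct SetupGate {
    code: Option<String>, // None once the code has been used
}

#[derive(Debug, PartialEq, Eq)]
enum SetupError {
    AlreadyUsed,
    WrongCode,
}

/// Compare without exiting at the first mismatching byte, so response time
/// does not reveal how many leading characters were right. The length check
/// returns early, which only reveals the code's length.
fn constant_time_eq(a: &[u8], b: &[u8]) -> bool {
    if a.len() != b.len() {
        return false;
    }
    let mut diff = 0u8;
    for (x, y) in a.iter().zip(b) {
        diff |= x ^ y;
    }
    diff == 0
}

impl SetupGate {
    fn new(code: String) -> Self {
        SetupGate { code: Some(code) }
    }

    /// Accepts the code exactly once; on success the gate closes for good.
    fn redeem(&mut self, attempt: &str) -> Result<(), SetupError> {
        let expected = self.code.as_deref().ok_or(SetupError::AlreadyUsed)?;
        if constant_time_eq(expected.as_bytes(), attempt.trim().as_bytes()) {
            self.code = None;
            Ok(())
        } else {
            Err(SetupError::WrongCode)
        }
    }
}
```

For production code, a vetted constant-time comparison (for example from the `subtle` crate) is safer than a hand-rolled loop, because compilers give no timing guarantees for ordinary code.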

Q: How do I add new tools to the agent?

A: Moltis supports the Model Context Protocol (MCP). You can add external MCP servers by editing the mcp-servers.json file or configuring them in the UI. The agent also “self-extends” by creating skills at runtime if enabled.