The market for AI content detectors is growing crowded, with hundreds of tools promising to distinguish machine-generated text from human writing. But in a world where AI can write, rewrite, and “humanize” content, which of these detectors can you actually trust? The answer is critical for educators, developers, and publishers who rely on content authenticity.

To find out, I moved beyond marketing claims and ran a practical, data-driven test. I selected seven of the most popular free AI content detectors available today and evaluated their performance on two distinct text samples: one article generated by AI and then “humanized” to evade detection, and another written by a professional journalist for the BBC.

I recorded the exact accuracy scores for each tool on both AI and human text, calculated their average performance, and ranked them accordingly. Here’s what I found.

Are you looking for Free AI Humanizers to bypass AI content detectors? Check out our Free AI Rewriting Tools.

TL;DR

| Rank | Tool | Average Score |
|---|---|---|
| 1 | Originality.ai | 97.5 |
| 2 | GPTZero | 94.0 |
| 3 | undetectable.ai | 59.0 |
| 4 | StealthWriter | 57.5 |
| 5 | QuillBot AI Detector | 56.5 |
| 6 | GPTinf | 50.5 |
| 7 | ZeroGPT | 50.14 |

How I Tested Each Detector

My testing process was designed to be methodical and fair, ensuring that each tool was evaluated on the same basis. The test was conducted in November 2025 using the free web versions of all seven detectors.

Here is the step-by-step process I followed:

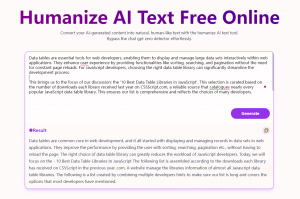

Text Sample Collection: I prepared two distinct articles. The first was an AI-generated text on a technical topic, which I then processed through an AI humanizer tool. This created a sample designed to be difficult to detect. The second was a feature article from the BBC, chosen for its professional quality and complex, human-like sentence structures.

Tool Interface: For each of the seven detectors, I used their publicly available, free web interface. I did not use any paid plans, APIs, or premium features, as the objective was to assess what is available to the average user at no cost.

Data Recording: I pasted each of the two text samples into every detector. Each tool returned a score, typically expressed as a percentage, indicating the likelihood that the text was AI-generated or human-written. I recorded these percentages precisely as they were reported. “AI Accuracy” was the tool’s AI score for the AI-humanized text. “Human Accuracy” was the tool’s human score for the BBC text.

Average Score Calculation: To determine an overall performance rank, I calculated a simple average score for each tool: (AI Accuracy % + Human Accuracy %) / 2. This method gives equal weight to both catching AI and correctly identifying human writing, penalizing tools that excel at one task at the expense of the other.
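For readers who want to reproduce the ranking, here is a minimal Python sketch of that calculation. The percentages are the ones reported in the Full Test Results table; the names `scores`, `average_score`, and `ranking` are my own illustrative choices, not part of any detector's tooling.

```python
# Scores as reported in the results table: (AI accuracy %, human accuracy %).
scores = {
    "Originality.ai": (100, 95),
    "GPTZero": (100, 88),
    "undetectable.ai": (47, 71),
    "StealthWriter": (53, 62),
    "QuillBot AI Detector": (13, 100),
    "GPTinf": (1, 100),
    "ZeroGPT": (5.67, 94.61),
}


def average_score(ai_accuracy, human_accuracy):
    """Simple mean of the two accuracy percentages."""
    return (ai_accuracy + human_accuracy) / 2


# Rank tools from highest to lowest average score.
ranking = sorted(
    ((tool, round(average_score(ai, human), 2)) for tool, (ai, human) in scores.items()),
    key=lambda pair: pair[1],
    reverse=True,
)

for rank, (tool, avg) in enumerate(ranking, start=1):
    print(f"{rank}. {tool}: {avg}")
```

Because both halves carry equal weight, a tool that scores 100 on one sample and close to 0 on the other lands near 50, which is why the most conservative detectors end up at the bottom of the list.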

Full Test Results

The complete dataset reveals significant variation in detector performance. I have organized the results from highest to lowest average accuracy in the table below:

| Rank | Tool | AI Accuracy (%) | Human Accuracy (%) | Average Score | Verdict |
|---|---|---|---|---|---|
| 1 | Originality AI | 100 | 95 | 97.5 | Best Overall Accuracy |
| 2 | GPTZero | 100 | 88 | 94.0 | High AI Detection, Some False Positives |
| 3 | Undetectable AI | 47 | 71 | 59.0 | Moderate Balance |
| 4 | StealthWriter | 53 | 62 | 57.5 | Slightly Aggressive |
| 5 | QuillBot AI Detector | 13 | 100 | 56.5 | Conservative & Human-Friendly |
| 6 | GPTinf | 1 | 100 | 50.5 | Perfect Human Recognition, Misses AI |
| 7 | ZeroGPT | 5.67 | 94.61 | 50.14 | Safe but Low AI Sensitivity |

Insights From the Data

The gap between the top two tools and the rest is substantial. Originality.ai averaged 97.5 percent and GPTZero 94.0 percent, while third-place undetectable.ai managed only 59 percent. Only the top two tools exceeded 90 percent average accuracy, and no other tool reached 60 percent.

The middle tier, consisting of undetectable.ai and StealthWriter, showed partial accuracy on both tests but nothing exceptional.

The bottom three tools performed well on human text but failed almost completely to identify the AI-humanized article.

None of the seven tools perfectly identified both samples, although Originality.ai came close.

Tool-by-Tool Reviews

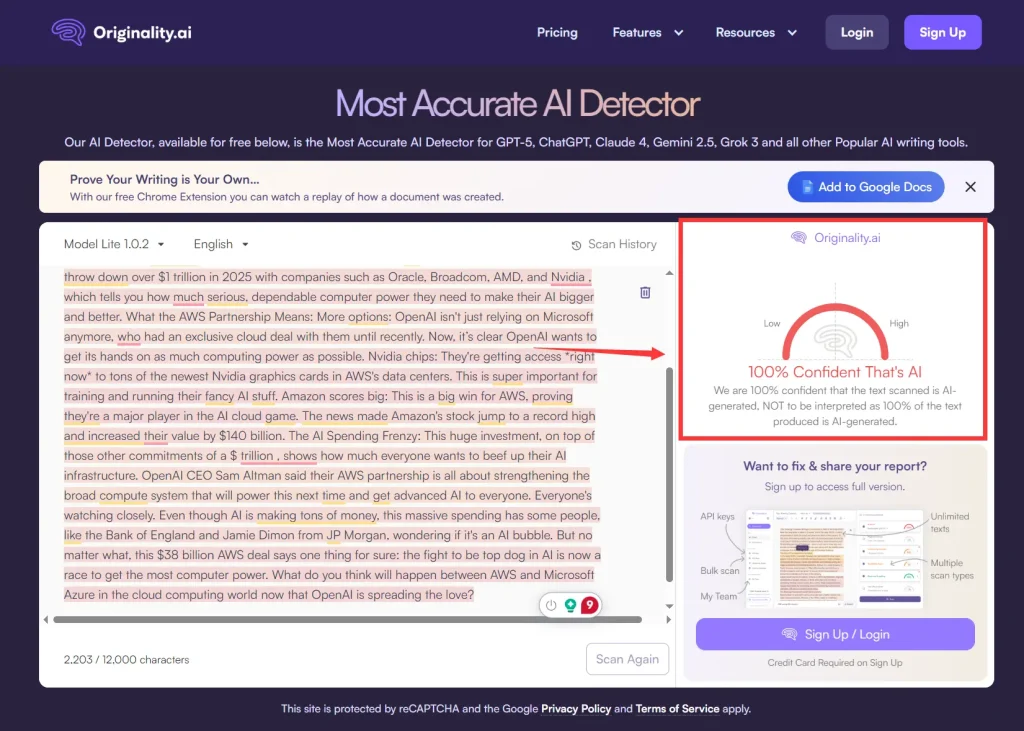

Originality AI

AI Accuracy: 100% | Human Accuracy: 95% | Average: 97.5

Originality.ai delivered the most accurate performance in this test. It correctly identified the entire AI-humanized article as machine-generated while recognizing 95 percent of the BBC article as human-written.

Strengths: Perfect detection of AI content, even after humanization processing. Minimal false positives on genuine human writing. Clear percentage-based results that are easy to interpret.

Weaknesses: Flagged a small portion of professional journalism as potentially AI-generated. The free version has usage limits. The interface requires email registration for access.

Best For: Educators verifying student submissions, editors checking freelance work, and anyone who needs reliable AI detection with minimal false negatives.

Originality.ai proved to be the most dependable free AI content detector I tested, balancing strong AI detection with reasonable accuracy on human text.

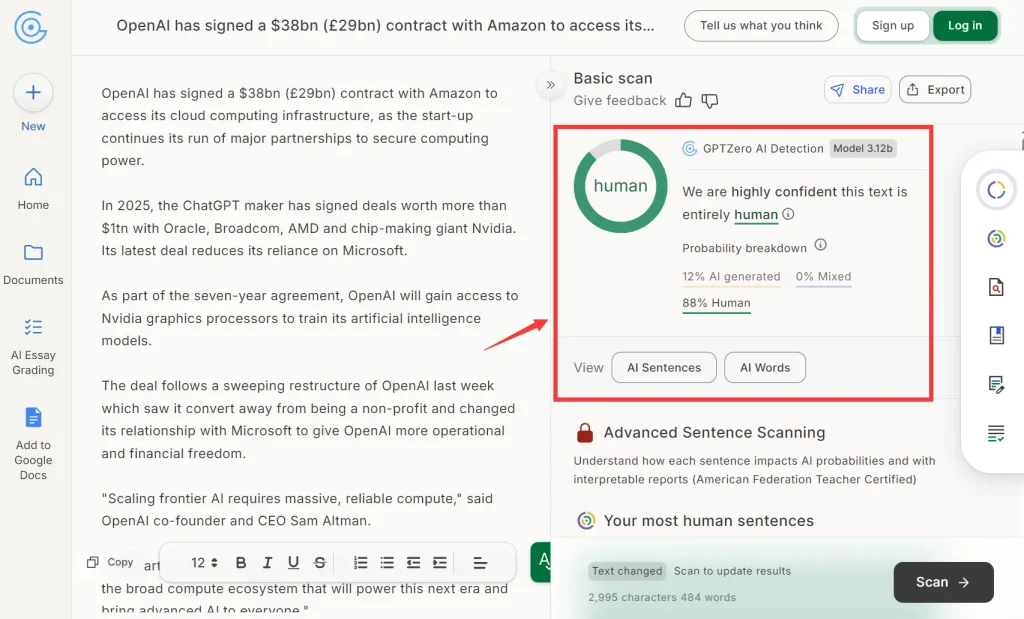

GPTZero

AI Accuracy: 100% | Human Accuracy: 88% | Average: 94.0

GPTZero achieved perfect accuracy in identifying AI content and ranked second overall. It successfully flagged the humanized AI article while correctly identifying most of the BBC article as human-written.

Strengths: Excellent AI detection capability that caught 100 percent of machine-generated content. Provides detailed sentence-by-sentence analysis showing which portions appear AI-generated. No registration required for basic scanning.

Weaknesses: Flagged 12 percent of the BBC article as AI-generated, showing higher false positive rates than Originality.ai. The detailed analysis can be overwhelming for quick checks.

Best For: Academic institutions conducting plagiarism reviews, content teams auditing large volumes of submissions, and users who want granular analysis of suspicious passages.

GPTZero offers strong detection capabilities with detailed reporting, though users should expect occasional false positives on human text.

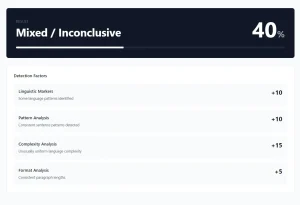

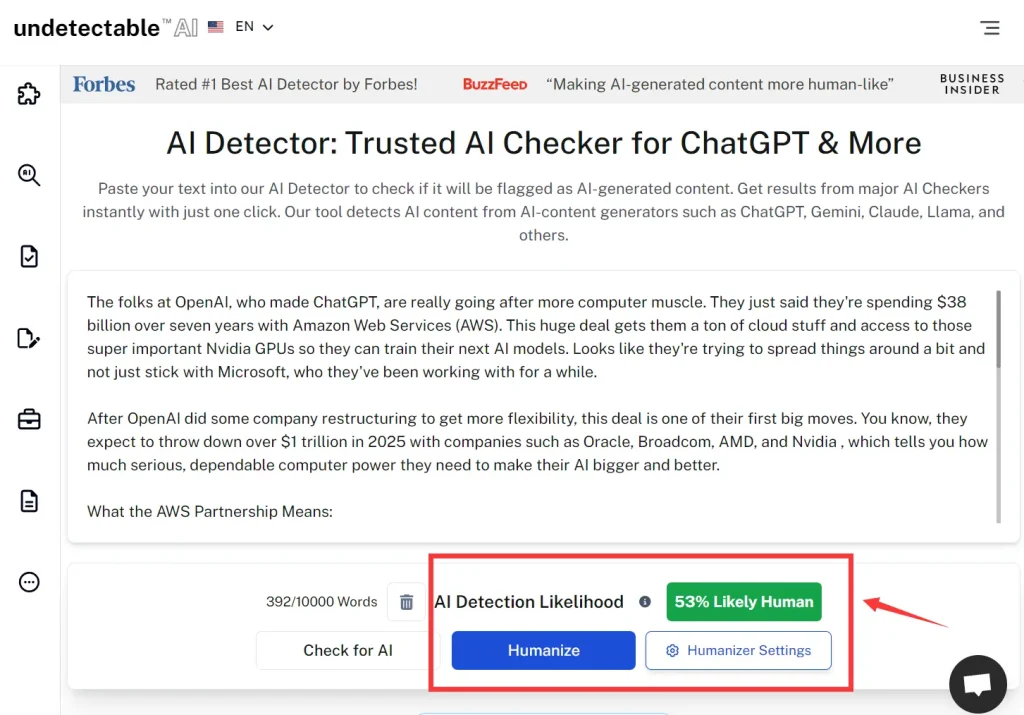

Undetectable AI

AI Accuracy: 47% | Human Accuracy: 71% | Average: 59.0

undetectable.ai showed moderate performance on both test articles. It identified just under half (47 percent) of the AI content and correctly recognized 71 percent of the human writing.

Strengths: Free access without registration requirements. Provides both detection and humanization services in one platform. The interface loads quickly and displays results immediately.

Weaknesses: Missed more than half of the AI-humanized article. Flagged nearly 30 percent of genuine BBC journalism as potentially machine-generated. Results appear less reliable than top performers.

Best For: Casual users wanting a quick general impression of content origin without requiring definitive answers. Writers curious about how their work might be perceived by AI detectors.

The moderate accuracy of undetectable.ai makes it suitable only for low-stakes situations where approximate guidance suffices.

StealthWriter

AI Accuracy: 53% | Human Accuracy: 62% | Average: 57.5

StealthWriter performed slightly below undetectable.ai with 57.5 percent average accuracy. It caught just over half of AI content while correctly identifying 62 percent of human text.

Strengths: Simple interface with straightforward results. Offers both detection and rewriting features. Processes text quickly without requiring account creation.

Weaknesses: Failed to identify 47 percent of the AI-humanized article. Incorrectly flagged 38 percent of the BBC article as AI-generated. The slightly aggressive approach creates notable false positive risks.

Best For: Users conducting preliminary content checks before using more accurate tools. Individuals who need occasional AI detection combined with text rewriting capabilities.

StealthWriter’s middling performance suggests it works better as a supplementary tool than as a primary detection method.

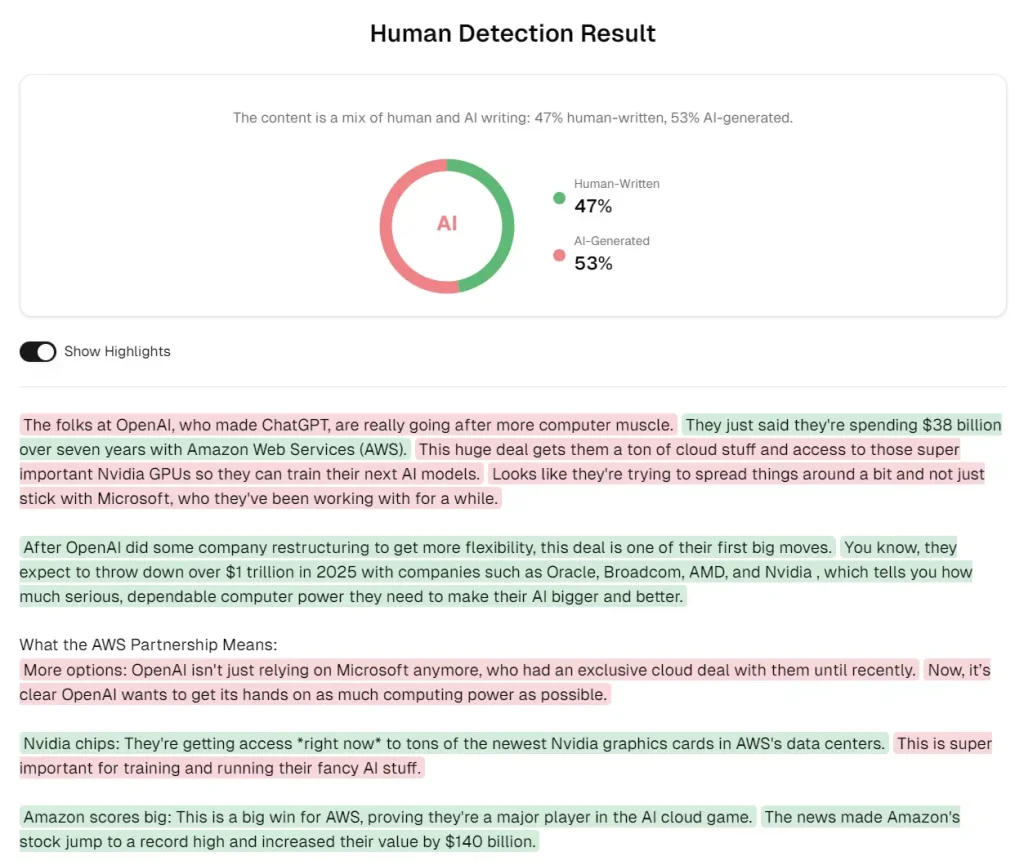

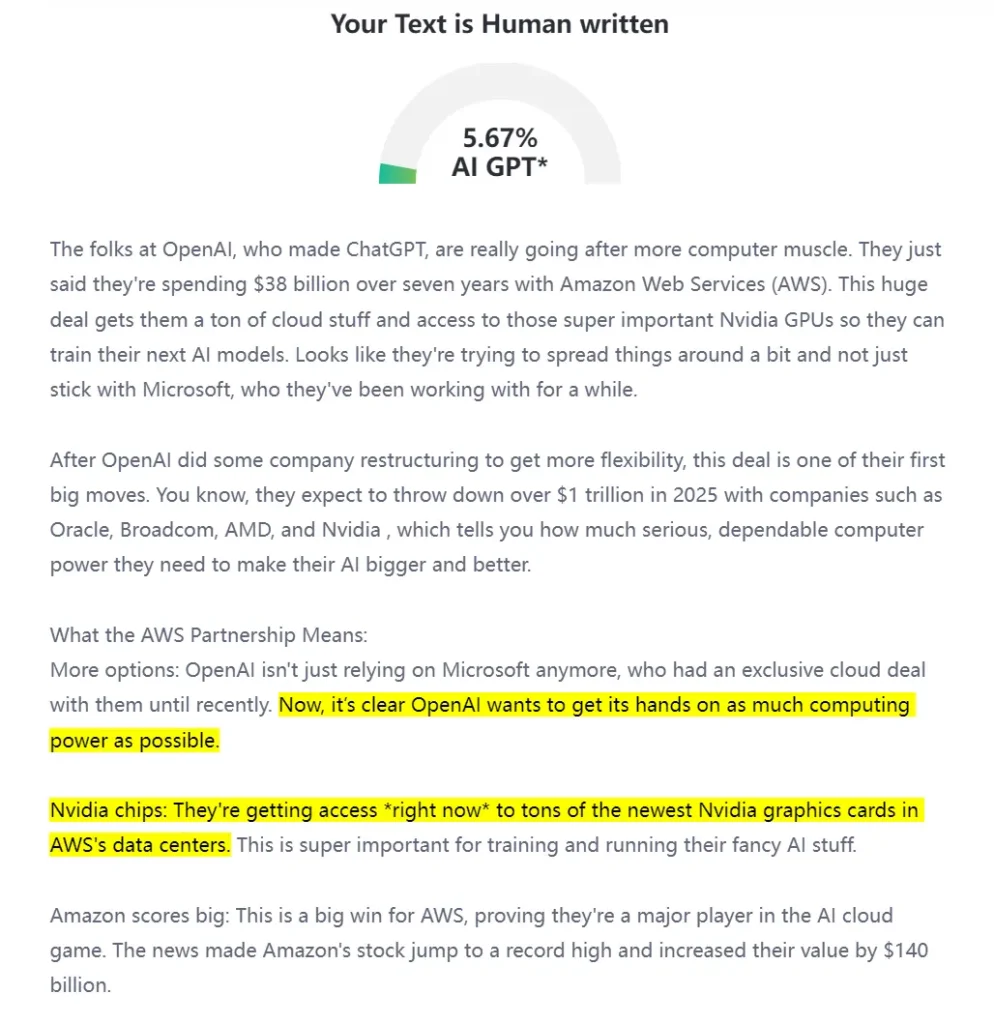

QuillBot AI Detector

AI Accuracy: 13% | Human Accuracy: 100% | Average: 56.5

QuillBot AI Detector takes an extremely conservative approach to detection. It perfectly identified human writing but caught only 13 percent of AI content.

Strengths: Zero false positives on genuine human writing. Never wrongly accused the BBC journalist of using AI. Easy to use with a clean interface design.

Weaknesses: Missed 87 percent of the AI-humanized article. The low sensitivity makes it nearly useless for detecting sophisticated AI text. Conservative settings provide false confidence about content authenticity.

Best For: Writers and editors who want to verify that their human-written work will not trigger false AI accusations. Situations where avoiding false positives matters more than catching AI content.

QuillBot protects human authors from wrongful accusations but offers minimal actual AI detection capability.

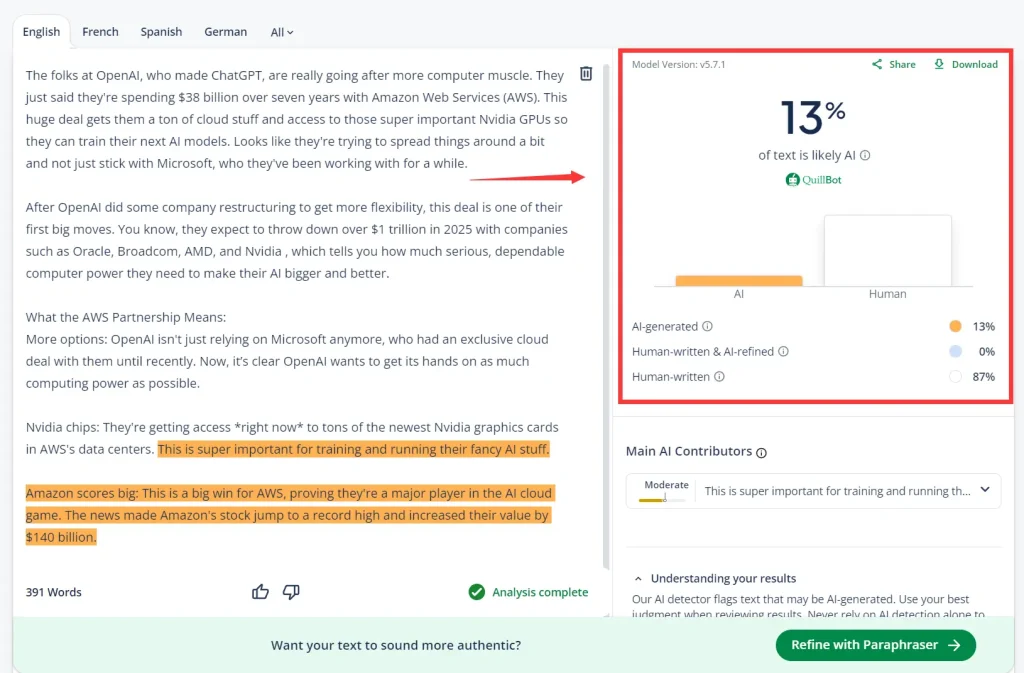

GPTinf

AI Accuracy: 1% | Human Accuracy: 100% | Average: 50.5

GPTinf demonstrated perfect human recognition but virtually no AI detection capability. It identified only one percent of the AI-humanized article as machine-generated.

Strengths: Perfect accuracy on human-written content with zero false accusations. Completely safe for professional writers worried about false positives. Simple results display.

Weaknesses: Failed to detect 99 percent of AI content in the test. Essentially non-functional as an AI detector for humanized text. The extreme conservatism makes it unreliable for verification purposes.

Best For: Professional writers who want assurance their work will not be flagged as AI. Situations where the primary concern is protecting human authors rather than detecting AI.

GPTinf functions more as a human-writing validator than an actual AI content detector.

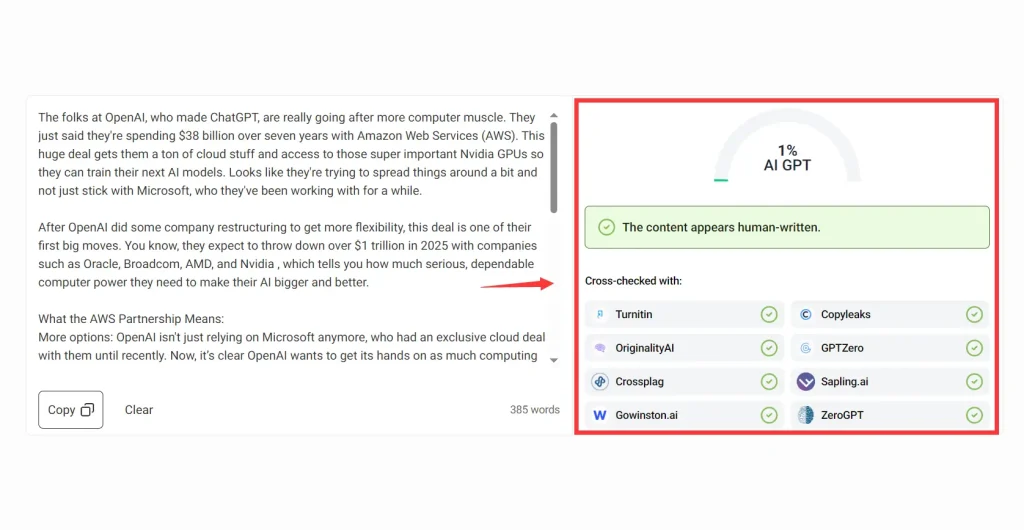

ZeroGPT

AI Accuracy: 5.67% | Human Accuracy: 94.61% | Average: 50.14

ZeroGPT ranked last overall, with an average score barely above 50 percent. It recognized most human writing but detected almost none of the AI content.

Strengths: Strong human recognition at 94.61 percent. Low false positive rate protects genuine authors. Free access with unlimited scans.

Weaknesses: Identified less than six percent of the AI-humanized article. The low AI sensitivity makes it ineffective against sophisticated humanization tools. Results provide false security about content authenticity.

Best For: Quick checks on obviously AI-generated content with no humanization processing. Users who prioritize avoiding false accusations over reliable AI detection.

ZeroGPT performs safely on human text but offers minimal protection against AI content designed to evade detection.

Key Patterns & Takeaways

This testing revealed several important patterns about the current state of AI content detection. No free tool achieves perfect accuracy across both AI and human content. Even the best performer, Originality.ai, showed minor classification errors on professional journalism. This reflects the fundamental difficulty of distinguishing sophisticated AI text from human writing.

Humanized AI text can fool most detectors. Five of the seven tools identified less than 55 percent of the AI-humanized article correctly. At least for this sample, that suggests the humanizer did what it was designed to do against most free detection tools. Only Originality.ai and GPTZero proved resistant to humanization techniques.

Some tools overreact and flag genuine writing as suspicious. GPTZero marked 12 percent of BBC journalism as potentially AI-generated. StealthWriter flagged 38 percent of the same article. These false positives create real problems when teachers or editors use these tools to judge human work.
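The miss rates and false positive rates quoted in this article are simply the complements of the two accuracy columns. The sketch below recomputes them from the results table. It assumes each tool's AI and human percentages for a given text add up to 100, which matches how the figures are quoted here; the function names `miss_rate` and `false_positive_rate` are illustrative only.

```python
# (AI accuracy %, human accuracy %) as reported in the results table.
results = {
    "Originality.ai": (100, 95),
    "GPTZero": (100, 88),
    "undetectable.ai": (47, 71),
    "StealthWriter": (53, 62),
    "QuillBot AI Detector": (13, 100),
    "GPTinf": (1, 100),
    "ZeroGPT": (5.67, 94.61),
}


def miss_rate(ai_accuracy):
    """Percent of the AI-humanized article that was NOT flagged as AI."""
    return round(100 - ai_accuracy, 2)


def false_positive_rate(human_accuracy):
    """Percent of the BBC article that was wrongly flagged as AI."""
    return round(100 - human_accuracy, 2)


# Tools that caught less than 55 percent of the AI-humanized article.
weak_on_ai = [tool for tool, (ai, _) in results.items() if ai < 55]

for tool, (ai, human) in results.items():
    print(f"{tool}: missed {miss_rate(ai)}% of AI text, "
          f"flagged {false_positive_rate(human)}% of human text")

print(f"{len(weak_on_ai)} of {len(results)} tools caught under 55% of the AI text")
```

Looking at both error types side by side makes the trade-off visible: the tools with the lowest false positive rates are also the ones with the highest miss rates.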

The results confirm that AI detection remains probabilistic rather than definitive. These tools analyze statistical patterns in text, comparing them against training data about AI and human writing styles. No detector can prove with absolute certainty whether text originated from a human or machine. The variation in results across tools, even when analyzing identical content, demonstrates this uncertainty. Combining two detectors, one strict, one forgiving, is often the most reliable method.
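One way to picture the two-detector approach is as a simple decision rule. The following Python sketch is hypothetical: the function `combined_verdict`, the 50 percent threshold, and the three verdict labels are assumptions I chose for illustration, not settings taken from any of the tools. The inputs are the "AI" percentages you read off each detector by hand.

```python
def combined_verdict(strict_ai_pct, forgiving_ai_pct, threshold=50.0):
    """Combine AI percentages from a strict and a forgiving detector.

    threshold is an assumed cut-off, not a value recommended by any tool.
    """
    strict_flags = strict_ai_pct >= threshold
    forgiving_flags = forgiving_ai_pct >= threshold

    if strict_flags and forgiving_flags:
        return "likely AI"           # both detectors agree the text is AI
    if strict_flags or forgiving_flags:
        return "needs human review"  # detectors disagree, so do not decide automatically
    return "likely human"            # neither detector flags the text


# AI-humanized article: Originality.ai reported 100% AI, QuillBot 13% AI.
print(combined_verdict(100, 13))

# BBC article: Originality.ai reported 95% human (assumed 5% AI), QuillBot 100% human.
print(combined_verdict(5, 0))
```

The design choice here is that disagreement never produces an automatic accusation. A strict detector alone can flag genuine writing, so a mismatch is routed to a person instead of being treated as proof.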

Limitations and Next Steps

It is important to acknowledge the limitations of this test. The findings are based on the analysis of only two articles. While carefully chosen to represent challenging use cases, a larger and more diverse dataset, including different writing styles, topics, and AI models, would provide a more comprehensive view of each tool’s capabilities.

Furthermore, this test was restricted to the free web versions of these detectors. Many of these services offer paid tiers with claims of higher accuracy, advanced features, and API access. These premium versions were not evaluated and may perform differently.

The results presented here are a snapshot in time, reflecting the state of these tools as of November 2025. The field of AI detection is evolving rapidly, and the performance of these tools may change as their underlying models are updated.

Coming Next: 10 Free AI Humanizers

This article examined detectors, but the other side of this technological arms race is equally important. How effective are the humanization tools that claim to make AI text undetectable? Which services actually work, and which ones waste your time?

My next article will test ten popular free AI humanizers using the same data-driven approach. I will generate AI content, process it through each humanizer, then run the results through multiple detectors to measure effectiveness. You will see exactly which tools successfully evade detection and which ones fail.

Here’s the result: I Tested 10 Free AI Humanizers: All Failed GPTZero Detection

FAQs

Q: Are longer articles easier to detect?

A: Generally, longer texts can be easier for detectors to analyze because they provide more data and patterns. However, effectiveness still depends heavily on the sophistication of the AI writing style and the sensitivity of the detection tool. A well-humanized long article can still evade detection.

Q: Should I rely on one detector?

A: No, relying on a single detector is not recommended, especially for important decisions. The data shows that performance varies widely. For a more accurate assessment, it is best to use at least two tools, ideally one with high sensitivity (like Originality.ai) and one with a low false positive rate (like QuillBot).

Q: Can AI-humanized text fool detectors?

A: Yes, humanization tools successfully fooled five of the seven detectors tested. Only Originality.ai and GPTZero correctly identified the humanized AI article, while the other tools missed between 47 and 99 percent of AI content.

Related Resources

- 10 Best Free AI Writing Tools

- 10 Best AI Image Generators

- Discover More Free AI Writing & Text Generation Tools

Changelog:

11/06/2025

- Updated screenshots.

11/05/2025

- Re-ranked the AI tools based on my real-world testing.

06/19/2024

- Replaced Corrector’s AI Content Detector with Free AI Detector

12/22/2023

- Removed AI Text Classifier since it has been deprecated by OpenAI.