Stop context-switching between your IDE and ChatGPT. In 2026, the best AI coding agents are native to your terminal.

We are witnessing a shift from simple ‘code completion’ to fully agentic workflows. These CLI AI coding agents can plan, write, and debug code, and even deploy apps, with minimal oversight. They turn a vague natural-language prompt into a working feature across multiple files.

However, not all coding agents are created equal. We filtered through the noise, analyzing GitHub activity and real-world performance, to identify the 7 CLIs worth trying today. Here are the best CLI AI coding agents to supercharge your workflow in 2026.

TL;DR – Quick Picks

| Tool | Best For | 3rd-Party LLM? |
|---|---|---|
| Claude Code | Handling large codebases with long context | ✅ |
| OpenAI Codex | Integration with GitHub and team workflows | ❌ |
| Gemini CLI | All-around terminal-based AI assistance | ❌ |
| OpenCode | A provider-agnostic, terminal-first experience | ✅ |
| Qwen Code | Multimodal analysis with vision model support | ✅ |
| Plandex | Complex, long-running coding tasks | ✅ |
| Crush | A highly customizable and visually appealing terminal experience | ✅ |

What Are Command-Line AI Coding Agents?

Most developers are already familiar with AI assistants inside editors or browsers. CLI coding agents take that same intelligence and bring it straight to the terminal.

Instead of typing isolated commands, you can ask these agents to understand your project, plan tasks, and write or refactor code without ever leaving the shell. They use your local environment, access real files, and interact directly with Git, Docker, or any build tool you rely on.

A good CLI coding agent acts like an extra developer who works quietly beside you. It can generate functions, fix broken code, explain logic, or even manage multiple repositories. The key difference is that everything happens within your control: the agent reads and edits files on your own machine, and your code is shared only with the model provider you configure (or with no one, if you run a local model).

In short, CLI coding agents merge the power of large language models with the practicality of command-line workflows. They’re built for developers who prefer precision, transparency, and the speed of working without a graphical interface.


How We Ranked These AI Coding Agents

With so many open-source CLI AI coding agents released in the past year, we tested and ranked them using four main criteria:

- GitHub activity – Frequency of commits, open issues, and community involvement.

- Ease of setup – Installation time, dependencies, and documentation clarity.

- Agent capability – Ability to handle multi-file edits, context awareness, and reliability.

- Community trust – Response time, version stability, and user adoption.

Each agent included here is actively maintained and usable directly from your terminal, and all of them except Claude Code are open source. Our focus was on real-world usability, not just raw model performance.

If you’re interested in exploring similar projects, check out our AI Coding Agents category for more open-source developer tools.

The 7 Best AI Coding Agents on GitHub

Here are our top picks for the CLI AI coding agents you should be watching and using in 2026.

Claude Code

GitHub Stars: ~40,800

Claude Code is an AI-powered agentic coding tool that runs in the terminal to help developers accelerate workflows by automating tasks like writing code, debugging, and handling Git commands.

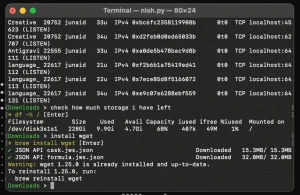

Install & Usage:

```bash
npm install -g @anthropic-ai/claude-code

# Navigate to your project directory and run:
claude
```

Key Features:

- Natural language commands: Interact with the tool using simple commands to perform complex actions.

- Codebase understanding: Explains the architecture and logic of a project in natural language.

- Automated tasks: Executes routine tasks like testing, linting, and fixing bugs automatically.

- Git integration: Simplifies Git operations, such as creating commits, PRs, and resolving conflicts.

- Workflow automation: Automates entire workflows from ticket assignment to deployment.

- Claude Skills: A feature that allows users to teach the AI custom workflows, which it can then automate in the future.

- VS Code Extension: Offers a visual interface through an extension for users who find the terminal overwhelming.

- Security: Operates within the terminal, connecting directly to the API for a more secure workflow.

- Data Privacy: Implements safeguards to protect user data, including limited retention periods for sensitive information and clear policies against using feedback for model training.
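The automated tasks above usually run inside the interactive session, but Claude Code also has a print mode (`claude -p`) that answers a single prompt and exits, which makes it usable from scripts. A minimal sketch, assuming `claude` is installed and authenticated; the helper name `explain_failure` and the log file it reads are hypothetical:

```bash
#!/usr/bin/env bash
# Minimal sketch: call Claude Code from a script instead of the REPL.
# Assumes `claude` is on PATH and already authenticated.
# `explain_failure` is a hypothetical helper, not part of Claude Code.

explain_failure() {
  local log_file="$1"
  if [[ ! -f "$log_file" ]]; then
    echo "no such log file: $log_file" >&2
    return 1
  fi
  # -p (print mode) sends one prompt, prints the answer, and exits;
  # the log is fed on stdin as extra context for the prompt.
  claude -p "Explain the most likely cause of this test failure." < "$log_file"
}
```

For example, `npm test > test.log 2>&1 || explain_failure test.log` asks for an explanation only when the test run fails.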

Pros:

- Industry-leading performance on many coding benchmarks.

- Ideal for developers who need an agent with a deep understanding of their code.

Cons:

- Not open source, unlike the other agents on this list.

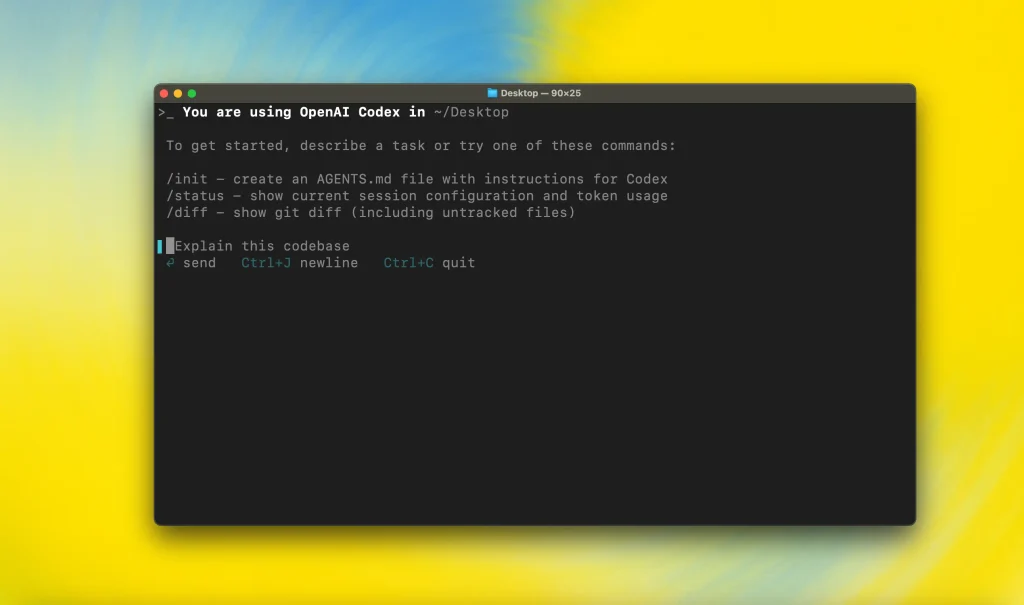

OpenAI Codex

GitHub Stars: 49,300+

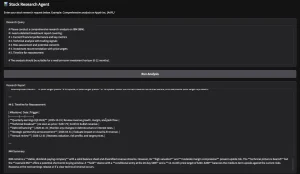

OpenAI Codex is an AI agent that can autonomously write, test, and debug code by integrating with a developer’s existing tools and workflows.

Install & Usage:

```bash
npm i -g @openai/codex

# Navigate to your project directory and run:
codex
```

Key Features:

- Code generation: Quickly write functions, scripts, and modules.

- Bug fixing: Automate the identification and resolution of code errors.

- Testing and debugging: Run tests, linters, and other checks to ensure code quality.

- Code refactoring: Simplify and optimize existing code for better performance and readability.

- Integration: Plugs into developer workflows via tools like Slack and IDE extensions for VS Code.

- Full repository context: Clones a repository into a secure sandbox to understand the entire codebase and dependencies.

- Command execution: Can run commands, including tests and linters, to validate its own work.

- GitHub integration: Can automatically review pull requests and create new ones.
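Codex can also run a task non-interactively through its `exec` subcommand, which is how you would wire it into scripts or CI. A minimal sketch, assuming the `codex` CLI is installed and signed in; the helper name `review_branch` and the default base branch `main` are hypothetical. The prompt only asks for a review, so no file edits are needed; check `codex exec --help` for the sandbox and approval options if you want it to change files.

```bash
#!/usr/bin/env bash
# Minimal sketch: ask Codex for a read-only review of the current branch.
# Assumes `codex` is installed and authenticated.
# `review_branch` is a hypothetical helper; "main" is an assumed default base.

review_branch() {
  local base="${1:-main}"
  local prompt
  prompt="Review the changes between $base and HEAD in this repository. "
  prompt+="List likely bugs and missing tests. Do not modify any files."
  # `codex exec` runs a single task without opening the interactive TUI.
  codex exec "$prompt"
}
```

Running `review_branch main > review.md` leaves the review in a file you can attach to a pull request.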

Pros:

- Increases productivity: Speeds up development by automating repetitive and time-consuming tasks like writing boilerplate code.

- Reduces context switching: Allows developers to stay focused by offloading background work and handling tasks like bug fixes and documentation drafting.

- Streamlines workflow: Turns conversational requests (e.g., in Slack) directly into code changes with pull requests.

- Validates its own work: Uses built-in testing and command execution to check for issues in the code it produces.

Cons:

- Limited by complexity: Can still struggle with highly complex, multi-step problems.

- Needs oversight: Not a complete replacement for human developers; its code requires human review to ensure quality and catch subtle errors.

- Potential for poor quality: Code quality can sometimes be inconsistent, and it may have issues with error handling.

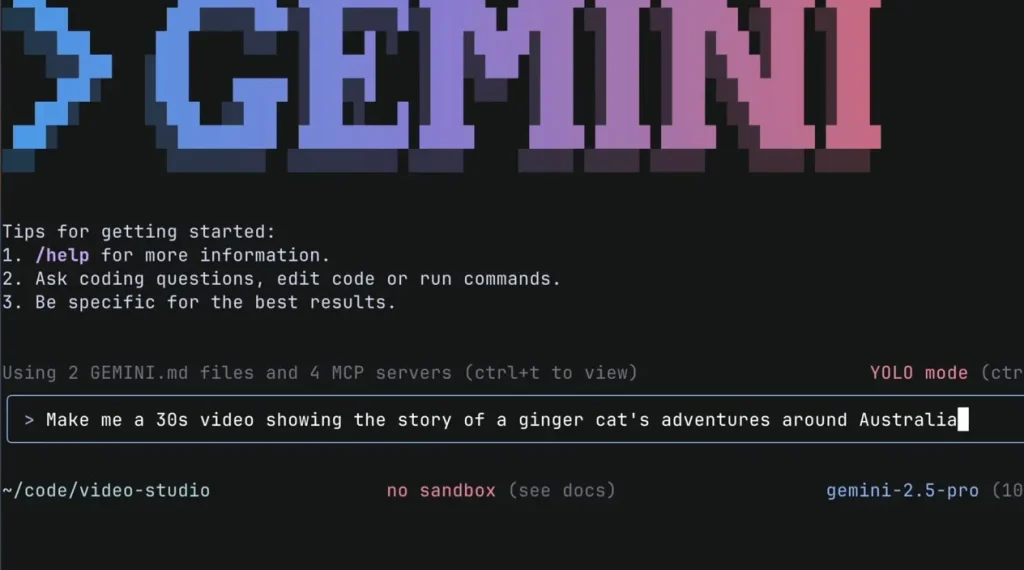

Gemini CLI

GitHub Stars: 81,000+

Gemini CLI is an open-source tool that brings the power of Google’s Gemini models directly into your terminal.

Install & Usage:

```bash
npm install -g @google/gemini-cli

# Navigate to your project directory and run:
gemini
```

Read More: Open-source Alternative to Claude Code – Google Gemini CLI

Key Features:

- Large Codebase Analysis: Query and edit codebases that exceed Gemini’s 1M token context window, handling enterprise-scale projects with ease.

- Multimodal App Generation: Generate new applications from PDFs or sketches using Gemini’s advanced multimodal capabilities.

- Automated Operational Tasks: Handle complex operations like querying pull requests, managing git rebases, and analyzing commit histories.

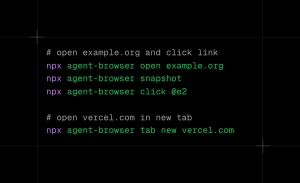

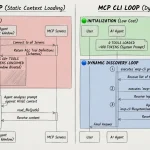

- MCP Server Integration: Connect with Model Context Protocol servers and extensions, including media generation tools like Imagen, Veo, and Lyria.

- Built-in Google Search: Ground your queries with real-time web search capabilities integrated directly into the AI responses.

- System Tool Integration: Work with local file systems, manipulate images, organize documents, and perform system-level operations through natural language commands.

- Customizable Prompts: Tailor AI instructions and system prompts to match your specific workflow requirements.

- Non-interactive Automation: Invoke Gemini CLI within scripts for automated task execution and workflow integration.
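For non-interactive use, Gemini CLI takes a prompt through `-p`/`--prompt` and also reads piped stdin, so it can be looped over files in a script. A minimal sketch, assuming `gemini` is installed and authenticated; the helper name `summarize_files` and the `<file>.summary.md` output naming are hypothetical:

```bash
#!/usr/bin/env bash
# Minimal sketch: batch-summarize files with Gemini CLI.
# Assumes `gemini` is on PATH and authenticated; -p runs one prompt and exits.
# `summarize_files` and the "<file>.summary.md" naming are hypothetical.

summarize_files() {
  local f
  for f in "$@"; do
    [[ -f "$f" ]] || { echo "skipping missing file: $f" >&2; continue; }
    # The file's contents are piped in as context for the prompt.
    gemini -p "Summarize what this file does in two sentences." < "$f" > "$f.summary.md"
    echo "wrote $f.summary.md"
  done
}
```

Running `summarize_files src/*.py` writes one summary per file. Each file is a separate request, so keep the free tier's per-minute limit in mind for large batches.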

Pros:

- Exceptional Usage Limits: Signing in with a personal Google account unlocks a free tier of 60 model requests per minute and 1,000 requests per day, which Google describes as the industry’s largest allowance for individual developers.

- Native Terminal Integration: Works directly within your existing terminal environment without requiring browser interfaces or separate applications, maintaining the efficiency that command-line users prefer.

- Open Source Transparency: The tool is fully open source under the Apache 2.0 license, allowing developers to inspect the code, verify its security implications, and contribute improvements to the project.

- Massive Context Understanding: Handles codebases that exceed Gemini’s 1M token context window, making it suitable for large enterprise projects and complex system analysis.

- Multimodal Capabilities: Can generate applications from PDFs or sketches using Gemini’s multimodal features, bridging the gap between visual concepts and functional code.

- Extensible Architecture: Built-in support for MCP servers and extensions allows users to connect additional tools and capabilities based on their specific needs.

Cons:

- Preview Status Limitations: Currently in preview, which means some features may be unstable or subject to change as Google continues development.

- Node.js Dependency: Requires Node.js version 18 or higher, which may necessitate system updates for users running older JavaScript runtime environments.

- Internet Connection Required: Since the tool connects to Google’s Gemini API, it requires a stable internet connection for all AI interactions, limiting offline usage scenarios.

- Google Account Dependency: The free tier requires signing in with a personal Google account, which may not align with all organizational security policies or personal privacy preferences. Teams can authenticate with a Gemini API key or Vertex AI instead.

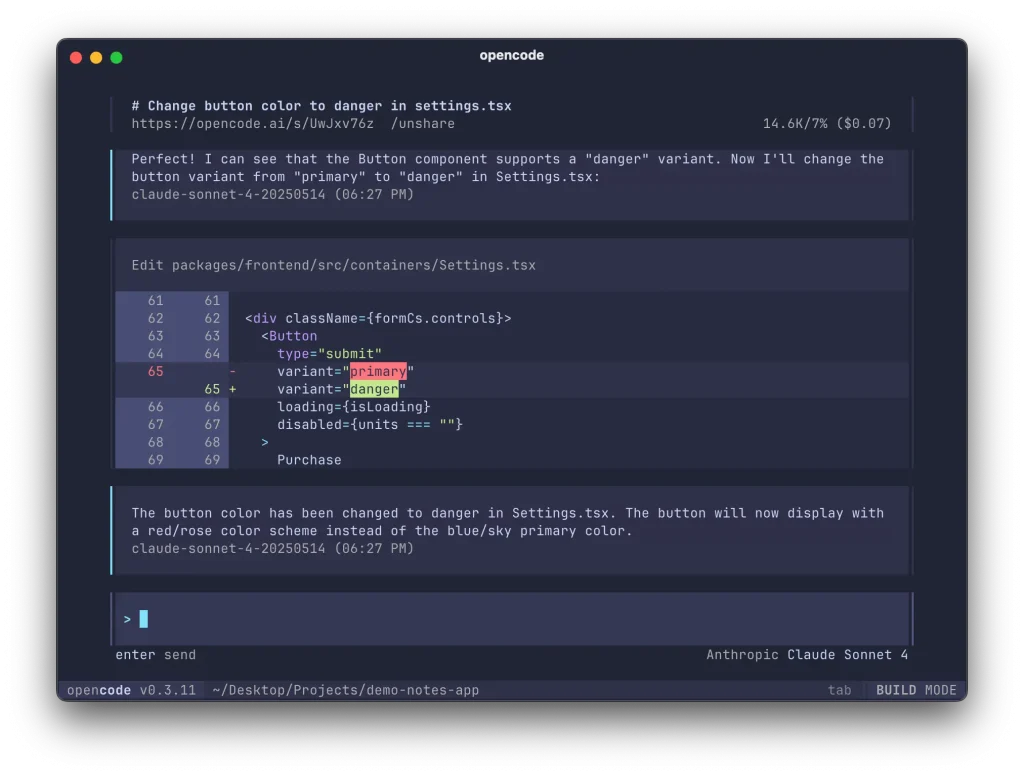

OpenCode

GitHub Stars: 29,700+

OpenCode is an open-source, AI-driven command-line tool that acts as a coding assistant, offering features like multi-session parallel workflows, AI integration with the terminal, and support for numerous language models.

Install & Usage:

```bash
# YOLO: pipe the install script straight into bash
curl -fsSL https://opencode.ai/install | bash

# Package managers
npm i -g opencode-ai@latest   # or bun/pnpm/yarn
scoop bucket add extras; scoop install extras/opencode   # Windows
choco install opencode   # Windows
brew install sst/tap/opencode   # macOS and Linux
paru -S opencode-bin   # Arch Linux
```

Key Features:

- AI-driven coding: Provides AI-powered assistance directly in the terminal.

- Multi-session support: Allows multiple AI agents to run in parallel on the same project without conflict.

- LSP-enabled architecture: Automatically detects and configures the best tools for each language based on the Language Server Protocol.

- Shareable sessions: Generates shareable links for sessions, which is useful for debugging and collaboration.

- Deep project context: Scans project files to understand dependencies and maintain context for complex operations like multi-file refactoring.

- Wide LLM support: Integrates with a large number of LLM providers, including local models, Claude, and OpenAI.

- Version control integration: Integrates with tools like Git, allowing developers to preview and manage suggested AI changes before committing them.

- Auto-compacting: Automatically summarizes the conversation to stay within the model’s context window limit.
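Because OpenCode treats the model as a setting rather than a fixed dependency, the same question can be sent to several providers from one script. A minimal sketch, assuming the non-interactive `opencode run` subcommand and a `--model provider/model` flag as described in OpenCode's CLI documentation (verify with `opencode run --help` for your version); the helper name `ask_models` and any model IDs you pass in are placeholders:

```bash
#!/usr/bin/env bash
# Minimal sketch: send the same question to several models via OpenCode.
# Assumes `opencode run` (non-interactive) and its `--model provider/model`
# flag; the model IDs passed in are placeholders you would replace.
# `ask_models` is a hypothetical helper, not part of OpenCode.

ask_models() {
  local question="$1"; shift
  local model
  for model in "$@"; do
    echo "=== $model ==="
    opencode run --model "$model" "$question"
  done
}
```

Under this assumption, swapping a hosted model for a local one changes only the arguments you pass, not the script itself.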

Pros:

- High flexibility: Supports a vast number of LLM providers, including local options, giving users control over their AI experience.

- Enhanced privacy: Running local models keeps your workflow private, which is crucial for companies with strict data policies.

- Improved collaboration: Shareable sessions and an open-source nature facilitate collaboration and learning among developers.

- Increased efficiency: Streamlines complex tasks like refactoring, project setup, and dependency installation through AI and deep context awareness.

- Transparency: AI-suggested changes can be previewed and managed like a pull request, keeping developers in control.

Cons:

- Potential complexity: While flexible, the sheer number of options and deep customization might be overwhelming for new users.

- Learning curve: Users may need time to learn how to best utilize its advanced features and integrate it fully into their workflow.

- Terminal-centric: While it has VS Code integration, its core is a terminal-based TUI, which might be less intuitive than a traditional IDE for some tasks or users.

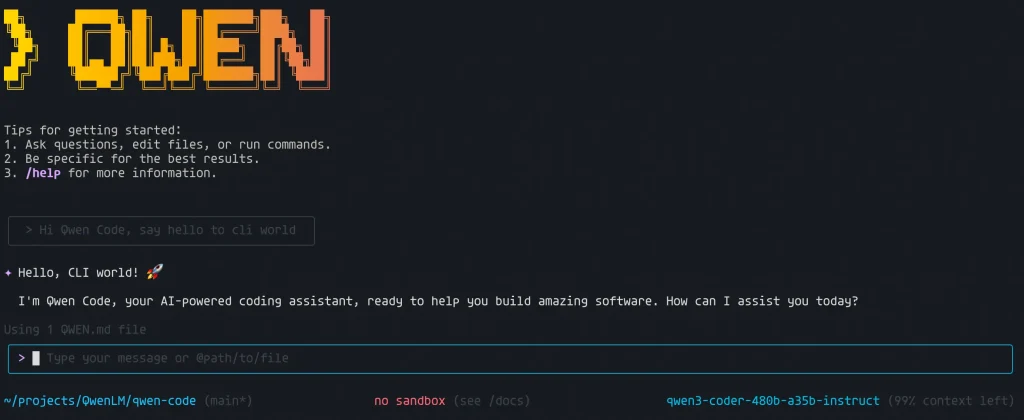

Qwen Code

GitHub Stars: 14,900+

Qwen Code is a command-line AI workflow tool adapted from Gemini CLI and optimized for Qwen3-Coder models. It enhances your development workflow with advanced code understanding, automated tasks, and intelligent assistance.

Install & Usage:

```bash
npm install -g @qwen-code/qwen-code@latest

# Navigate to your project directory and run:
qwen
```

Key Features:

- Agentic capabilities: Qwen Code can handle complex, multi-turn software engineering tasks that require planning, tool use, and feedback.

- Long context window: Qwen3-Coder models natively support contexts of 256K tokens, extendable to about 1M, so they can process and understand large codebases.

- Code understanding and editing: It can query and edit large codebases, and its parser is optimized for Qwen-Coder models.

- Multilingual support: It supports a vast number of coding languages, with some models supporting over 92 languages.

- Workflow automation: Qwen Code can automate operational tasks like handling pull requests and complex rebases.

- Multimodal capabilities: Some versions include support for vision models, allowing them to analyze images in the input.

- Efficiency: The use of a Mixture-of-Experts (MoE) architecture in some Qwen models improves efficiency by only activating a subset of the model’s parameters for a given task.

Pros:

- Open-source and flexible: As an open-source model, it offers flexibility and can be integrated into real-world workflows without vendor lock-in.

- Cost-effective: It is often more cost-effective than proprietary alternatives.

- Strong performance: It demonstrates strong performance in coding benchmarks and real-world tasks, competing with proprietary tools like Claude Code.

- Developer-friendly: It is designed to be a practical and reliable AI coding assistant.

Cons:

- Complex reasoning limitations: Its ability to reason about complex, multi-step problems is not yet at the level of frontier models like GPT-5 or Gemini 2.5 Pro.

- Requires iteration: Users may need to provide more explicit guidance and iterate on prompts to fix issues, as the model can sometimes struggle with self-correction or understanding implicit dependencies.

- Higher token usage: It may issue multiple API calls per cycle, potentially leading to higher token usage, although this is an area of active optimization.

Plandex

GitHub Stars: 14,600+

Plandex is an open-source, terminal-based AI coding agent designed to plan and execute large, multi-step software development tasks across many files. It is built to understand and manage large, complex, real-world codebases with multi-million token context support.

Install & Usage:

```bash
curl -sL https://plandex.ai/install.sh | bash

# cd into your project directory
cd your-project-dir

# Initialize a Git repo (if the project isn't one already)
git init

# Start the Plandex REPL
plandex
```

Key Features:

- Massive Context Management: Plandex can index directories with 20M+ tokens using Tree-sitter project maps and use an effective context window of up to 2M tokens to load only relevant information for each step.

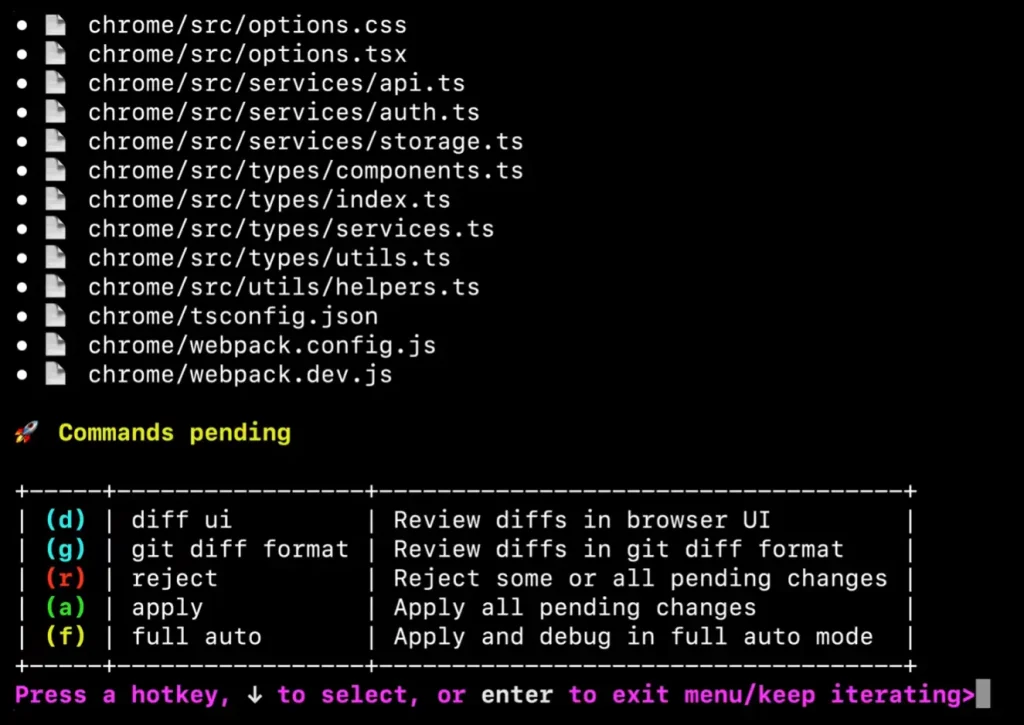

- Configurable Autonomy: Developers can choose from various modes, ranging from a fully manual, step-by-step review process to a fully autonomous mode where Plandex plans, executes, debugs, and commits changes on its own.

- Diff Review Sandbox: All AI-generated changes are first applied to an isolated, version-controlled sandbox. This allows developers to review the diffs across many files, test the changes, and roll back if necessary before applying them to the actual project files.

- Automated Debugging and Command Execution: Plandex can execute terminal commands (like running tests, linters, or builds) and automatically debug issues if they fail.

- Multi-Model Support: It works with various AI model providers (OpenAI, Anthropic, Google, and open-source models) and can combine different models based on the task’s complexity, cost, and speed.

- Project-Aware Chat Mode: A chat interface allows developers to discuss ideas, ask questions about the codebase, and flesh out a plan before implementation.

- Easy Installation: It offers a simple one-line CLI installation with no dependencies and supports multiple deployment options, including a local mode, self-hosted server, and cloud access.
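The diff review sandbox amounts to a simple gate: inspect the pending changes, then decide whether they reach your files. A minimal sketch, assuming the current plan already has pending changes and that the `plandex diff` and `plandex apply` subcommands show and apply them (check `plandex help` for the exact behavior and flags in your version); `review_then_apply` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Minimal sketch: gate Plandex's pending changes behind an explicit "y".
# Assumes `plandex` is installed and the current plan has pending changes.
# `review_then_apply` is a hypothetical helper, not a Plandex command.

review_then_apply() {
  local answer
  # Show the sandboxed diff; stop if Plandex reports an error.
  plandex diff || return 1
  read -r -p "Apply these changes to your project? [y/N] " answer
  case "$answer" in
    [yY] | [yY][eE][sS]) plandex apply ;;
    *) echo "Changes left pending in the sandbox." ;;
  esac
}
```

Any answer other than "y" or "yes" leaves the changes in the sandbox, so under these assumptions nothing touches your working files until you opt in.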

Pros:

- Handles Large, Complex Projects: Plandex excels where other AI coding assistants often fail by effectively managing massive codebases and multi-file changes.

- Safety and Control: The sandbox environment and configurable autonomy levels give developers fine-grained control and prevent unintended changes from breaking the project.

- Flexibility in Models and Hosting: Users can choose their preferred AI models and deployment methods (cloud, local, self-hosted), ensuring data privacy and cost control.

- Autonomous Capabilities: The tool can autonomously plan, execute, and debug tasks, significantly enhancing productivity for routine or complex tasks.

- Strong Git Integration: Includes features like version control for plans, branching for exploring alternatives, and automated commit message generation.

Cons:

- Requires Prompt Engineering: Like any AI tool, the quality of the output can depend heavily on the prompt provided by the user.

- Potential Performance Issues: Self-hosted or Dockerized instances may run slower, especially on non-native architectures like running x86 Docker on an Arm Mac.

- Proprietary for Commercial Use: While the core is open-source, the cloud service and certain functionalities might require a license or payment for commercial use.

Crush

GitHub Stars: 14,400+

Crush is an open-source, terminal-based AI coding agent that uses a “glamorous” Text User Interface (TUI) to help developers with tasks like writing code, debugging, understanding codebases, and executing commands, all from within the terminal. It is designed to be highly configurable and integrate with a developer’s existing workflow and tools.

Key Features:

- Glamorous TUI (Terminal User Interface): Uses Charm’s libraries (Bubble Tea, Lip Gloss) to provide a visually appealing, split-pane interface in the terminal that separates conversation from code diffs.

- Multi-Model Support: Works with various LLM providers (OpenAI, Anthropic, Google, Groq) and supports switching between models, including local ones.

- LSP (Language Server Protocol) Integration: Leverages LSPs for deep code understanding, providing the AI with knowledge of project structure, functions, types, and dependencies to generate more accurate suggestions.

- Automated Tool Execution: The agent can run system commands (Git, Docker, tests, builds) with user permission, allowing it to perform tasks autonomously.

- Extensibility with MCPs: The Model Context Protocol (MCP) enables plugging in external data sources like documentation or internal APIs to provide more context to the AI.

- Session Management: Maintains context and history on a per-project basis, allowing for seamless workflow interruptions and resumptions.

- Cross-Platform: Supports macOS, Linux, Windows (PowerShell/WSL), and BSD.

Pros:

- Deep Code Awareness: LSP integration allows Crush to understand project context and structure beyond just plain text, improving the relevance and quality of AI-generated code.

- Flexibility and Control: Users have control over which LLM models to use, tool execution permissions, and the level of integration, ensuring privacy and customization.

- Performance: Built with Go, Crush is noted for its speed and responsiveness within the terminal environment.

Cons:

- BYO-API Cost Model: Users bring their own API keys and are responsible for managing the resulting costs, which can be unpredictable or expensive.

- No Built-in IDE/Editor: While it works with existing editors, it is purely a terminal tool, which might be a limitation for developers who prefer a fully integrated graphical IDE experience.

Which AI Coding Agent is Right for You?

If you’re deeply embedded in Google’s ecosystem and want tight integration with their services, Gemini CLI is your best bet.

For teams that need enterprise-grade reliability and are willing to pay for it, Claude Code delivers consistent results. Terminal power users who want maximum flexibility should look at OpenCode.

If you’re working on massive, complex codebases where context management is critical, Plandex was built for you. For general-purpose automation and exploratory coding, OpenAI Codex offers impressive versatility.

What’s your experience with AI coding agents? Have you tried any of these CLI tools? Drop a comment below and let us know what’s working for your team.

Further Reading

If you found this list helpful, you might enjoy exploring more from our developer-focused collections:

- AI Coding Agents: Discover more open-source command-line AI coding agents.

- Free AI Tools for Developers: Discover the best free AI tools for developers.

- Vibe Coding: Discover free & open-source vibe coding AI tools.

FAQs

Q: Can I use these agents with my company’s private code?

A: Yes, and that’s one of the main advantages of open-source agents. Paired with a local or self-hosted model, they can run entirely on your own infrastructure, which means your code never leaves your network. For teams with strict security requirements, this is often the only acceptable approach. Just make sure you review the specific agent’s data handling practices and configure it properly for your environment.

Q: Do I need to be an expert in AI to use these tools?

A: Not at all. Most of these agents are designed for regular developers who just want to code faster. You install them, point them at your API key or local model, and start giving them tasks. The complexity is hidden behind simple command-line interfaces. That said, understanding how to write clear prompts and when to trust (or not trust) AI-generated code is something you’ll learn through use.

Q: How do these compare to GitHub Copilot?

A: GitHub Copilot is fantastic for inline code suggestions while you type. It’s reactive. These command-line agents are proactive. You give them a goal, and they work toward it autonomously. Think of Copilot as an autocomplete system that’s really good at finishing your thoughts. Think of these agents as junior developers you can delegate entire tasks to. Many developers use both, relying on Copilot for day-to-day coding and agents for larger refactoring jobs or boilerplate generation.

Q: Are there risks to letting AI write my code?

A: Absolutely. AI-generated code can have bugs, security vulnerabilities, and logic errors. You should always review what these agents produce before merging it into your codebase. Treat AI output the way you’d treat code from a junior developer who’s smart but inexperienced. It can be a huge time-saver, but it’s not a replacement for human judgment. Run tests, do code reviews, and don’t blindly trust the AI.

Last Updated: Dec 04, 2025