Okay, let’s talk about the Model Context Protocol, or MCP. It’s become a pretty big deal in the AI world, especially for how large language models (LLMs) connect with outside data and tools.

Anthropic rolled it out in November 2024 as an open standard. Think of it like a universal way for apps to give context to LLMs so they can give better, more on-point answers.

People often call it the “USB-C port for AI apps” because it aims to be that one standard connector for AI models to safely tap into different data sources and tools without needing a bunch of custom setups.

This post will look at why MCP was started, how it developed, what it does now, and where it might be headed.

Why MCP Was Created

The main reason for creating MCP was simple: LLMs are great at reasoning, but they often can’t access the real-time data they need to give truly helpful answers.

Before MCP, connecting AI models with outside tools meant building a custom solution for each integration. This was a huge headache: time-consuming, complex, and nearly impossible to scale.

Anthropic wanted to solve what they called the “M×N problem”: connecting M different LLMs with N different tools can take up to M×N separate custom integrations.
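To put rough numbers on that: with bespoke connectors, every model-tool pair needs its own glue code, while with a shared protocol each model and each tool only has to implement the protocol once. Here’s a minimal sketch of the arithmetic (the function names are made up for illustration and aren’t part of MCP):

```python
def custom_integrations(num_models: int, num_tools: int) -> int:
    """Connectors needed when every model-tool pair gets bespoke glue code."""
    return num_models * num_tools


def shared_protocol_integrations(num_models: int, num_tools: int) -> int:
    """Implementations needed when each side speaks one common protocol."""
    return num_models + num_tools


if __name__ == "__main__":
    m, n = 5, 20
    print(custom_integrations(m, n))           # 100
    print(shared_protocol_integrations(m, n))  # 25
```

The gap widens quickly as either side grows, which is the scaling headache described above.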

By creating an open standard, they hoped to make development easier, reduce the need for proprietary connections, and build a better AI ecosystem where AI assistants could easily get the data they need.

How MCP Developed

Anthropic released MCP in November 2024. While developers liked it right away, it really took off around March 2025 when popular AI coding tools and agents like Cursor, Windsurf, Cline, and Goose started supporting it. As more apps adopted the protocol, server-side integration became increasingly important.

Key developments included:

- The open-sourcing of the protocol by Anthropic

- The release of SDKs for Python, TypeScript, Java, Kotlin, and C#

- The creation of an open-source repository for MCP servers

- A major update in March 2025 that added OAuth 2.1 authorization and replaced the HTTP+SSE transport with a more flexible Streamable HTTP transport

The community has been actively involved too. Microsoft maintains the C# SDK, and the Spring AI team at VMware Tanzu develops the Java SDK. This quick adoption shows just how much the field needed a standard protocol for AI integrations.

How MCP Works

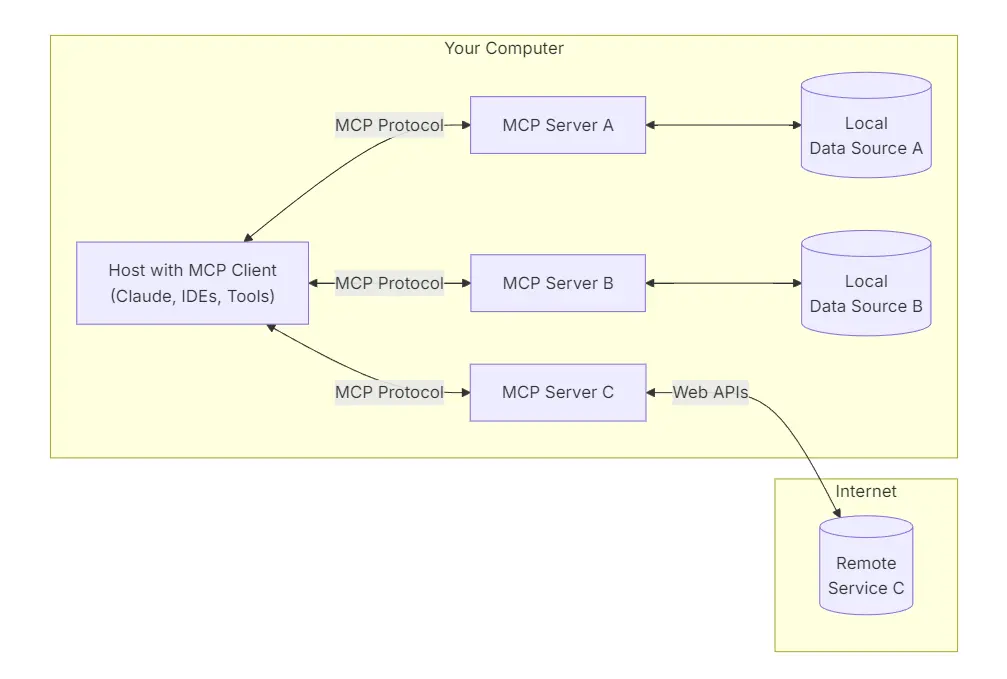

MCP uses a client-host-server setup. A host app (like Claude Desktop or an IDE) can run multiple client instances, each with a one-to-one connection to a server. Messages between client and server follow JSON-RPC 2.0, so every exchange is a request, a response, or a notification.
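Here’s a rough sketch of what those JSON-RPC message types look like as plain Python dictionaries. The helper names (`request`, `notification`, `response`, `kind`) are illustrative assumptions, not an SDK API; `tools/list` and `notifications/initialized` are method names from the MCP spec.

```python
import itertools
from typing import Any, Dict, Optional

# Simple counter for request ids (illustrative; real clients manage ids per connection).
_next_id = itertools.count(1)


def request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """A request carries an id, so the receiver must answer it."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return message


def notification(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """A notification has no id and expects no reply."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        message["params"] = params
    return message


def response(request_id: int, result: Any) -> Dict[str, Any]:
    """A successful response echoes the id of the request it answers."""
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


def kind(message: Dict[str, Any]) -> str:
    """Classify a message by which JSON-RPC fields it carries."""
    if "method" in message:
        return "request" if "id" in message else "notification"
    return "response"
```

For example, a client might send `request("tools/list")` and get back `response(that_id, {"tools": [...]})`, while `notification("notifications/initialized")` needs no answer at all.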

MCP servers offer three main things:

- Resources: structured data for LLMs to use as context

- Prompts: instruction templates to guide LLMs

- Tools: functions that LLMs can run to perform actions or get information
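To make the three primitives a bit more concrete, here’s a toy in-memory dispatcher. It’s a sketch under stated assumptions, not a real MCP server: the registries and sample entries are hypothetical, and the result shapes are simplified versions of what the spec defines for `resources/read`, `prompts/get`, and `tools/call`.

```python
from typing import Any, Callable, Dict

# Hypothetical in-memory registries, one per server primitive.
RESOURCES: Dict[str, str] = {"notes://today": "Ship the MCP blog post."}
PROMPTS: Dict[str, str] = {"summarize": "Summarize the following text:\n{text}"}
TOOLS: Dict[str, Callable[..., Any]] = {"add": lambda a, b: a + b}


def handle(method: str, params: Dict[str, Any]) -> Dict[str, Any]:
    """Route a request to the matching primitive (result shapes simplified)."""
    if method == "resources/read":
        # Resources: data the LLM can use as context.
        uri = params["uri"]
        return {"contents": [{"uri": uri, "text": RESOURCES[uri]}]}
    if method == "prompts/get":
        # Prompts: templates filled in with caller-supplied arguments.
        template = PROMPTS[params["name"]]
        text = template.format(**params.get("arguments", {}))
        return {"messages": [{"role": "user", "content": {"type": "text", "text": text}}]}
    if method == "tools/call":
        # Tools: functions the LLM can ask the server to run.
        result = TOOLS[params["name"]](**params.get("arguments", {}))
        return {"content": [{"type": "text", "text": str(result)}]}
    raise ValueError(f"unknown method: {method}")
```

Calling `handle("tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}})` returns a text content item containing `"5"`. A real server would typically be built on one of the official SDKs rather than hand-rolled like this.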

Clients provide Roots (entry points to the file system or environment) and Sampling (allowing servers to request LLM completions from the client).

Communication happens over different transport layers: stdio for servers that run locally as a subprocess of the host, and HTTP-based transports for remote or distributed setups (originally HTTP with Server-Sent Events, now Streamable HTTP).
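For the stdio case, messages travel as newline-delimited JSON, one message per line. Here’s a small sketch of that framing using an in-memory stream in place of a real subprocess pipe. The helper names are assumptions for illustration.

```python
import io
import json
from typing import Any, Dict, Iterator, TextIO


def write_message(stream: TextIO, message: Dict[str, Any]) -> None:
    """Serialize one message per line; json.dumps escapes embedded newlines."""
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")


def read_messages(stream: TextIO) -> Iterator[Dict[str, Any]]:
    """Yield one decoded message for each non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


if __name__ == "__main__":
    pipe = io.StringIO()  # stand-in for a server process's stdin/stdout
    write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
    pipe.seek(0)
    for msg in read_messages(pipe):
        print(msg["method"])
```

In a real setup the host would launch the server as a child process and use its stdin and stdout in place of the `StringIO` buffer.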

When connecting, there’s an Initialization Phase for negotiating capabilities, an Operation Phase for normal communication, and a Shutdown Phase for clean termination.
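The three phases can be pictured as a small state machine. The sketch below is a simplification: the `Session` class is hypothetical, and mapping a method like `tools/list` to the `tools` capability by its prefix is an assumption made for illustration. The idea it shows is that a client should only use a feature after initialization, and only if the server declared support for it.

```python
from enum import Enum
from typing import Iterable, Set


class Phase(Enum):
    INITIALIZATION = "initialization"
    OPERATION = "operation"
    SHUTDOWN = "shutdown"


class Session:
    """Tracks the connection phase and the capabilities each side declared."""

    def __init__(self, client_capabilities: Iterable[str]) -> None:
        self.phase = Phase.INITIALIZATION
        self.client_capabilities: Set[str] = set(client_capabilities)
        self.server_capabilities: Set[str] = set()

    def complete_initialization(self, server_capabilities: Iterable[str]) -> None:
        """Record what the server supports and move to normal operation."""
        if self.phase is not Phase.INITIALIZATION:
            raise RuntimeError("session is already initialized or shut down")
        self.server_capabilities = set(server_capabilities)
        self.phase = Phase.OPERATION

    def can_call(self, method: str) -> bool:
        """Allow a client request only during operation and only for declared features."""
        if self.phase is not Phase.OPERATION:
            return False
        feature = method.split("/", 1)[0]  # e.g. "tools/list" -> "tools"
        return feature in self.server_capabilities

    def shutdown(self) -> None:
        """End the session; no further requests are allowed."""
        self.phase = Phase.SHUTDOWN
```

For instance, after `complete_initialization({"tools", "resources"})`, `can_call("tools/list")` is allowed but `can_call("prompts/get")` is not, and nothing is allowed once `shutdown()` has run.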

Right now, MCP is being used in:

- Development environments, through IDEs and coding tools like Cursor, Cline, Zed, Replit, Codeium, and Sourcegraph

- Productivity and data tools, by connecting AI with platforms and services like Google Drive, Slack, GitHub, Git, Postgres, Puppeteer, Notion, and Google Calendar

- Enterprise knowledge management

- Building more sophisticated AI agents for complex tasks

There’s a growing number of pre-built MCP servers available, showing a healthy and expanding ecosystem.

What’s Next for MCP

Looking ahead, MCP will likely get several new features:

- Better remote connections with standardized authentication (building on OAuth 2.1)

- Better support for complex agent workflows, including handling permissions across hierarchical agent systems

- Expanding beyond text to support audio, video, and other formats

- Possible formal standardization through a standards body

- Exploring stateless operations for serverless environments

We’ll probably see an MCP ecosystem and marketplace develop, with platforms for discovering and sharing MCP connectors. Industry-specific MCP servers will likely emerge too.

Industry adoption should increase significantly. OpenAI and Microsoft are already involved, and other major tech companies like Google, Amazon, and Apple may well get on board.

MCP has a good chance of becoming the common language for AI interactions with the real world. This standardization should reduce integration friction, development time, and costs for AI applications, ultimately enabling more sophisticated AI tools.

How MCP Compares to Similar Technologies

MCP draws inspiration from the Language Server Protocol (LSP), which standardizes language support across development tools. While LSP mostly responds to user actions in an IDE, MCP supports autonomous AI workflows.

MCP offers a standardized alternative to proprietary solutions like OpenAI’s function calling and plugins. It has a broader scope, covering not just tool use but also managing resources and prompts. OpenAI has recently adopted MCP in its Agents SDK, suggesting a move toward this open standard.

Existing frameworks like LangChain and LlamaIndex provide tools to build AI applications and connect with external systems, but they often need custom connectors for each integration. MCP offers a standardized bridge that simplifies these connections.

The W3C Web of Things (WoT) is a more general protocol for IoT devices and services. While WoT overlaps with some of MCP’s goals, MCP focuses specifically on AI features like prompts, resources, tools, and sampling, and is designed to be developer-friendly.

Here’s a quick comparison:

| Feature | MCP | LSP | OpenAI Function Calling/Plugins | LangChain/LlamaIndex | W3C Web of Things (WoT) |
|---|---|---|---|---|---|
| Primary Focus | AI agent interaction with external systems | Language support in IDEs | Tool use for OpenAI models | Building LLM-powered apps | Interoperability of IoT devices |
| Standardization | Open standard | Open standard | Proprietary | Framework/Toolkit | Open standard |
| Agent Autonomy | Designed for autonomous workflows | Primarily reactive | Limited | Supports agent building | Supports agent communication |
| Scope | AI models, data, tools, prompts | Programming languages | Specific OpenAI integrations | Broad application development | Devices, services, resources |
| Community Adoption | Growing | Mature | Large within OpenAI ecosystem | Large | Established |
| Key Primitives | Resources, Prompts, Tools, Sampling | Requests and notifications (e.g. completion, hover, diagnostics) | Functions | Chains, Agents, Data Loaders | Properties, Actions, Events |

Security Considerations

Security is crucial when implementing MCP since it connects AI agents with sensitive data and external systems. Key security principles include:

- Getting explicit user consent and keeping users in control of data access

- Maintaining data privacy

- Being careful with tool safety (especially with code execution)

- Getting user approval for LLM sampling requests
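One way a host might enforce the consent principle is to put an approval step in front of every tool call. This is a minimal sketch, assuming a hypothetical `approve` callback that asks the user (in a real app, probably a confirmation dialog); none of these names come from the MCP SDKs.

```python
from typing import Any, Callable, Dict


class ConsentDenied(Exception):
    """Raised when the user declines a tool call."""


def call_with_consent(
    tool_name: str,
    arguments: Dict[str, Any],
    tool: Callable[..., Any],
    approve: Callable[[str, Dict[str, Any]], bool],
) -> Any:
    """Run the tool only if the approval callback returns True."""
    if not approve(tool_name, arguments):
        raise ConsentDenied(f"user declined call to {tool_name!r}")
    return tool(**arguments)
```

The same gate could wrap sampling requests, so a server can’t trigger LLM completions without the user seeing what is being asked.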

There are several security risks to watch out for:

- Compromised MCP servers could lead to token theft

- Indirect prompt injection, where malicious instructions hidden in retrieved content or tool output steer the model

- Giving excessive permissions to MCP servers

- Data aggregation, where one server with access to multiple services concentrates data that an attacker could combine

- Context leakage and exposure of sensitive metadata

To reduce these risks:

- Follow OAuth 2.1 security best practices for HTTP-based transports

- Use robust consent and authorization flows

- Document security implications clearly

- Implement appropriate access controls

- Follow security best practices in integrations

- Use TLS for network transport

- Store credentials securely

- Implement strict session management

- Audit and sanitize context

- Encrypt context data

- Enforce granular user permissions

- Monitor for suspicious activity

- Establish incident response protocols
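As one small illustration of “audit and sanitize context”, here’s a sketch that redacts obvious credential-looking strings before text is handed to a model. The regular expression is a deliberately simple assumption and would miss plenty of real secrets, so treat it as a starting point rather than a complete filter.

```python
import re

# Matches things like "password: hunter2" or "API_KEY=abc123" (illustrative pattern only).
_SECRET_PATTERN = re.compile(r"(?i)\b(api[_-]?key|token|password)\s*[:=]\s*\S+")


def sanitize_context(text: str) -> str:
    """Replace the value of credential-looking key/value pairs with a placeholder."""
    return _SECRET_PATTERN.sub(r"\1=[REDACTED]", text)


if __name__ == "__main__":
    print(sanitize_context("db password: hunter2 and API_KEY=abc123"))
```

Redaction like this works best as one layer alongside the access controls and permission checks listed above, not as a replacement for them.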

Final Thoughts

The Model Context Protocol is a significant step toward solving the challenges of connecting AI models with the vast array of data and tools available.

It started with the need to overcome LLM isolation and the complexities of custom integrations. MCP has developed quickly with growing community support, resulting in robust features and diverse real-world applications. Looking forward, MCP will continue to evolve with new features, a growing ecosystem, and widespread industry adoption.

While it offers unique benefits compared to similar technologies, security remains critical in implementation. By following key security principles and best practices, developers can use MCP to build smarter, more capable AI systems that will shape the future of artificial intelligence.

Related Resources

- Anthropic’s MCP Introduction: https://www.anthropic.com/news/model-context-protocol – The official announcement from Anthropic explaining the motivation and basics of MCP.

- MCP Official Documentation: https://modelcontextprotocol.io/introduction – The main resource for understanding the protocol, its architecture, and how to get started.

- MCP Specification: https://spec.modelcontextprotocol.io/ – The detailed technical specification of the protocol, including architecture, message types, and transport layers.

- MCP GitHub Repository: https://github.com/modelcontextprotocol – Access the open-source code, SDKs, and contribute to the development.

- Cisco Security Blog on MCP: https://community.cisco.com/t5/security-blogs/ai-model-context-protocol-mcp-and-security/ba-p/5274394 – A good overview of the security considerations and best practices when implementing MCP.