NeuTTS Air is an open-source, on-device, text-to-speech (TTS) model that provides super-realistic, human-like speech to any device.

It’s designed for privacy and security, and its smaller models are both faster and more affordable than many alternatives such as ElevenLabs.

The model runs locally without needing to connect to the internet. This means your voice data stays on your device.

Features

- On-device voice cloning: Runs locally without sending audio to the cloud.

- 3-second voice capture: Needs only a short clean WAV file and matching transcript to clone a speaker.

- GGUF-optimized models: Available in Q4 and Q8 quantized formats for fast CPU inference via llama-cpp-python.

- Real-time performance: Generates speech in near real time on mid-range hardware.

- Watermarked output: Embeds a perceptual watermark (Perth) to deter misuse.

- Lightweight architecture: Built on Qwen 0.5B + NeuCodec for a balance of speed, size, and realism.
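For a rough sense of what the Q4 and Q8 formats mean for memory footprint, the sketch below estimates weight storage from a parameter count and a number of bits per weight. It assumes a nominal 0.5 billion parameters (taken from the "Qwen 0.5B" name above) and ignores quantization scales, file metadata, and the codec, so real GGUF files will be somewhat larger than these figures.

```python
def approx_weight_size_mb(num_params: float, bits_per_weight: float) -> float:
    """Estimate weight storage in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bits_per_weight / 8 / 1e6


# Assumption: nominal parameter count implied by the "0.5B" backbone name.
NOMINAL_PARAMS = 0.5e9

for label, bits in [("FP16", 16), ("Q8", 8), ("Q4", 4)]:
    size = approx_weight_size_mb(NOMINAL_PARAMS, bits)
    print(f"{label}: ~{size:.0f} MB")
```

Under these assumptions, Q4 needs about a quarter of the memory of 16-bit weights, which is one reason it suits CPU-only devices.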

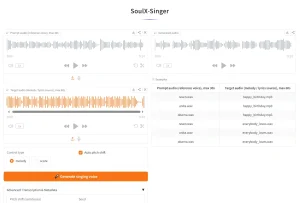


Use Cases

- Embedded voice assistants: Deploy custom-branded voice responses in kiosks, toys, or smart devices without cloud dependency.

- Content creators: Rapidly prototype character voices for animations, games, or audiobooks using personal or sample voices.

- Compliance-sensitive apps: Generate patient reminders or internal comms in regulated industries where data can’t leave the device.

- Low-bandwidth environments: Run TTS on remote field devices or offline laptops where internet is unreliable.

- Voice preservation: Archive a loved one’s voice from old recordings and generate new spoken messages (with ethical caution).

How To Use It

1. Visit the NeuTTS Air Hugging Face Space.

2. Upload a reference audio sample. This should be a short .wav file of the voice you want to clone.

3. Provide the reference text. Type the exact words spoken in your audio sample.

4. Enter the new text to synthesize. This is the text you want the cloned voice to speak.

5. Click the “Submit” button to generate the speech.

Running Locally

1. Install espeak, which is needed for phoneme conversion:

- macOS:

    brew install espeak

- Ubuntu/Debian:

    sudo apt install espeak

- Windows: Install eSpeak NG and set the environment variable (PowerShell):

    $env:PHONEMIZER_ESPEAK_LIBRARY = "C:\Program Files\eSpeak NG\libespeak-ng.dll"

2. Clone the repo from GitHub:

    git clone https://github.com/neuphonic/neutts-air.git
    cd neutts-air

3. Install Python dependencies:

    pip install -r requirements.txt

4. If you want to use the quantized Q4 or Q8 models (recommended for speed):

    pip install llama-cpp-python

For GPU acceleration (CUDA on NVIDIA, Metal on Mac), follow the llama-cpp-python install guide.

5. Use the built-in script with sample audio:

    python -m examples.basic_example \
      --input_text "Hello, I'm testing NeuTTS Air." \
      --ref_audio samples/dave.wav \
      --ref_text samples/dave.txt

6. Use in your code:

    from neuttsair.neutts import NeuTTSAir
    import soundfile as sf

    # Load the Q4 GGUF backbone and the NeuCodec codec, both on CPU.
    tts = NeuTTSAir(
        backbone_repo="neuphonic/neutts-air-q4-gguf",
        backbone_device="cpu",
        codec_repo="neuphonic/neucodec",
        codec_device="cpu",
    )

    # The transcript must match the words spoken in the reference audio.
    with open("samples/dave.txt") as f:
        ref_text = f.read().strip()

    # Encode the reference voice, then synthesize new text with it.
    ref_codes = tts.encode_reference("samples/dave.wav")
    wav = tts.infer("This is my cloned voice.", ref_codes, ref_text)

    # Write the result as 24 kHz audio.
    sf.write("output.wav", wav, 24000)

7. For lowest latency, pre-encode your reference audio once and reuse the codes. Also consider the ONNX decoder if you’re not using GGUF.
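The advice to encode the reference once and reuse the codes can be sketched as a small in-memory cache. This is an illustration rather than part of the NeuTTS Air API: `ReferenceCache` is a hypothetical helper that wraps any function mapping an audio path to reference codes (such as `tts.encode_reference` from step 6), and `fake_encode` is a stand-in so the sketch runs without the model installed.

```python
from pathlib import Path
from typing import Any, Callable, Dict


class ReferenceCache:
    """Hypothetical helper: encode each reference audio file at most once."""

    def __init__(self, encode: Callable[[str], Any]) -> None:
        # `encode` maps an audio path to reference codes,
        # e.g. tts.encode_reference in a real setup.
        self._encode = encode
        self._codes: Dict[str, Any] = {}

    def get(self, audio_path: str) -> Any:
        # Normalize the path so the same file always hits the same entry.
        key = str(Path(audio_path).resolve())
        if key not in self._codes:
            self._codes[key] = self._encode(audio_path)
        return self._codes[key]


def fake_encode(path: str) -> list:
    # Stand-in encoder so this sketch runs without NeuTTS Air.
    print(f"encoding {path}")
    return [1, 2, 3]


cache = ReferenceCache(fake_encode)
first = cache.get("samples/dave.wav")   # encodes the file
second = cache.get("samples/dave.wav")  # served from the cache
```

In a real application you would pass `tts.encode_reference` instead of `fake_encode` and hand the cached codes to each `tts.infer` call, so only the first request for a given voice pays the encoding cost.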

Pros

- Privacy and Security: All processing is done on-device, so your data remains private.

- Real-Time Performance: Optimized for real-time generation on mid-range devices.

- Instant Voice Cloning: Create custom voices with just a few seconds of audio.

- Built-In Security: Outputs are watermarked to support authenticity checks and responsible use.

- Open-Source: Freely available under the Apache 2.0 license.

Cons

- English Only: Currently, the model only supports English.

- Technical Setup: Requires some command-line knowledge to get started.

- Reference Audio Quality: The quality of the cloned voice is highly dependent on the quality of the reference audio.

Related Resources

- NeuTTS Air GitHub repo: Full source code and examples.

- llama-cpp-python documentation: Required for GGUF model support.

- NeuCodec model: The neural audio codec powering the output.

- Perth Watermarker: Learn how generated audio is ethically marked.

FAQs

Q: Do I need a GPU to run NeuTTS Air?

A: No. It’s optimized for CPU via GGUF and runs well on modern laptops or even a Raspberry Pi 4. GPU support is possible through llama-cpp-python with CUDA or Metal, but it is not required.

Q: Can I clone any voice from a YouTube clip?

A: Technically yes, but results depend on audio quality. You’ll need to extract a clean 3–15 second mono clip and transcribe it accurately. Background music or overlapping speech will hurt performance.
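A quick pre-flight check can catch clips that are stereo or fall outside the 3–15 second range before you try to clone from them. The sketch below uses only Python's standard `wave` module, which reads PCM-encoded WAV files; `check_reference_wav` is a hypothetical helper name, and it cannot detect background music or overlapping speech.

```python
import wave


def check_reference_wav(path: str, min_s: float = 3.0, max_s: float = 15.0) -> list:
    """Return a list of problems; an empty list means the clip looks usable."""
    problems = []
    with wave.open(path, "rb") as wav_file:
        channels = wav_file.getnchannels()
        # Duration in seconds = number of frames / frames per second.
        duration = wav_file.getnframes() / wav_file.getframerate()
    if channels != 1:
        problems.append(f"expected mono audio, found {channels} channels")
    if not (min_s <= duration <= max_s):
        problems.append(f"duration {duration:.1f}s is outside {min_s}-{max_s}s")
    return problems
```

For example, `check_reference_wav("samples/dave.wav")` returns an empty list when the file is mono and within the length limits.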

Q: Why do I need espeak?

A: NeuTTS Air uses espeak to convert input text into phonemes, a critical step for natural pronunciation. It’s a legacy dependency but hard to replace without retraining the model.
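Because a missing espeak install is a common source of setup errors, it can help to confirm that it is discoverable before loading the model. The sketch below is one way to do that, assuming espeak is reachable either through the `PHONEMIZER_ESPEAK_LIBRARY` variable from the Windows setup step or as an `espeak`/`espeak-ng` executable on `PATH`; `find_espeak` is a hypothetical helper, not part of NeuTTS Air.

```python
import os
import shutil
from typing import Optional


def find_espeak() -> Optional[str]:
    """Return a path to an espeak library or executable, or None if not found."""
    # Prefer an explicit library path set through the environment.
    library = os.environ.get("PHONEMIZER_ESPEAK_LIBRARY")
    if library and os.path.isfile(library):
        return library
    # Otherwise look for an executable on PATH.
    for name in ("espeak-ng", "espeak"):
        found = shutil.which(name)
        if found:
            return found
    return None


location = find_espeak()
print(location or "espeak not found; install it before running NeuTTS Air")
```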

Q: Is the voice cloning perfect?

A: It captures tone and rhythm well, but don’t expect 100% identity. It works best for consistent, conversational speech, not singing, shouting, or emotional extremes.

Q: Can I use this commercially?

A: Yes. The model is open-source (Apache 2.0), but remember that cloned voices may have legal or ethical implications depending on consent and jurisdiction.