CodeMachine CLI: Multi-Agent Orchestration for Production Code

Open-source platform coordinating specialized AI agents in parallel workflows to generate production-ready codebases from specification files.

CodeMachine CLI is an open-source orchestration platform that coordinates multiple AI agents to transform specification files into production-ready codebases.

The orchestration itself runs locally. It breaks complex development tasks into manageable workflows that AI agents execute in parallel and sequential patterns.

Features

- Multi-Agent Orchestration: Coordinates multiple specialized AI agents in hierarchical and parallel configurations, with each agent handling specific aspects of development (architecture, implementation, testing, review).

- Heterogeneous Model Support: Assigns different AI models to different tasks based on their strengths. For example, use Gemini for planning, Claude for implementation, and another model for code review.

- Massively Parallel Execution: Deploys sub-agents that work simultaneously on different components (frontend, backend, database schemas).

- Persistent Workflow State: Maintains context across extended development sessions lasting hours or days.

- File-Based Memory System: Stores agent memory and context in structured files. This allows workflows to resume after interruptions and maintain consistency across all generated components.

- CLI-Native Architecture: Integrates directly with existing AI coding CLIs (Claude Code, Codex, Cursor, OpenCode) rather than requiring proprietary interfaces or web-based platforms.

- Customizable Workflow Templates: Provides a default spec-to-code blueprint while supporting the creation of custom workflows for specialized tasks, such as framework migrations, documentation generation, or legacy system refactoring.

- Production-Ready Output: Generates complete codebases with proper project structure, configuration files, testing infrastructure, and deployment scripts, rather than isolated code snippets.
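The following minimal Python sketch illustrates the mix of sequential and parallel execution described above. It first plans, then fans out to independent components, then reviews everything. It is not CodeMachine's implementation or API. `run_agent` is a hypothetical stand-in for handing a task to an AI coding CLI, and the engine labels and component names are illustrative only.

```python
import asyncio

# Hypothetical stand-in for invoking an AI coding CLI. A real orchestrator
# would spawn a subprocess here; this sketch just echoes engine and task.
async def run_agent(engine: str, task: str) -> str:
    await asyncio.sleep(0)  # yield control, as real I/O would
    return f"{engine}:{task}"

async def orchestrate(spec: str) -> dict[str, object]:
    results: dict[str, object] = {}
    # Sequential phase: planning must finish before implementation starts.
    results["plan"] = await run_agent("planner", f"plan for {spec}")
    # Parallel phase: independent components are built concurrently.
    components = ["frontend", "backend", "database"]
    built = await asyncio.gather(
        *(run_agent("implementer", c) for c in components)
    )
    # gather() returns results in the same order as its arguments.
    results["components"] = dict(zip(components, built))
    # Sequential phase: the reviewer sees every component's output.
    results["review"] = await run_agent("reviewer", ", ".join(built))
    return results

if __name__ == "__main__":
    print(asyncio.run(orchestrate("todo app")))
```

The design point is the dependency structure. Phases that depend on earlier output are awaited one after another, and only work with no mutual dependencies is gathered concurrently.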

Use Cases

- Legacy system refactoring: Modernize outdated codebases with consistent architectural patterns.

- Framework migration: Convert projects between React, Vue, Angular or other technology stacks.

- Documentation generation: Create comprehensive technical documentation from existing code.

- Microservice architecture: Build coordinated service ecosystems with proper APIs and data flow.

- Prototype development: Generate complete working applications from concept specifications.

Case Studies

The CodeMachine team conducted a direct comparison using the Sustaina Platform, a full-stack ESG compliance system. This project was substantial, involving 7 microservices, over 500 files, and 60,000+ lines of code. The tech stack included React 18, FastAPI, NestJS, PostgreSQL, MongoDB, Redis, and Kubernetes.

The Results:

When using CodeMachine for autonomous orchestration, the entire MVP was generated in approximately 8 hours.

In contrast, a control test using standard “Regular AI Agents” (like Claude Code or Cursor) with manual orchestration and human review took significantly longer. The manual approach required 140-200 hours just for service implementation due to constant prompting and context switching. Architecture planning took humans 4-6 hours, whereas CodeMachine automated it in 30 minutes.

Key Takeaways from the Study

- Total Developer Time: Reduced from ~200-300 hours (manual) to ~8 hours (CodeMachine).

- Efficiency Gain: The autonomous approach was 25-37x faster.

- Code Consistency: The manual approach resulted in inconsistent patterns across services, while CodeMachine maintained a unified architecture throughout all components.

- Context Retention: Standard agents lost context between sessions, requiring repeated explanations. CodeMachine maintained full project context and cross-service awareness throughout the build.
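The quoted efficiency range follows from dividing the reported manual totals by the roughly 8-hour CodeMachine run:

```latex
\text{speedup} = \frac{T_{\text{manual}}}{T_{\text{CodeMachine}}}, \qquad
\frac{200\ \text{h}}{8\ \text{h}} = 25, \qquad
\frac{300\ \text{h}}{8\ \text{h}} = 37.5
```

The 25-37x figure is therefore the reported manual range divided by 8 hours, with the upper bound rounded down.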

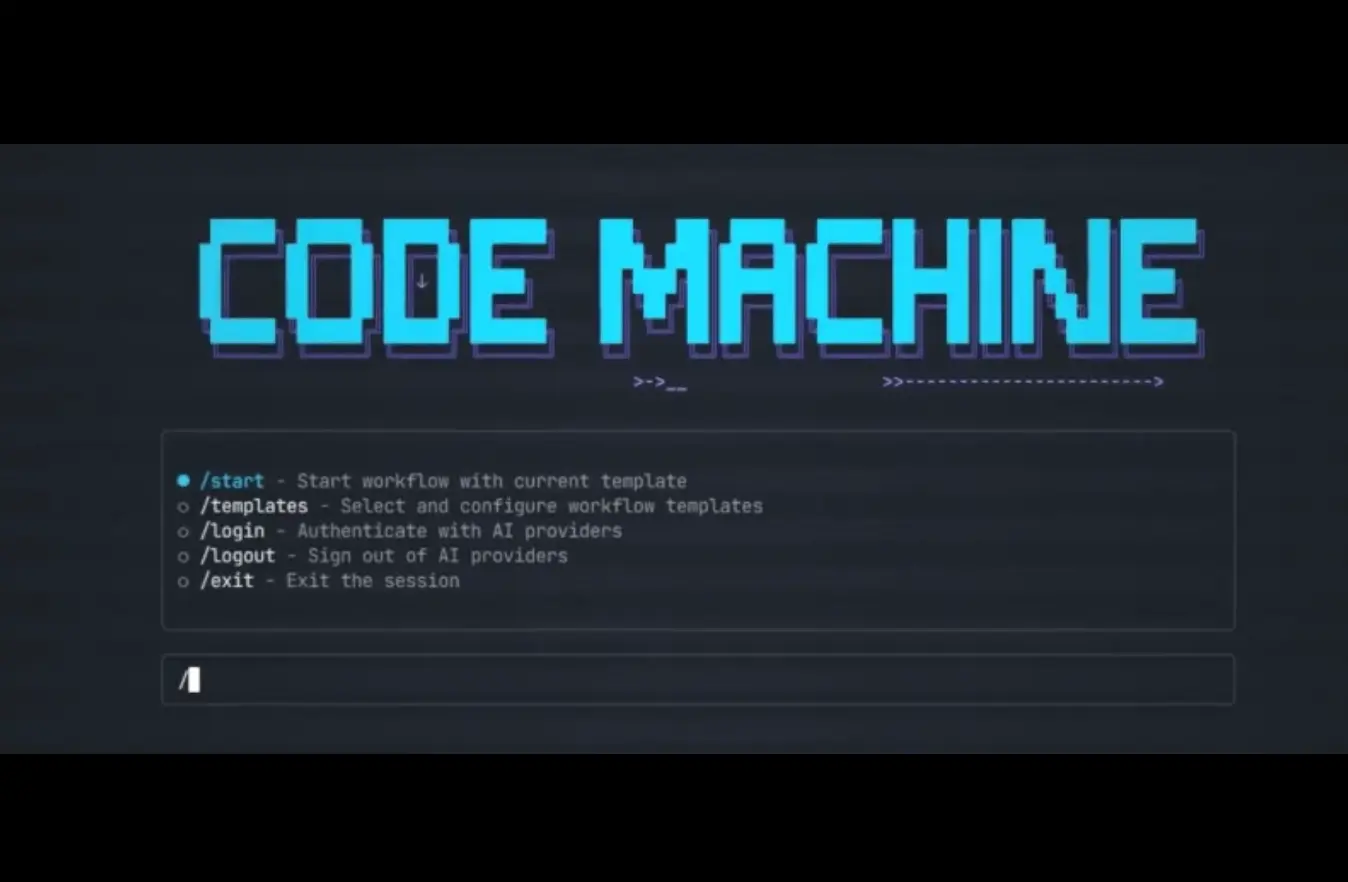

How to Use It

1. To get started, make sure you have Node.js 20.10.0 or higher and npm 9.0.0 or higher installed. You also need at least one AI coding agent CLI installed and authenticated (such as Codex CLI, Claude Code CLI, or OpenCode CLI).

2. Install the CodeMachine CLI globally:

npm install -g codemachine

3. Create a new project directory and initialize CodeMachine inside it. This creates a .codemachine/ workspace in your directory.

mkdir my-awesome-project

cd my-awesome-project

codemachine

4. Locate the inputs/specifications.md file created in the previous step. Open it and write your prompt. Be specific.

Example: "Create a small, single-user to-do application that MUST support create, read, update, and delete operations."

5. Start the workflow. The system will architect the blueprint, formulate a plan, and begin writing code.

/start

6. Watch as it generates code, runs validation checks, and creates automation scripts for testing.
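If you are unsure whether your machine meets the version requirements from step 1, the Python sketch below checks them. It is a convenience script, not part of CodeMachine. It assumes `node` and `npm` are on your PATH and that `--version` prints a plain version string such as `v20.10.0` or `9.8.1`.

```python
import shutil
import subprocess

# Minimum versions taken from the prerequisites in step 1.
REQUIRED = {"node": (20, 10, 0), "npm": (9, 0, 0)}

def parse_version(text: str) -> tuple[int, ...]:
    """Turn 'v20.10.0' or '9.8.1' into a comparable tuple like (20, 10, 0)."""
    core = text.strip().lstrip("v").split("-")[0]  # drop any pre-release suffix
    return tuple(int(part) for part in core.split("."))

def meets(found: str, required: tuple[int, ...]) -> bool:
    # Tuples compare element by element, which matches version ordering
    # for plain MAJOR.MINOR.PATCH strings.
    return parse_version(found) >= required

def check_tools() -> dict[str, bool]:
    status = {}
    for tool, minimum in REQUIRED.items():
        exe = shutil.which(tool)  # None if the tool is not on PATH
        if exe is None:
            status[tool] = False
            continue
        out = subprocess.run([exe, "--version"], capture_output=True, text=True)
        status[tool] = meets(out.stdout, minimum)
    return status

if __name__ == "__main__":
    for tool, ok in check_tools().items():
        print(f"{tool}: {'OK' if ok else 'missing or too old'}")
```

Comparing integer tuples avoids the classic string-comparison bug, where "9.0.0" would sort after "20.10.0".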

Pros

- Time savings: Reduces development time from weeks to hours for complex projects.

- Consistent code quality: Maintains uniform patterns and architecture across all generated code.

- Local execution: Keeps your code and intellectual property on your own machines.

- Multiple AI model support: Uses multiple AI models for their specialized strengths.

- Scalable architecture: Handles projects from simple scripts to enterprise-level applications.

- Production-ready: Includes testing, validation, and deployment configurations.

Cons

- Setup complexity: Requires installing and authenticating at least one AI coding CLI (several, if you want multi-model workflows) and configuring your environment.

- Learning curve: Understanding workflow templates and specification writing takes practice.

- Hardware demands: Running multiple AI agents simultaneously requires substantial system resources.

Related Resources

- OpenCode CLI: Multi-provider AI coding assistant supporting Anthropic, OpenAI, Google, and other models.

- Claude Code Documentation: Anthropic’s official guide to their CLI-based coding assistant, one of the primary engines supported by CodeMachine.

- Gemini CLI: An open-source, command-line AI tool that brings Google’s Gemini models directly into your terminal.

FAQs

Q: Does CodeMachine require an internet connection to function?

A: Yes. The platform itself runs locally, but it coordinates with cloud-based AI engines (Claude Code, OpenCode, etc.) that require internet connectivity. The orchestration logic and workflow execution happen on your machine, but the actual code generation relies on these external AI services.

Q: Can I use CodeMachine for free, or does it require payment?

A: CodeMachine itself is open-source and free to use. The costs come from the AI engines it orchestrates. Claude Code requires a paid Anthropic subscription or API usage billing, Cursor has its own pricing, and OpenCode's costs depend on which AI provider you configure. Budget according to the engines you choose and your expected usage volume.

Q: What happens if a workflow fails partway through execution?

A: The file-based memory system preserves all completed work. You can examine the logs in .codemachine/outputs/ to diagnose the failure point, adjust your specification or agent configuration, and resume from specific workflow steps rather than restarting completely. The platform maintains context about what’s already been generated.
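The resume behavior described above amounts to saving each completed step to disk and skipping it on the next run. The Python sketch below illustrates only that idea. The JSON checkpoint file, its layout, and the step names are hypothetical and do not reflect CodeMachine's actual files under .codemachine/.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable

# Hypothetical checkpoint layout: a JSON object mapping step name -> saved output.
# This is NOT CodeMachine's on-disk format; it only illustrates resumable runs.

def run_workflow(
    steps: list[tuple[str, Callable[[], str]]], checkpoint: Path
) -> list[str]:
    """Run steps in order, skipping any already recorded in `checkpoint`.

    Returns the names of the steps actually executed on this run.
    """
    done: dict[str, str] = (
        json.loads(checkpoint.read_text()) if checkpoint.exists() else {}
    )
    executed = []
    for name, action in steps:
        if name in done:
            continue  # completed in an earlier run; keep its saved output
        done[name] = action()  # may raise; earlier steps are already saved
        executed.append(name)
        # Persist after every step so a crash loses at most the current one.
        checkpoint.write_text(json.dumps(done, indent=2))
    return executed

if __name__ == "__main__":
    state = Path(tempfile.mkdtemp()) / "state.json"
    calls = {"test": 0}

    def flaky_tests() -> str:
        calls["test"] += 1
        if calls["test"] == 1:
            raise RuntimeError("engine timed out")  # simulated failure
        return "tests passed"

    steps = [
        ("plan", lambda: "plan ready"),
        ("implement", lambda: "code written"),
        ("test", flaky_tests),
    ]
    try:
        run_workflow(steps, state)
    except RuntimeError as err:
        print(f"first run failed: {err}")
    print("second run executed:", run_workflow(steps, state))  # ['test']
```

Writing the checkpoint after every step, rather than once at the end, is what lets a second run pick up at the failed step instead of starting over.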

Q: How does CodeMachine compare to using Claude Code or Cursor directly?

A: Direct use of AI coding tools requires manual orchestration. You prompt for architecture, then implementation, then testing, while managing context and consistency yourself. CodeMachine automates this orchestration, maintains persistent context across all phases, runs parallel agents on different components, and keeps the architecture consistent throughout. In the Sustaina case study above, this produced a 25-37x time saving on a complex project.

Q: Can I customize which AI models handle which development tasks?

A: Absolutely. The workflow configuration lets you assign specific engines and models to individual steps. You might use a reasoning-focused model for architecture planning, a code-specialized model for implementation, and a different model for code review, each selected for its strengths on that type of task.
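Conceptually, per-step assignment is a lookup from workflow step to an engine and model, with a fallback for unassigned steps. The Python sketch below shows that idea only. The step names, the engine labels (borrowed from the CLIs named in this article), and the model names are placeholders, not CodeMachine's configuration schema or real model identifiers.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class EngineChoice:
    engine: str  # which CLI to invoke (illustrative label, not a real engine ID)
    model: str   # which model that CLI should use (placeholder name)

# Hypothetical per-step assignments, mirroring the example in the answer above.
ASSIGNMENTS = {
    "architecture": EngineChoice("gemini", "reasoning-model"),
    "implementation": EngineChoice("claude", "code-model"),
    "review": EngineChoice("codex", "review-model"),
}
# Used for any step without an explicit assignment.
DEFAULT = EngineChoice("claude", "code-model")

def engine_for(step: str) -> EngineChoice:
    """Return the engine assigned to a step, or the default if unassigned."""
    return ASSIGNMENTS.get(step, DEFAULT)

if __name__ == "__main__":
    for step in ["architecture", "implementation", "review", "docs"]:
        choice = engine_for(step)
        print(f"{step:>14} -> {choice.engine} / {choice.model}")
```

A frozen dataclass keeps each assignment immutable and comparable by value. The explicit default means new workflow steps still run without first editing the mapping.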