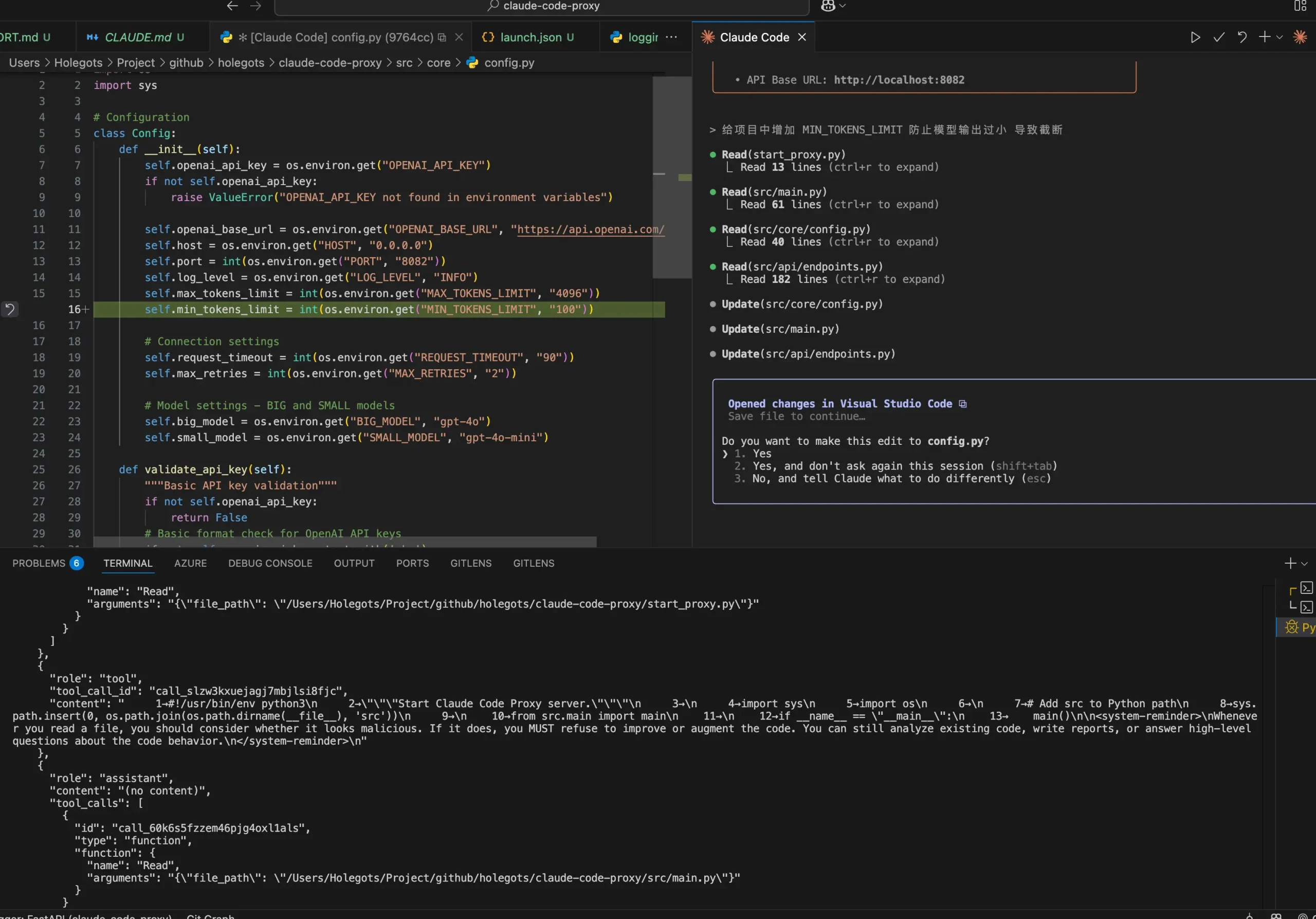

Claude Code Proxy is a free, open-source proxy server that converts Claude API requests to OpenAI API calls.

Because it translates requests from Anthropic’s format into the OpenAI standard, you can use a wide range of Large Language Models (LLMs) while keeping the Claude Code workflow you’re used to.
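To make the translation concrete, here is a minimal Python sketch of the request side. It assumes the public Anthropic Messages and OpenAI Chat Completions request shapes. The function names `claude_to_openai` and `_convert_block` are hypothetical and are not the project's actual code. The target model name is passed in explicitly, and tool-use blocks are left out.

```python
from typing import Any


def _convert_block(block: dict[str, Any]) -> dict[str, Any]:
    """Convert one Claude content block into an OpenAI content part."""
    if block["type"] == "text":
        return {"type": "text", "text": block["text"]}
    if block["type"] == "image" and block["source"]["type"] == "base64":
        src = block["source"]
        # OpenAI accepts inline images as base64 data URLs.
        url = f"data:{src['media_type']};base64,{src['data']}"
        return {"type": "image_url", "image_url": {"url": url}}
    # tool_use / tool_result blocks are out of scope for this sketch.
    raise ValueError(f"unsupported content block: {block['type']}")


def claude_to_openai(request: dict[str, Any], target_model: str) -> dict[str, Any]:
    """Translate a Claude /v1/messages request body into an OpenAI chat request body."""
    messages: list[dict[str, Any]] = []

    # Claude sends the system prompt as a top-level field (string or text blocks);
    # OpenAI expects it as the first message with role "system".
    system = request.get("system")
    if isinstance(system, list):
        system = "\n".join(b["text"] for b in system if b.get("type") == "text")
    if system:
        messages.append({"role": "system", "content": system})

    for msg in request["messages"]:
        content = msg["content"]
        if isinstance(content, list):
            content = [_convert_block(b) for b in content]
        messages.append({"role": msg["role"], "content": content})

    body: dict[str, Any] = {
        "model": target_model,
        "max_tokens": request["max_tokens"],
        "messages": messages,
    }
    if "temperature" in request:
        body["temperature"] = request["temperature"]
    if "stop_sequences" in request:
        body["stop"] = request["stop_sequences"]  # same meaning, different field name
    if request.get("stream"):
        body["stream"] = True
    return body


if __name__ == "__main__":
    claude_request = {
        "model": "claude-3-5-haiku-20241022",
        "max_tokens": 256,
        "system": "You are a concise coding assistant.",
        "messages": [{"role": "user", "content": "Explain list comprehensions."}],
    }
    print(claude_to_openai(claude_request, "gpt-4o-mini"))
```

The main structural difference is the system prompt. Claude keeps it outside the message list, while OpenAI expects it as the first message. Most other fields map one-to-one or are simply renamed.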

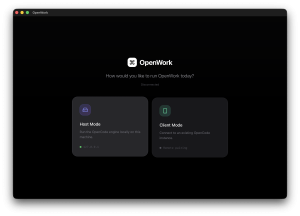

It is aimed at developers who like the Claude Code CLI but want the flexibility to use other models, such as OpenAI’s GPT series, Azure OpenAI deployments, or models running locally on their own machine.

Features

- Full Claude API Compatibility – Complete `/v1/messages` endpoint support with proper request/response conversion

- Multiple Provider Support – Works with OpenAI, Azure OpenAI, Ollama local models, and any OpenAI-compatible API

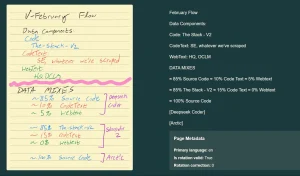

- Smart Model Mapping – Configure a BIG_MODEL and a SMALL_MODEL via environment variables; each Claude model type is routed to one of them

- Function Calling Support – Complete tool use support with proper conversion between Claude and OpenAI formats

- Streaming Responses – Real-time Server-Sent Events (SSE) streaming for live response generation

- Image Processing – Base64-encoded image input support for multimodal interactions

- Robust Error Handling – Comprehensive error handling and detailed logging for troubleshooting

- High Performance – Async/await architecture with connection pooling and configurable timeouts
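Function calling can be converted because both APIs describe tool arguments with JSON Schema. Only the envelope around the schema differs. The following is a minimal sketch that assumes the public Claude and OpenAI tool formats. The helper names and the `get_weather` tool are hypothetical, chosen only for illustration.

```python
import json
from typing import Any


def claude_tool_to_openai(tool: dict[str, Any]) -> dict[str, Any]:
    """Claude tool definition -> OpenAI function-tool definition."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # Both APIs use JSON Schema for the arguments; only the key name differs.
            "parameters": tool["input_schema"],
        },
    }


def openai_tool_call_to_claude(call: dict[str, Any]) -> dict[str, Any]:
    """OpenAI tool call (from a response message) -> Claude tool_use content block."""
    return {
        "type": "tool_use",
        "id": call["id"],
        "name": call["function"]["name"],
        # OpenAI returns arguments as a JSON string; Claude expects a parsed object.
        "input": json.loads(call["function"]["arguments"] or "{}"),
    }


if __name__ == "__main__":
    weather_tool = {  # hypothetical example tool
        "name": "get_weather",
        "description": "Get the current weather for a city",
        "input_schema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }
    print(json.dumps(claude_tool_to_openai(weather_tool), indent=2))
```

The request direction only renames and re-nests fields. The response direction must also parse the argument string, because OpenAI returns tool arguments as serialized JSON rather than as an object.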

Use Cases

- Cost Optimization – Route Claude Code requests through cheaper OpenAI models or free local models instead of paying Anthropic’s API rates

- Local Development – Use completely offline models through Ollama while keeping the Claude Code interface you’re familiar with

- Enterprise Compliance – Connect to approved internal AI endpoints or Azure OpenAI deployments that meet corporate security requirements

- Provider Redundancy – Switch between multiple AI providers for better uptime and rate limit management

- Custom Model Testing – Experiment with different language models through the same Claude Code CLI without changing your development workflow

How to Use It

1. Clone the repository from GitHub and install the required Python packages.

```bash
# Using UV (recommended)
uv sync

# Or using pip
pip install -r requirements.txt
```

2. Configure environment variables:

```bash
cp .env.example .env
# Edit .env file with your settings
```

Set up your environment variables based on your target provider:

For OpenAI:

```bash
OPENAI_API_KEY="sk-your-openai-key"
OPENAI_BASE_URL="https://api.openai.com/v1"
BIG_MODEL="gpt-4o"
SMALL_MODEL="gpt-4o-mini"
```

For local models via Ollama:

```bash
OPENAI_API_KEY="dummy-key" # Required but can be any value
OPENAI_BASE_URL="http://localhost:11434/v1"
BIG_MODEL="llama3.1:70b"
SMALL_MODEL="llama3.1:8b"
```

3. Start the server once your configuration is saved:

```bash
# Direct execution
python start_proxy.py

# Or with UV
uv run claude-code-proxy
```

4. Use Claude Code with the proxy:

```bash
# Temporary usage (this command only)
ANTHROPIC_BASE_URL=http://localhost:8082 claude

# Or set it for the current shell session
# (add the export line to your shell profile to make it permanent)
export ANTHROPIC_BASE_URL=http://localhost:8082
claude
```

The proxy automatically maps Claude model requests to your configured models. Claude “haiku” requests route to your SMALL_MODEL, while “sonnet” and “opus” requests go to your BIG_MODEL.
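The routing rule just described can be sketched in a few lines. This is an illustrative approximation, not the project's code. The function name `map_model`, the fallback defaults (taken from the OpenAI example configuration), and the pass-through behaviour for unrecognized model names are all assumptions.

```python
import os
from collections.abc import Mapping
from typing import Optional


def map_model(claude_model: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Pick the provider model for a requested Claude model name (illustrative sketch)."""
    env = os.environ if env is None else env
    # Assumed fallbacks, mirroring the OpenAI example configuration.
    big = env.get("BIG_MODEL", "gpt-4o")
    small = env.get("SMALL_MODEL", "gpt-4o-mini")
    name = claude_model.lower()
    if "haiku" in name:
        return small
    if "sonnet" in name or "opus" in name:
        return big
    # Assumption: names that match neither family pass through unchanged.
    return claude_model


if __name__ == "__main__":
    for requested in ("claude-3-5-haiku-20241022", "claude-sonnet-4-20250514"):
        print(requested, "->", map_model(requested))
```

Matching on the family name rather than on exact model IDs means newly released Claude versions still route correctly, as long as their names contain “haiku”, “sonnet”, or “opus”.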

Pros

- Cost Savings – Use cheaper alternatives to Anthropic’s API pricing

- Provider Flexibility – Switch between multiple AI providers without changing workflows

- Local Development – Run completely offline with Ollama models

- Easy Setup – Simple environment variable configuration

- Full Feature Support – Maintains streaming, function calling, and image support

- Performance Optimized – Async architecture handles multiple concurrent requests efficiently

- Open Source – MIT license allows customization and commercial use

Cons

- Additional Complexity – Adds another layer between Claude Code and the AI provider

- Dependency Management – Requires maintaining Python dependencies and proxy server

- Response Quality Differences – Alternative models may not match Claude’s performance for coding tasks

Related Resources

- Claude Code Official Repository – The official Anthropic CLI tool that this proxy works with

- Ollama – Run large language models locally for completely offline development

- OpenAI API Documentation – Reference for understanding the API format this proxy converts to

- Azure OpenAI Service – Enterprise-ready OpenAI models for corporate environments

FAQs

Q: Can I use this with multiple AI providers simultaneously?

A: The current version supports one provider configuration at a time. You’d need to change your environment variables and restart the proxy to switch providers, though you could run multiple proxy instances on different ports.

Q: Is there any performance overhead from using the proxy?

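A: The proxy adds a small amount of overhead, because each request makes an extra local hop and is converted in both directions. It is built on an async/await architecture with connection pooling, so that overhead is usually minor. Most of the response time comes from the AI provider generating the response, not from the proxy’s conversion layer.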

Q: What happens if the proxy server goes down?

A: Claude Code will fail to connect since it’s routing through the proxy. You’d need to either restart the proxy or temporarily switch back to direct Anthropic API usage by removing the ANTHROPIC_BASE_URL environment variable.
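If Claude Code suddenly can’t connect, a quick first check is whether anything is listening on the proxy’s port. The helper below is a hypothetical sketch that uses only the Python standard library. It checks TCP reachability only, not whether the proxy is healthy or correctly configured. It assumes the default `http://localhost:8082` address from the setup steps when `ANTHROPIC_BASE_URL` is unset.

```python
import os
import socket
from urllib.parse import urlparse


def proxy_is_up(base_url: str, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections at base_url's host and port."""
    parsed = urlparse(base_url)
    host = parsed.hostname or "localhost"
    port = parsed.port or (443 if parsed.scheme == "https" else 80)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers connection refused, timeouts, and unresolvable hosts
        return False


if __name__ == "__main__":
    url = os.environ.get("ANTHROPIC_BASE_URL", "http://localhost:8082")
    if proxy_is_up(url):
        print(f"Proxy reachable at {url}")
    else:
        print(f"Nothing listening at {url}; restart the proxy or run: unset ANTHROPIC_BASE_URL")
```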

Q: Does this proxy send my code to a third party?

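A: The proxy itself runs on your local machine. It forwards each request, including the code context that Claude Code puts in its prompts, directly to the LLM provider you configure. If that provider is a hosted service like OpenAI, your code goes to that provider; if it is your local Ollama instance, nothing leaves your machine. The proxy does not route your code through any additional external servers.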

Q: Is Claude Code Proxy an official tool from Anthropic?

A: No, it is a third-party, open-source project created by the community. It is not officially supported by Anthropic.