The plan looked simple at first. I wanted to run a clean experiment: take the most popular free AI humanizers, run them through a consistent test, and see which ones could actually bypass AI content detectors like GPTZero.

Readers ask me about these tools all the time. Students hope to avoid false positives in their essays. Bloggers want a faster workflow without raising flags. Freelancers want to know which tools are reliable enough to use in client work. A data-driven comparison felt like the right answer.

So I pulled the highest-traffic humanizers on the market and set up a controlled testing pipeline. Everything was ready. But after the third tool, something felt off. After the fifth, I started reviewing my samples. After the seventh, the conclusion was hard to ignore.

This wasn’t a ranking. It was a failure report.

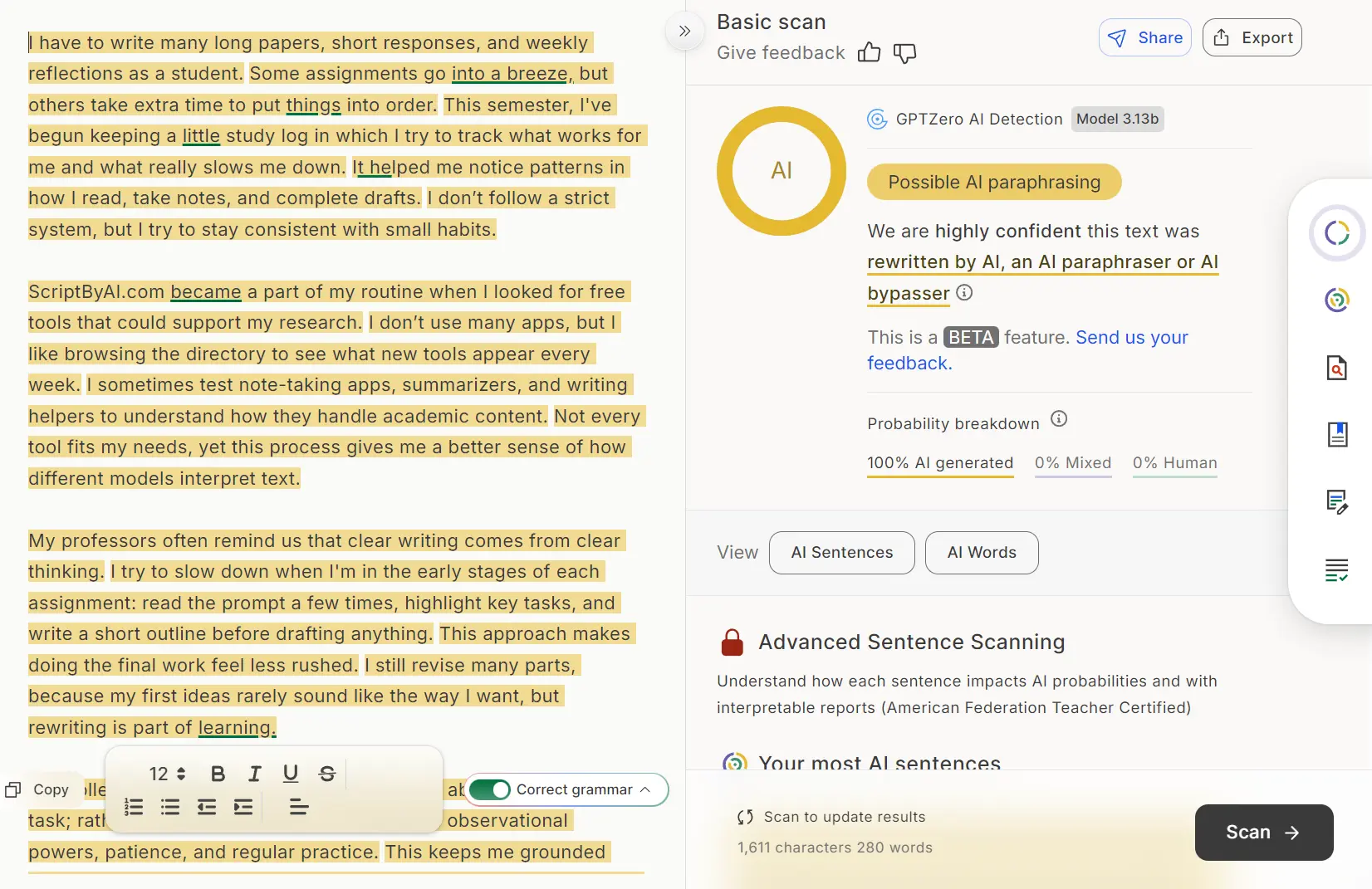

Every tool produced output that GPTZero marked as 100% AI-generated. Not “mostly AI.” Not borderline. A clean, consistent 100% for every sample.

What Drove This Investigation

AI humanizer tools exploded in popularity over the past year, and their marketing follows a familiar script. Visit any of these sites and you will see promises of “undetectable AI writing,” “bypassing detection systems,” and “human-like text generation.”

Reddit threads overflow with recommendations. TikTok videos demonstrate how to use them for school assignments. The tools present themselves as solutions to a real problem: AI detectors are everywhere now, affecting academic integrity policies, content monetization, and freelance client relationships.

But do these free tools actually work? I don’t mean according to their own descriptions, or in theory. I mean in practice: what happens when you run real text through them and test the output against an actual detector? That question deserved a methodical answer, which is why I built this test.

How I Built the Testing Process

To keep everything fair, I built a small, fixed workflow.

1. The Input Text

I used ChatGPT (GPT-5.1) to generate a 260-word sample in the style of a college student describing study habits. The writing was moderate in complexity, had mixed sentence lengths, and read at a natural pace. I kept it clean, balanced, and neutral.

2. The Tools

Tool selection focused on global traffic volume rather than marketing claims. I pulled the ten highest-traffic platforms in the AI humanizer category using SimilarWeb data, which meant testing whatever tools ordinary users actually find and use.

This group included general paraphrasing tools, services specifically marketing themselves as “undetectable AI” solutions, and platforms I kept seeing recommended in Reddit threads about bypassing detection.

All testing used free-tier accounts because that represents how most students and casual users access these tools. If a free tier cannot deliver results, the tool fails its primary audience.

3. The Procedure

For each tool:

- Paste the same student sample

- Choose its strongest rewriting mode

- Copy the output exactly

- Test it in GPTZero

- Record the score

- Read the rewritten version myself

Several tools were run more than once, in different modes. Everything else stayed fixed: same input, same detector, same conditions.
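For readers who want to repeat a test like this, the fixed procedure above can be sketched as a small harness. This is a minimal sketch under stated assumptions, not the tooling I used: `run_test`, `Run`, and the stub tool and detector are hypothetical names, and no humanizer or GPTZero is called through a real API here. What it shows is the structure: one fixed sample, every tool and mode, one detector, and the raw output kept so it can be read afterwards.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical interfaces, not real APIs:
# a rewriter takes (text, mode) and returns rewritten text;
# a detector takes text and returns an AI score in percent.
Rewriter = Callable[[str, str], str]
Detector = Callable[[str], float]


@dataclass
class Run:
    tool: str
    mode: str
    ai_score: float
    output: str  # kept verbatim so each rewrite can be read by hand later


def run_test(
    sample: str,
    tools: Dict[str, Tuple[Rewriter, List[str]]],
    detector: Detector,
) -> List[Run]:
    """Send the same sample through every tool and mode, scoring each output with one detector."""
    runs: List[Run] = []
    for name, (rewrite, modes) in tools.items():
        for mode in modes:
            output = rewrite(sample, mode)  # use the output exactly as returned
            runs.append(Run(name, mode, detector(output), output))
    return runs


if __name__ == "__main__":
    # Toy stubs purely to show the call shape; the score is a placeholder.
    def shout(text: str, mode: str) -> str:
        return text.upper()

    def placeholder_detector(text: str) -> float:
        return 0.0

    for run in run_test(
        "I study best in the morning.",
        {"toy-tool": (shout, ["standard", "creative"])},
        placeholder_detector,
    ):
        print(run.tool, run.mode, run.ai_score)
```

Passing the sample and the detector in as parameters, rather than letting each run choose its own, is what keeps every run under the same input and the same detector.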

The Results: Universal Failure

Expecting mixed results would be reasonable. Maybe seven or eight tools would fail, while two or three might score in the 60-70% range, showing at least some effectiveness. Or perhaps the premium-marketed tools would outperform the generic paraphrasers.

The actual results were starker and more consistent than any prediction: every tool produced output that GPTZero scored at exactly 100% AI-generated. The screenshot below shows a typical result:

Common Traits Across All Outputs

1. Shallow rewriting

Most tools replaced words or rearranged clauses, but the core structure stayed the same.

2. Rhythm stayed mechanical

The rewritten text lacked the uneven pacing that comes naturally to human writing.

3. No sense of human reasoning

The outputs didn’t add small reflections, subtle shifts in tone, or personal decisions.

4. Detectors analyze deeper signals

GPTZero looks past word choice to statistical patterns such as burstiness, predictability, and structural consistency, and the rewrites left those patterns largely intact.

5. Free tiers limit rewriting depth

Most free tools only perform light paraphrasing, far from what is needed to alter deeper writing patterns.

6. Over-substitution in some outputs

A few tools pushed too many synonyms into the text. It didn’t help. If anything, the writing felt strange and more artificial.
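Burstiness, mentioned in traits 2 and 4, is easier to picture with a rough example. The sketch below is a simplified proxy assumed for illustration only: GPTZero’s own signals are model-based and are not reproduced here. It splits text into sentences with a naive regex and reports the coefficient of variation (standard deviation divided by mean) of sentence lengths in words. A value near 0 means every sentence is about the same length, the flat rhythm described above; higher values mean more uneven pacing. The function names and sample sentences are hypothetical.

```python
import re
import statistics


def sentence_lengths(text: str) -> list:
    """Split text into sentences with a naive regex and count the words in each."""
    # Split after ., !, or ? followed by whitespace; crude, but enough for a sketch.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness_proxy(text: str) -> float:
    """Coefficient of variation of sentence lengths (population std dev / mean).

    0.0 means every sentence has the same length; larger values mean more
    uneven pacing. Illustrative proxy only, not GPTZero's metric.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


if __name__ == "__main__":
    flat = "I study every night. I review my notes. I take short breaks."
    uneven = (
        "I study at night. Sometimes, when a deadline sneaks up on me and the "
        "library is packed, I end up rewriting my notes twice. Then I sleep."
    )
    print(f"flat:   {burstiness_proxy(flat):.2f}")
    print(f"uneven: {burstiness_proxy(uneven):.2f}")
```

Running it prints 0.00 for the flat sample and about 0.87 for the uneven one. Sentence length is only one crude dimension of pacing, so treat this as intuition, not as a detector.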

Why Free AI Humanizers Fail Today

After I reviewed the entire dataset, the reasons for the failures became clear:

- They don’t adjust how ideas unfold

- They avoid changes to pacing

- They keep the text aligned with AI-generated structure

- They lack human imperfections

- Detectors have likely already been trained on large numbers of similar rewrites

- Deep rewriting is far too expensive for free services

This combination leaves free AI humanizers unable to pass strong detectors.
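Shallow rewriting, where the text stays aligned with its original structure, can be checked directly if you keep the original and the rewritten text side by side. As an illustration only (I did not compute this metric in my test), the sketch below measures how much of the original word sequence survives, using the standard library’s `difflib.SequenceMatcher` on lowercase word lists. `words` and `word_overlap` are hypothetical helper names, and the example sentences are invented.

```python
import difflib
import re


def words(text: str) -> list:
    """Lowercase the text and keep only word tokens (letters, digits, apostrophes)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def word_overlap(original: str, rewritten: str) -> float:
    """Similarity of two word sequences, from 0.0 (nothing shared) to 1.0 (identical).

    SequenceMatcher.ratio() returns 2*M/T, where M is the number of words in
    matching blocks (in order) and T is the total word count of both texts.
    """
    matcher = difflib.SequenceMatcher(None, words(original), words(rewritten), autojunk=False)
    return matcher.ratio()


if __name__ == "__main__":
    original = "I usually study in the library because it helps me focus on my notes."
    synonym_swap = "I typically study in the library since it helps me concentrate on my notes."
    restructured = "Focusing on my notes is easier for me in the library, so that is usually where I study."
    print(f"synonym swap: {word_overlap(original, synonym_swap):.2f}")
    print(f"restructured: {word_overlap(original, restructured):.2f}")
```

The synonym swap scores about 0.79 and the restructured sentence about 0.19, even though the restructured version reuses several of the same words, because this measure rewards words that stay in the same order. A high score after “humanizing” is the numeric version of shallow rewriting: words replaced, skeleton intact.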

What This Means for Students and Writers

The marketing promises and the reality exist in different universes. If you use one of these tools hoping it will make your AI-generated essay undetectable, you are submitting your work with a false sense of security. GPTZero flagged every output in my test. Originality AI may well flag it too. If your professor runs the essay through GPTZero, they may see a 100% AI score, and the humanizer will have accomplished nothing except wasting your time.

This creates a genuinely difficult situation for students and writers who face legitimate pressure from detection systems. Academic integrity policies increasingly rely on AI detection. Content platforms use these tools to enforce monetization rules. Clients sometimes demand proof that freelance work is human-written. The need for solutions is real, which makes the gap between these tools’ promises and their actual capabilities particularly frustrating.

Free humanizers can serve some limited purposes. They might help you rephrase an awkward sentence or break up overly complex paragraphs. For basic editing assistance, they function adequately. But for their advertised primary purpose, bypassing AI detection, every one I tested failed completely. It is worth understanding this limitation before you depend on these tools, especially when academic or professional consequences are involved.

How This Article Changed Direction

Walking into this project, I had the structure already planned: introduction, methodology, ranked results from ten to one, recommendations for each tool’s best use cases, and a conclusion crowning the most effective option. The spreadsheet was built. The testing template was ready. I had allocated time to write detailed reviews of each tool’s interface and features.

Around test five or six, watching yet another tool produce text that GPTZero immediately flagged, I started to realize the ranked list was not happening. The data was not cooperating with the format. By test seven, I faced a choice: force the content into the planned structure and create a misleading ranking based on irrelevant criteria, or acknowledge what the testing actually showed and publish something more honest but less clickable.

Instead of forcing a ranking, I chose to publish the reality of the test.

The truth is simple:

Not a single free AI humanizer I tested bypassed GPTZero. Every output reached a 100% AI score.

This isn’t meant to criticize developers. Free rewriting tools face real limits, and detectors continue to evolve. But it’s important for readers to understand the current state of these tools and avoid relying on promises that don’t match the results.

Final Thoughts

The results of this test left me with a clearer understanding of where free AI humanizers stand today. These tools can help with light rewriting, but they are not capable of producing text that feels genuinely human under close inspection.

Free tiers simply don’t reshape the deeper structure, rhythm, or reasoning that detectors rely on. For anyone who needs dependable results, whether students, bloggers, or everyday writers, it’s important to treat humanizers as helpers, not solutions.

Good writing still comes from careful revision, personal insight, and a voice shaped by real experience.

If you want a better sense of how AI detectors perform under real conditions, I recently published a comparison of the most reliable detection tools available right now. You can read it here:

7 Best Free AI Content Detectors: Real Accuracy Data & Rankings

FAQs

Q: Are any free AI humanizer tools reliable today?

A: Based on my results, no free tool produced output that passed GPTZero. They can help with tone or clarity, but none of them produced text that passes as human writing under strict detection models.

Q: Can GPTZero be bypassed at all?

A: Not by using free, light rewriting tools. Stronger methods require deep restructuring, varied pacing, human-style reasoning, and personalized edits. These steps are far beyond what free tools currently offer.

Q: Is it safe to trust “undetectable AI” claims from online tools?

A: Most claims are marketing language. When tested with consistent input and a recognized detector, none of the tools lived up to the promise. Users should remain cautious and evaluate results themselves.

Q: What should students or writers do instead of relying on humanizer tools?

A: Use rewriting tools to clean up drafts, then revise manually. Add personal insights, varied sentence lengths, reflections, and natural transitions. Human writing still requires human input, especially under strict AI detection systems.