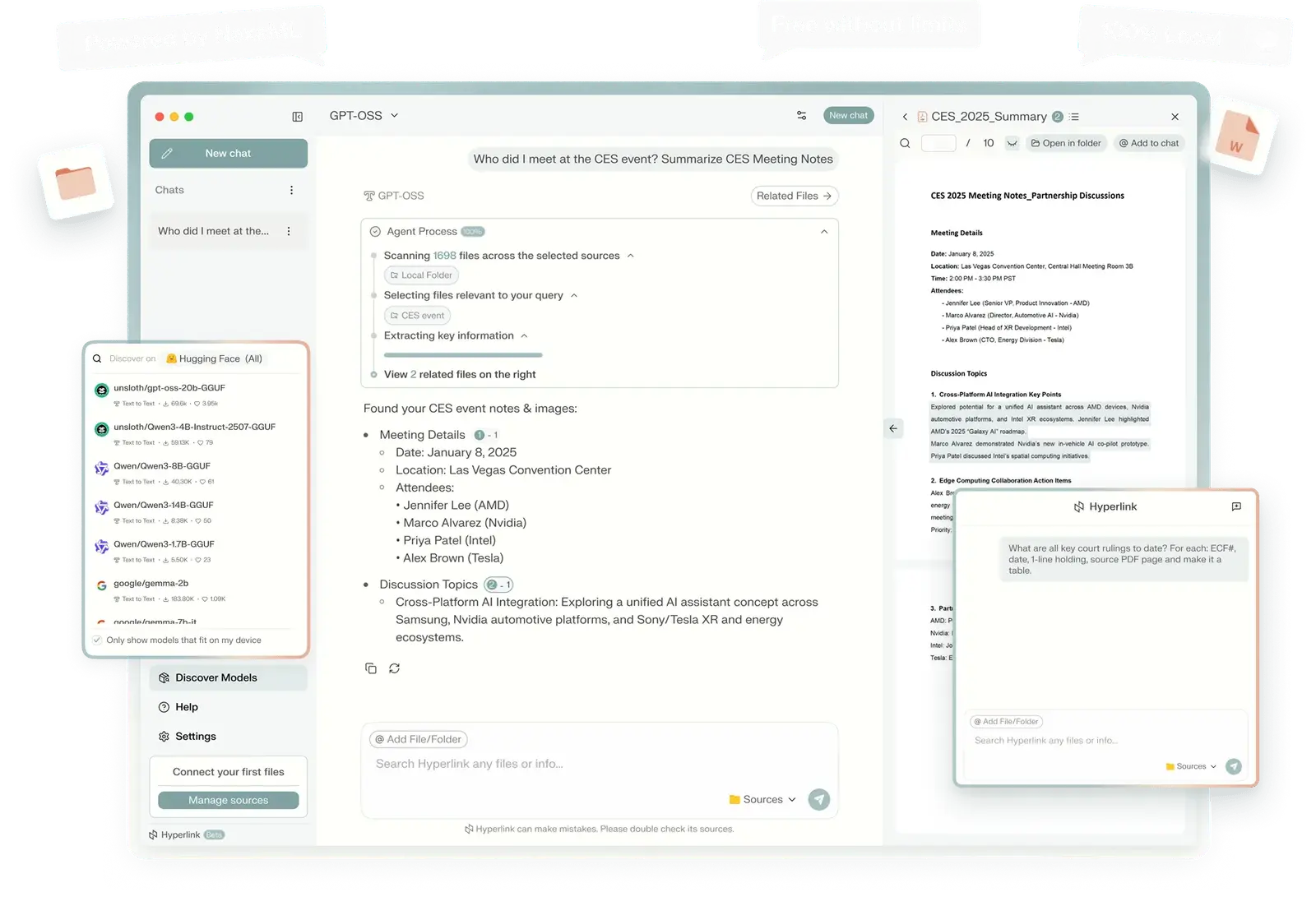

Hyperlink is a 100% free desktop AI assistant that allows you to search, analyze, and interact with your local files without sending any data to the cloud.

It runs entirely on your computer, answers natural-language questions about your documents, notes, and images, and cites the files each answer comes from, using whichever local LLM you prefer.

The desktop app functions like having Perplexity or ChatGPT installed directly on your machine, but with one key difference: it understands your personal files.

It searches through your PDFs, Word documents, notes, screenshots, and presentations to deliver contextual answers with source citations. Everything happens offline, which means your sensitive documents never leave your device.

Features

- 100% Offline Processing: All AI computation runs locally on your CPU, GPU, or NPU.

- Natural Language Search: Ask questions in plain English instead of relying on keyword matching. The system understands context and intent.

- Cited Answers: Every response includes direct citations showing which files the information came from. You can verify sources instantly rather than trusting an AI’s memory.

- Unlimited File Context: The system indexes and searches across thousands of documents at once, so you aren't bound by the upload caps and context limits of cloud chat services.

- Automatic Syncing: Point Hyperlink at your folders and it monitors them continuously. New files get indexed automatically without manual updates.

- Vision Capabilities: Extract text from screenshots, scanned receipts, charts, and photos. Visual content becomes searchable just like text documents.

- Multiple Model Support: Download open-source LLMs from Hugging Face and run them locally.

- Cross-Platform: Available for Apple Silicon Macs and for Windows PCs with NVIDIA or AMD GPUs.

- Folder and Document Targeting: Use @ to specify particular folders or files for focused searches. This helps when you want insights from specific documents rather than your entire library.
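To make the "cited answers" and "@ targeting" ideas concrete, here is a minimal sketch of retrieval with citations. It is not Hyperlink's implementation: the `Chunk` type, the `embed`, `cosine`, and `search` functions, and the example paths are all hypothetical. In particular, `embed` is a bag-of-words placeholder for the neural embedding model a real semantic search engine would use. The point is the shape of the pipeline: every indexed passage keeps its source path, an optional scope prefix restricts the search the way @ does, and results come back as (source, passage) pairs that an answer can cite.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Chunk:
    source: str  # file path reported as the citation
    text: str    # passage extracted from that file


def embed(text: str) -> Counter:
    # Placeholder for a neural embedding model: a simple bag-of-words count.
    # A real semantic search engine would compare learned dense vectors instead.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(index: List[Chunk], query: str, scope: Optional[str] = None,
           k: int = 3) -> List[Tuple[str, str]]:
    """Return up to k (source, passage) pairs, best match first.

    `scope` mimics @-targeting: only chunks whose path starts with it are considered.
    """
    q = embed(query)
    scored = [
        (cosine(q, embed(c.text)), c)
        for c in index
        if scope is None or c.source.startswith(scope)
    ]
    scored = [(s, c) for s, c in scored if s > 0]  # drop chunks with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(c.source, c.text) for _, c in scored[:k]]


# Hypothetical example index.
index = [
    Chunk("notes/acme/meeting.md", "Acme asked for a revised quote before the April deadline."),
    Chunk("notes/acme/contract.md", "Payment terms are net 30 days."),
    Chunk("notes/personal/journal.md", "Deadline anxiety again this week."),
]

for source, passage in search(index, "What is the Acme deadline?", scope="notes/acme/"):
    print(f"[{source}] {passage}")
```

Scoping to `notes/acme/` leaves the personal journal out of the results even though it also mentions a deadline, which is the behavior @ targeting is meant to give you.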

Use Cases

- Meeting Preparation: Pull together client backgrounds, previous discussion points, and action items from past meeting transcripts and notes. Sales professionals can review everything about a client account while traveling offline.

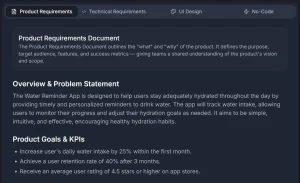

- Research and Writing: Students can query their lecture notes, textbooks, and research papers to prepare for exams or write essays. The system identifies relevant passages across multiple sources and synthesizes information with proper citations.

- Technical Troubleshooting: Developers can search through documentation, code comments, and error logs to debug issues faster. Ask about version conflicts or API changes and get answers from your local technical docs.

- Legal and Compliance Work: Review contracts, agreements, and legal documents to extract deadlines, terms, and dependencies. The offline nature makes it suitable for confidential legal materials that can’t be uploaded to cloud services.

- Personal Knowledge Management: Connect your Obsidian vault, journal entries, or personal notes to track patterns in your thinking over time. Find connections between ideas you recorded months or years apart.

How to Use It

1. Visit Hyperlink’s official website and download the latest installer for your operating system. Mac users need an Apple Silicon chip, while Windows users need a GPU with at least 8GB of VRAM for GPU acceleration or at least 32GB of RAM for other configurations.

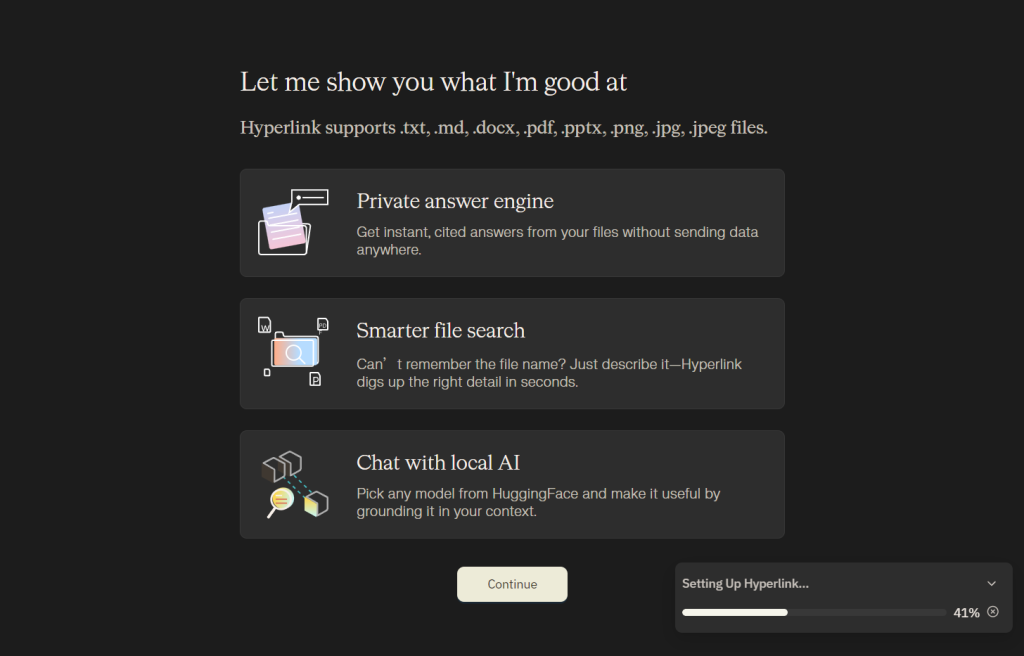

2. Launch the application and run through the initial setup wizard. The app will prompt you to download a recommended language model, but you can skip this and choose your preferred LLM later.

3. Connect your folders by selecting the directories you want Hyperlink to index. The system will begin processing your files in the background. A 1GB folder of documents typically takes 4-5 minutes to index on NVIDIA RTX hardware.

4. Start asking questions in the chat interface. Type @ followed by a folder or file name to focus your search on specific sources. Use + to add files as reference material for your query.

5. Click “Discover Models” to browse available LLMs from Hugging Face. The recommended models include gpt-oss (20B parameters) and Qwen3 (various sizes from 1.7B to 14B). Download models based on your hardware capabilities. Smaller models run faster but may produce less nuanced answers.

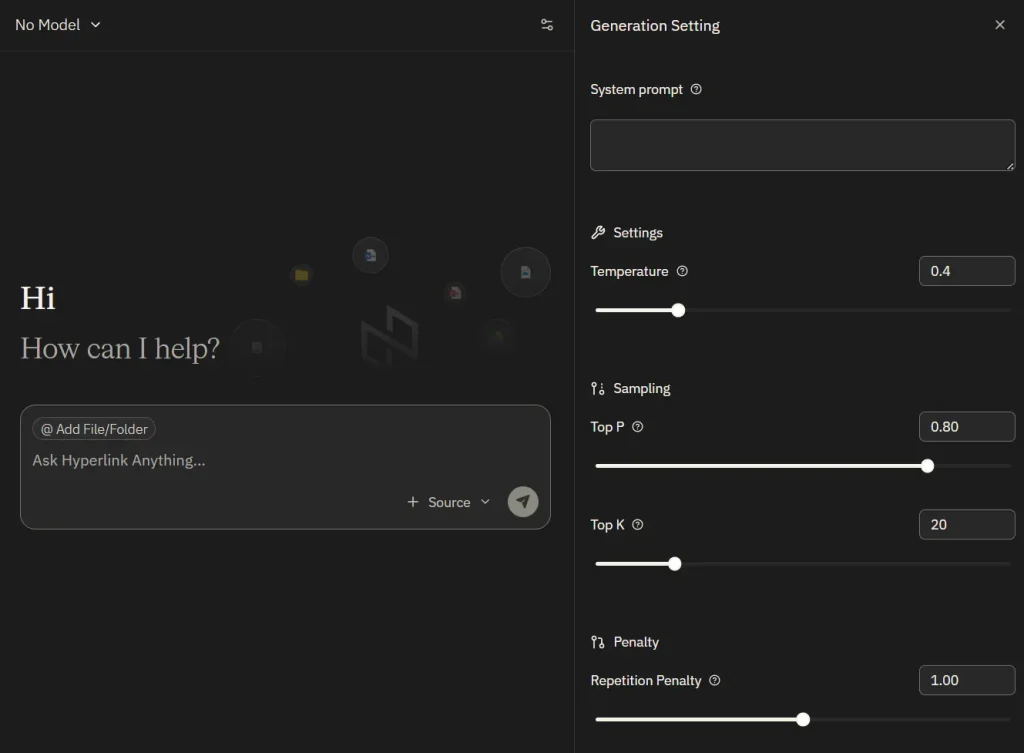

6. Adjust generation settings if needed. The app provides controls for temperature, top-p/top-k sampling, repetition penalty, and other parameters that affect response style.

7. Use the keyboard shortcut (Cmd+Shift+H on Mac or Ctrl+Shift+H on Windows) to summon the floating interface from any application. This lets you query your files without switching windows.
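The generation settings from step 6 are standard LLM sampling controls. The sketch below shows what each one typically does to the model's raw next-token scores (logits). It is a generic illustration, not Hyperlink's code: the function name, default values, and the order in which the filters are applied are assumptions, and real inference engines may differ in details.

```python
import math
import random
from typing import List, Optional, Sequence


def sample_next_token(logits: Sequence[float], prev_tokens: Sequence[int],
                      temperature: float = 0.7, top_k: int = 40, top_p: float = 0.9,
                      repetition_penalty: float = 1.1,
                      rng: Optional[random.Random] = None) -> int:
    """Pick the next token id from raw logits (one score per vocabulary entry)."""
    rng = rng or random.Random()
    logits = list(logits)

    # Repetition penalty (CTRL-style): shrink the scores of tokens already generated.
    for t in set(prev_tokens):
        logits[t] = logits[t] / repetition_penalty if logits[t] > 0 else logits[t] * repetition_penalty

    # Temperature 0 is treated as greedy decoding: always take the top-scoring token.
    if temperature <= 0:
        return max(range(len(logits)), key=lambda i: logits[i])

    # Temperature: values < 1 sharpen the distribution, values > 1 flatten it.
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for a numerically stable softmax
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Top-k: keep only the k most probable tokens (0 disables the filter).
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    if top_k > 0:
        order = order[:top_k]

    # Top-p (nucleus): keep the smallest high-probability prefix whose
    # renormalized cumulative mass reaches top_p.
    mass = sum(probs[i] for i in order)
    kept: List[int] = []
    cumulative = 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i] / mass
        if cumulative >= top_p:
            break

    # Sample among the survivors in proportion to their probabilities.
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]


rng = random.Random(0)
print(sample_next_token([2.0, 1.0, 0.5, -1.0], prev_tokens=[0], rng=rng))
```

Lower temperature, smaller top-k, and smaller top-p all narrow the set of candidate tokens, which makes answers more focused and repeatable; raising the repetition penalty discourages the model from looping on the same words.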

Pros

- Absolute data privacy: Your files never leave your computer.

- No subscription costs: Free for both personal and workspace use with no hidden limitations on features.

- Flexible model ecosystem: Choose from hundreds of open-source LLMs rather than being locked into a single provider’s technology.

- Offline reliability: Works on airplanes, in areas with poor connectivity, or anywhere internet access isn’t available.

Cons

- Hardware requirements: Windows users need a GPU with at least 8GB of VRAM or 32GB of RAM, and Mac users need Apple Silicon.

- Limited file type support: Currently handles PDF, Word, Markdown, text, PowerPoint, and images, but lacks support for databases.

- Intel Mac support pending: Users with Intel-based Macs must wait for future updates to run the application.

- Model management responsibility: Users must manually download and update models rather than having them automatically maintained.

Related Resources

- Nexa AI GitHub Repository: Access the open-source SDK that powers Hyperlink’s on-device AI capabilities.

- Nexa AI Discord Community: Join the official Discord server to report bugs, request features, and connect with other Hyperlink users.

- Hugging Face Model Hub: Browse thousands of open-source language models compatible with Hyperlink. Filter by size, capability, and license to find models that match your hardware and use case.

FAQs

Q: Can Hyperlink access cloud storage like Google Drive or Dropbox?

A: Not currently. The app only indexes files stored locally on your computer. Cloud integration features are listed as “coming soon” but aren’t available yet.

Q: How does Hyperlink compare to ChatGPT or Claude for document analysis?

A: Hyperlink searches your existing documents and cites its sources. ChatGPT and Claude answer from their training data and can see your files only if you upload them, which compromises privacy. In return, they typically offer more sophisticated reasoning and better writing quality. Hyperlink trades some of that quality for privacy and the ability to search your actual files.

Q: What happens if I delete or move files after indexing?

A: Hyperlink monitors connected folders and updates its index automatically when files change, move, or get deleted. The sync happens in the background, so your search results stay current without manual reindexing.
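How Hyperlink detects changes isn't described here, so the following is only a minimal sketch of one common approach: take snapshots of file metadata and compare them. The `snapshot` and `diff` helpers are hypothetical names. A production watcher would more likely subscribe to the operating system's file-change notifications instead of rescanning, but the comparison logic is the same: new paths get indexed, vanished paths get dropped, and paths whose modification time or size changed get reindexed. A moved file shows up as one deletion plus one addition.

```python
import os
import tempfile
from pathlib import Path
from typing import Dict, List, Tuple

Snapshot = Dict[str, Tuple[int, int]]


def snapshot(folder: str) -> Snapshot:
    """Record (modification time in ns, size in bytes) for every file under folder."""
    state: Snapshot = {}
    for path in Path(folder).rglob("*"):
        if path.is_file():
            st = path.stat()
            state[str(path)] = (st.st_mtime_ns, st.st_size)
    return state


def diff(old: Snapshot, new: Snapshot) -> Tuple[List[str], List[str], List[str]]:
    """Compare two snapshots. A moved file shows up as one deletion plus one addition."""
    added = sorted(new.keys() - old.keys())
    deleted = sorted(old.keys() - new.keys())
    modified = sorted(p for p in new.keys() & old.keys() if new[p] != old[p])
    return added, deleted, modified


# Demo in a throwaway folder: one file edited, one moved, one created.
with tempfile.TemporaryDirectory() as folder:
    Path(folder, "notes.txt").write_text("v1")
    Path(folder, "report.txt").write_text("draft")
    before = snapshot(folder)

    Path(folder, "notes.txt").write_text("version 2")  # edit (size changes)
    Path(folder, "archive").mkdir()
    os.replace(Path(folder, "report.txt"), Path(folder, "archive", "report.txt"))  # move
    Path(folder, "todo.txt").write_text("new file")  # create

    added, deleted, modified = diff(before, snapshot(folder))

print("added:", [os.path.relpath(p, folder) for p in added])
print("deleted:", [os.path.relpath(p, folder) for p in deleted])
print("modified:", [os.path.relpath(p, folder) for p in modified])
```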

Q: Which language model should I download first?

A: Start with Qwen3-4B or Qwen3-8B if you have 16-32GB RAM. These models balance response quality with reasonable speed. If you have a powerful GPU with 24GB+ VRAM, try gpt-oss-20b for better answers at the cost of slower generation. Users on lower-end hardware should stick with Qwen3-1.7B to maintain acceptable performance.
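A quick way to see why model size maps to RAM this way is to estimate how much memory the weights alone occupy: parameter count times bits per weight, divided by 8 to get bytes. The sketch below does that arithmetic for the models named above. The precisions (4, 8, and 16 bits) are illustrative assumptions, since the formats Hyperlink downloads may differ, and the parameter counts are the nominal ones in the model names. The estimate ignores the KV cache, activations, and runtime overhead, so actual usage is higher.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Memory needed for the model weights alone, in gigabytes (1 GB = 10**9 bytes).

    Ignores the KV cache, activations, and runtime overhead, so real usage is higher.
    """
    return params_billion * bits_per_weight / 8


# Nominal parameter counts from the model names; the precisions are assumptions.
models = {"Qwen3-1.7B": 1.7, "Qwen3-4B": 4, "Qwen3-8B": 8, "gpt-oss-20b": 20}
for name, params in models.items():
    row = ", ".join(f"{bits}-bit: {weight_memory_gb(params, bits):.1f} GB" for bits in (4, 8, 16))
    print(f"{name:12} {row}")
```

By this estimate, Qwen3-8B's weights need about 4 GB at 4-bit precision but about 16 GB at 16-bit, so on a 16-32GB machine the quantized builds leave room for the operating system and your other applications.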